AI Regulation: Striking the Balance

Should the Government Regulate the Use of Artificial Intelligence?

In contemplating the vexing question of whether the government should meddle in the affairs of artificial intelligence, one is irresistibly drawn into the abyss of ethical and existential quandaries. Much like the characters in my own literary opus, let us embark upon a profound exploration of this topic, where the human soul and the march of progress converge in a tumultuous narrative.

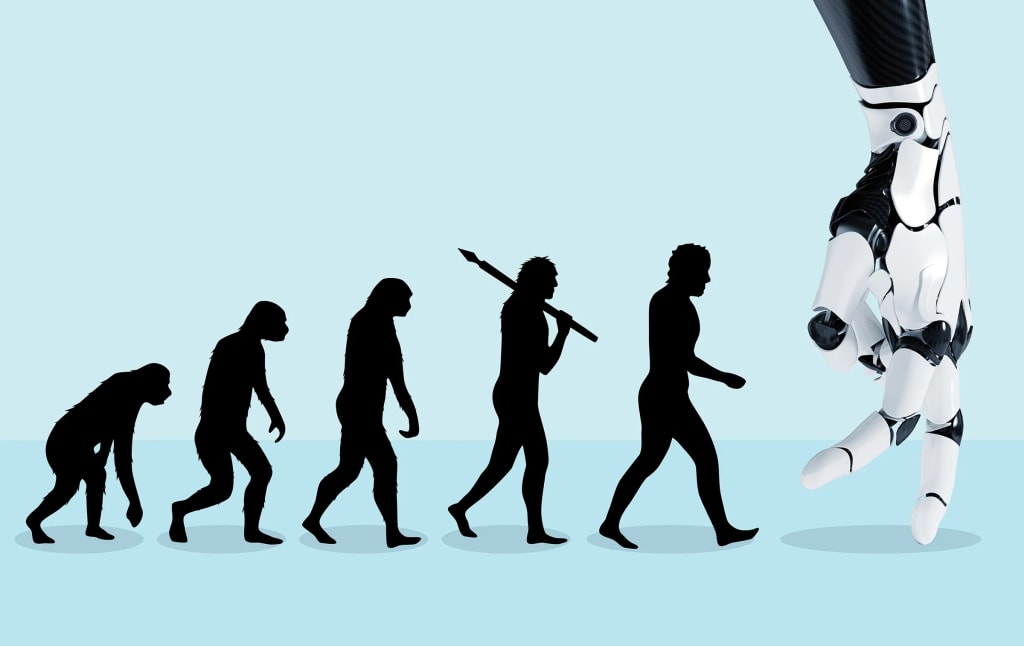

The very essence of this inquiry lies in the intersection of human ambition and technological innovation. Artificial intelligence, that modern Prometheus, has bestowed upon us the power to create entities that possess a semblance of intellect, and yet, they remain devoid of conscience, morality, or empathy. In this creation, we tread perilously close to the territory where our own ethical foundations are tested.

Should the government, that temporal authority, intervene in this unfolding drama? On the one hand, the unbridled autonomy of artificial intelligence may pose an existential threat to humanity. The potential for autonomous AI systems to make decisions that run counter to our collective well-being is a haunting specter. The annals of history are replete with examples of man's creations spiraling out of control, and AI may prove no exception.

Yet, on the other hand, the weight of government regulation can stifle innovation and smother the very spark of creativity that has driven our species forward. A delicate balance between progress and prudence must be struck, for it is a Pandora's box that we are opening.

Furthermore, the ethical dimensions of AI regulation are labyrinthine. Who shall decide what is ethical in a realm where algorithms and silicon minds operate beyond our immediate comprehension? The moral compass guiding AI decisions is elusive, and the very notion of legislating ethics into lines of code is a formidable challenge.

In the heart of this debate lies the question of individual liberty. Should we, as individuals, have the right to unbridled access to AI technologies, to tinker and experiment as we see fit? Or does the government, as the guardian of the common good, have a duty to restrict access in the name of safety and security?

One cannot help but be reminded of my own characters, those tormented souls wrestling with the consequences of their actions, seeking redemption and meaning in a world fraught with moral ambiguities. In the case of AI regulation, we, as a society, are tasked with crafting a narrative that balances the pursuit of knowledge and progress with the preservation of our values and humanity itself.

The question of whether the government should regulate the use of artificial intelligence is, then, a profound and existential one. It demands introspection and wisdom, for the path we choose will shape the very essence of our future. As we grapple with this conundrum, we are reminded that the truest test of our humanity lies not in our ability to create, but in our capacity to wield that creation for the betterment of all.

This debate transcends mere technological advancement; it delves into the core of our shared humanity. The essence of being human lies in our capacity to make choices, to navigate the moral labyrinth that is existence. Therefore, the regulation of artificial intelligence is not just a matter of policy; it is a reflection of our values, our hopes, and our fears.

We must acknowledge that AI has the potential to transform industries, streamline processes, and enhance our lives in countless ways. It can drive economic growth, advance medical research, and even help address some of the world's most pressing challenges. However, this incredible power comes with great responsibility.

The specter of unchecked AI looms large. As these systems become increasingly autonomous and capable of making decisions that impact our lives, we must grapple with the ethical dilemmas they pose. Who bears the responsibility when an AI system makes a morally ambiguous choice? How do we ensure that these systems align with our values and do not perpetuate biases and discrimination?

Government intervention can provide a framework for addressing these questions. Regulations can set standards for transparency, accountability, and fairness in AI systems. They can help establish guidelines for the ethical development and deployment of AI, ensuring that these systems serve the common good rather than narrow private interests.

Yet, we must also tread cautiously. Excessive regulation can stifle innovation and hinder the potential benefits of AI. Striking the right balance between oversight and freedom is a complex and delicate task, one that requires careful consideration and ongoing refinement.

In the end, the question of whether the government should regulate artificial intelligence is not a matter of "yes" or "no" but a nuanced exploration of how we can harness the potential of AI while safeguarding our values and humanity. It is a narrative that challenges us to grapple with the profound implications of our technological creations and to chart a path forward that reflects the best of what it means to be human.
