Cognitive Bias — Part 1: What they are. What they do.

Distortions in judgments and decisions

Cognitive bias refers to the ways in which the human mind tends to process information that may lead to inaccurate or distorted outcomes (Haselton, Nettle & Andrews, 2005). These biases are systematic patterns of deviation from norms or rationality in judgment, and they can lead to inaccurate perceptions, illogical conclusions, and even irrational behavior when individuals rely on their own constructed reality rather than objective reality (Ariely, 2008; Baron, 2007). In other words, an individual’s thoughts and behaviors may be determined more by how they construct reality than by unbiased input.

The concept of cognitive bias has been studied for decades; it was first introduced in 1972 by Amos Tversky and Daniel Kahneman (Kahneman & Frederick, 2002). Research indicates that all individuals use these automatic assumptions to guide decisions. Biases develop as characteristic thought patterns from experience and knowledge, as well as from the way people process information (Bless, Fiedler & Strack, 2004; Morewedge & Kahneman, 2010).

Cognitive bias is closely related to the concept of heuristics, with subtle differences. A heuristic is “a simple procedure that helps find adequate, though often imperfect, answers to difficult questions” (Kahneman, 2011, p. 98), and its relationship to cognitive bias can be summarized as “ . . . cognitive biases stem from the reliance on judgmental heuristics” (Tversky & Kahneman, 1974, p. 1130). More specifically, Gonzalez (2017, p. 251) drew the distinction as follows: “Heuristics are the ‘shortcuts’ that humans use to reduce task complexity in judgment and choice, and biases are the resulting gaps between normative behavior and the heuristically determined behavior.”
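To make the idea of a "gap between normative behavior and the heuristically determined behavior" concrete, here is a minimal Python sketch. It is an illustration only, not a method taken from the cited sources: the numbers are made up, the normative answer is assumed to be given by Bayes' rule, and `heuristic_estimate` is a hypothetical shortcut that judges purely by how well the evidence fits and ignores how rare the condition is.

```python
def normative_posterior(prior, hit_rate, false_alarm_rate):
    """Normative answer via Bayes' rule: P(condition | positive evidence)."""
    evidence = hit_rate * prior + false_alarm_rate * (1 - prior)
    return hit_rate * prior / evidence


def heuristic_estimate(hit_rate):
    """Hypothetical shortcut: judge only by how well the evidence fits,
    ignoring the base rate (prior) entirely."""
    return hit_rate


# Made-up numbers, chosen only for illustration.
prior = 0.01             # the condition is rare: 1 in 100
hit_rate = 0.90          # evidence shows up 90% of the time when the condition is present
false_alarm_rate = 0.10  # evidence shows up 10% of the time when it is absent

normative = normative_posterior(prior, hit_rate, false_alarm_rate)
heuristic = heuristic_estimate(hit_rate)
bias = heuristic - normative  # the "gap" in Gonzalez's sense

print(f"normative: {normative:.3f}  heuristic: {heuristic:.3f}  bias: {bias:.3f}")
```

In this sketch the shortcut is fast and needs only one number, but it lands far from the normative answer whenever the condition is rare. That difference is what the definition above calls the bias.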

Cognitive biases are often seen as negative because they can lead to illogical behavior, but humans use these mental shortcuts all the time, out of necessity, to navigate the environment efficiently and adapt to circumstances (i.e., to survive). Typically, cognitive biases are mental shortcuts that help humans understand and make sense of the world more quickly than they otherwise could.

Biases are often a result of the brain's attempt to simplify information processing. They can work as rules of thumb that help one make sense of experience, while themselves being prone to various forms of memory distortion or logical error. Some of these biases have a memory component: for a variety of reasons, the way an individual recalls an experience may be skewed, which can then result in biased thinking and decision-making.

Mechanisms of attention also give rise to cognitive biases. Since attention is a finite resource, the brain decides, in every conscious moment, what is worthy of further processing (i.e., attention) and what is not. Hence, humans rely on these mental shortcuts when navigating their day-to-day lives, taking note only of the very select group of events that are relevant enough at a given time rather than attending to the practically unlimited stimuli to which they are continuously subjected.

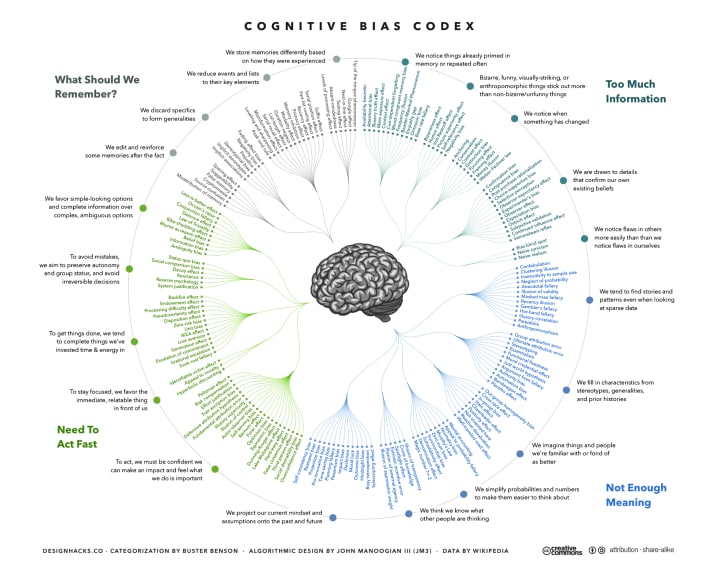

In an attempt to define and classify cognitive biases and heuristics, John Manoogian III and Buster Benson developed the Cognitive Bias Codex (see Figure 1) to visually represent the known biases and their associated underlying causes or purposes (Benson, 2016).

Each quadrant of the codex represents a specific group of cognitive biases:

1. What should we remember? - Biases that affect our memory for people, events, and information

2. Too much information - Biases that affect how we perceive certain events and people

3. Not enough meaning - Biases that we use when we have too little information and need to fill in the gaps

4. Need to act fast - Biases that affect how we make decisions

In short, cognitive bias is a term used to describe the systematic ways in which the human mind can shape and distort the information it processes. Commonly discussed examples include a bias towards extremity (thinking that everything is extreme), confirmation bias (favoring information that supports one’s existing beliefs), and mental inertia (not changing one’s mind even after ample evidence has been presented). Dual process theory, which distinguishes fast, intuitive thinking from slow, deliberate thinking, is not itself a bias but a framework often used to explain how such biases arise. Kahneman and Tversky (1996), who first studied this concept, argue that the study of cognitive biases has practical implications for areas including clinical judgment, entrepreneurship, finance, and management.

Read on in this series for an in-depth examination of specific cognitive biases – how they work and when they don’t.

References

Ariely, D. (2008). Predictably Irrational: The Hidden Forces That Shape Our Decisions. New York, NY: HarperCollins.

Baron, J. (2007). Thinking and Deciding (4th ed.). New York, NY: Cambridge University Press.

Bless, H., Fiedler, K., & Strack, F. (2004). Social cognition: How individuals construct social reality. Hove and New York: Psychology Press.

Gonzalez, C. (2017). Decision-making: A cognitive science perspective. In S. Chipman (Ed.), The Oxford handbook of cognitive science (pp. 249–264). Oxford University Press. Accessed on July 9, 2020 from https://www.cmu.edu/dietrich/sds/ddmlab/papers/oxfordhb-9780199842193-e-6.pdf

Haselton, M. G., Nettle, D., & Andrews, P. W. (2005). The evolution of cognitive bias. In D. M. Buss (Ed.), The handbook of evolutionary psychology (pp. 724–746). Hoboken, NJ: John Wiley & Sons.

Kahneman, D. (2011). Thinking, fast and slow. Farrar, Straus and Giroux.

Kahneman, D., & Frederick, S. (2002). Representativeness revisited: Attribute substitution in intuitive judgment. In T. Gilovich, D. W. Griffin, & D. Kahneman (Eds.), Heuristics and biases: The psychology of intuitive judgment (pp. 51–52). Cambridge: Cambridge University Press.

Morewedge, C. K., & Kahneman, D. (2010). Associative processes in intuitive judgment. Trends in Cognitive Sciences, 14(10), 435–440. doi:10.1016/j.tics.2010.07.004

Tversky, A., & Kahneman, D. (1974). Judgment under uncertainty: Heuristics and biases. Science, 185, 1124–1131.

About the Creator

Donna L. Roberts, PhD (Psych Pstuff)

Writer, psychologist and university professor researching media psych, generational studies, human and animal rights, and industrial/organizational psychology
