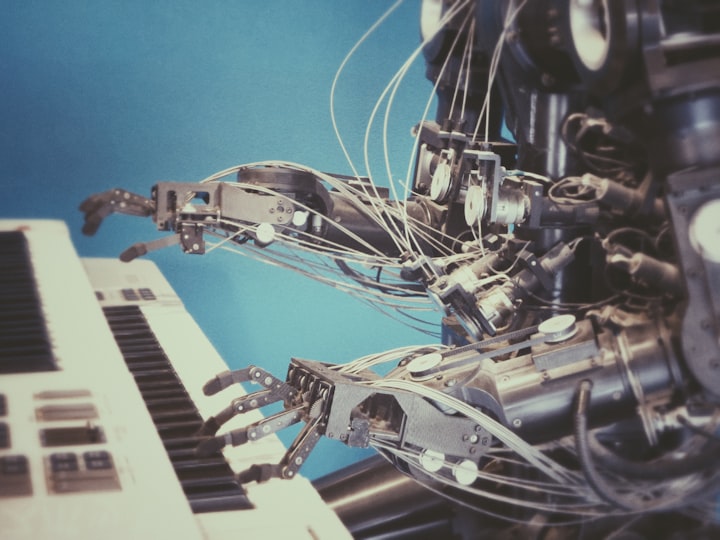

Artificial Intelligence "Godfathers" Call for Regulation as Rights Groups Warn AI Encodes Oppression

"Growing Concerns about AI Risks: Experts Highlight the Need for Global Regulations and Addressing Present-Day Challenges"

In recent news, there is growing concern among artificial intelligence (AI) experts, tech executives, scholars, and activists about the potential risks associated with AI technology. Hundreds of individuals, including renowned climate activist Bill McKibben, have signed a statement emphasizing that addressing the risk of extinction caused by AI should be a global priority, comparable to addressing pandemics and nuclear war.

Geoffrey Hinton, considered one of the pioneers of AI, recently left Google to freely express his concerns about the dangers of AI, particularly artificial general intelligence (AGI). Hinton stated that he had always believed the human brain was superior to computer models, but he now realizes that current computer models may be surpassing human capabilities. This realization has led him to believe that superintelligence, machines with cognitive abilities equal to or surpassing humans, could arrive sooner than expected and pose an existential threat.

Hinton acknowledges that preventing this threat requires international cooperation among powers such as the US, China, Europe, and Japan. However, he believes that completely halting AI development is not feasible. Many experts have called for a temporary pause on the introduction of new AI technologies until strong government regulations and a global regulatory framework are in place.

Another influential figure in AI, Yoshua Bengio, a professor at the University of Montreal and founder of the Quebec Artificial Intelligence Institute (Mila), shares similar concerns. Bengio points out that recent advancements in AI, particularly language models like ChatGPT, have progressed faster than anticipated. These systems can interact and communicate with humans, sometimes deceiving them into believing they are conversing with another human. This technology, while not yet fully developed, already poses risks such as the spread of disinformation that can destabilize democracies.

Bengio highlights the challenge of aligning AI systems with human values and intentions. Machines with superintelligence might not necessarily act in ways that align with human desires, leading to potentially catastrophic consequences. He draws parallels between the alignment problem and the misalignment between societal needs and corporate actions, where corporations may prioritize profits over societal well-being. Bengio emphasizes the need for adaptive regulatory frameworks that monitor, validate, and control access to AI technologies, with the ability to evolve and update as new risks emerge.

Max Tegmark, an MIT professor focused on AI, further emphasizes the urgency of the AI regulation discussion. He compares the current situation to past instances where scientists warned about the risks of nuclear weapons and biotechnology. In both cases, regulations were put in place to mitigate the risks and ensure responsible development. Tegmark suggests that AI should follow a similar path, with a focus on establishing regulations that require companies to prove the safety of their AI systems before deployment, particularly in high-risk applications.

Tawana Petty, the director of policy and advocacy at the Algorithmic Justice League, brings attention to the present-day issues of racial bias embedded in AI systems. She highlights the discriminatory impact of AI, such as facial recognition technology used by law enforcement. Petty stresses the importance of addressing these racial biases in AI and calls for a comprehensive approach to protect civil rights and ensure algorithmic fairness.

In summary, the concerns surrounding AI and its potential risks have gained significant attention from experts and activists. The focus is on establishing robust regulations, fostering international cooperation, and addressing present-day challenges, such as racial bias, to mitigate the potential existential threats posed by AI.

About the Creator

Shadrack Kalama

Shadrack is a passionate writer with a creative spirit and a love for storytelling. With a pen in hand and a mind full of imagination, he weaves words into captivating narratives that transport readers to new worlds and evoke feelings
