The Promise of Social Robots

Rather than fearing that robots will take our jobs, we should worry that there won’t be enough of them as the workforce ages.

THERE IS SOMETHING unnerving about Geminoid F. On the surface, she appears to be a Japanese woman in her 20s, about 165 cm tall, with long dark hair, brown eyes and soft, pearly skin. She breathes, blinks, smiles, sighs and frowns, and her lips move when she speaks in a soft, considered tone.

But the soft skin is made of silicone, and underneath that is urethane foam flesh, with a plastic head atop a metal skeleton. Her movements are powered by pressurised gas and an air compressor hidden behind her chair. She sits with her lifelike hands folded casually in her lap. She (one finds it hard to say “it”) was on loan to the Creative Robotics Lab at the University of New South Wales in Sydney when I visited; mechatronics researcher David Silvera-Tawil had set her up there for a series of experiments.

“For the first three or four days I would get a shock when I came into the room early in the morning,” he tells me. “I’d feel that there was someone sitting there looking at me. I knew there was going to be a robot inside, and I knew it was not a person. But it happened every time!”

The director of the lab, Mari Velonaki, an experimental visual artist turned robotics researcher, has been collaborating with Geminoid F’s creator, Hiroshi Ishiguro, who has pioneered the design of lifelike androids at his Intelligent Robotics Laboratory at Osaka University.

Their collaboration seeks to understand ‘presence’ — the feeling we have when another human is in our midst. How does the feeling arise? And can it be reproduced by robots?

Despite her long experience with robots, Velonaki admits to being occasionally startled by Geminoid F. “It’s not about repulsion,” she says. “It’s an eerie, funny feeling. When you’re there at night, and you switch off the pneumatics, she goes … (Velonaki makes a sighing sound, then hunches down, dropping her head lower) … it’s strange. I really like robots, I’ve worked with many robots. But there’s a moment when there’s an element of … strangeness.”

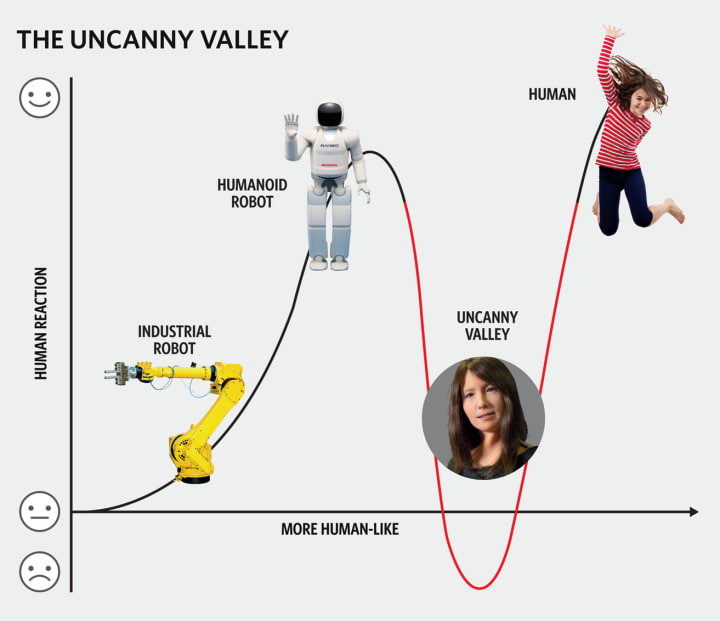

This strangeness has also been observed with hyper-real video animations. It even has a name, ‘the uncanny valley’: the sense of disjunction we experience when the impression that something is alive and human does not entirely match the evidence of our senses (see “The Uncanny Valley” at the bottom of this article).

For all her disturbing attributes, Geminoid F’s human-like qualities are strictly skin deep. She is actually a fancy US$100,000 puppet — partly driven by algorithms that move her head and face in lifelike ways, and partly guided by operators from behind the scenes. Her puppet masters supervise her questions and answers to ensure they’re relevant. Geminoid F is not meant to be smart: she’s been created to help establish the etiquette of human-robot relations.

Which is why those studying her tend to be cross-disciplinary types. “We hope that collaborations between artists, scientists and engineers can get us closer to a goal of building robots that interact with humans in more natural, intuitive and meaningful ways,” says Silvera-Tawil, now at Australia’s national science agency, CSIRO. It is hoped Geminoid F will help pave the way for robots to take their first steps out of the fields and factory cages to work alongside us. In the near future her descendants — some human-like, others less so — will be looking after the elderly and teaching children.

It will happen sooner than you think.

RODNEY BROOKS has been called “the bad boy of robotics”. More than once he has helped turn the field upside down, bulldozing shibboleths with new approaches that have turned out to be prophetic and influential.

Born in Adelaide, Australia, he moved to the United States in 1977 for his PhD and in 1984 joined the faculty of the Massachusetts Institute of Technology (MIT). There he created insect-like robots that, with very little brainpower, could navigate over rough terrain and climb steps.

At the time, the dominant paradigm held that robot mobility required massive processing power and highly advanced artificial intelligence. But Brooks reasoned that insects had puny brains and yet could move and navigate, so he gave each of his robots’ six legs its own simple, independent ‘brain’ that followed basic commands (e.g. always stay upright, whatever the direction of motion), while a simple overseer brain coordinated the legs’ movements.
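The division of labour described above can be sketched in a few lines of Python. This is a loose illustration only, not Brooks’s actual controller: the names (`LegController`, `Overseer`, `TILT_LIMIT`), the tilt threshold and the alternating ‘tripod’ gait are all hypothetical choices. Each leg runs its own tiny rule, including a ‘stay upright’ reflex that overrides everything else, while the overseer does nothing more than say which three legs should swing on each beat.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical tilt threshold (radians) beyond which a leg stops walking and braces.
TILT_LIMIT = 0.3


@dataclass
class LegController:
    """A hypothetical per-leg 'brain' that knows only about its own leg."""

    index: int
    lifted: bool = False

    def step(self, swing: bool, body_tilt: float) -> str:
        # Local reflex ("stay upright"): if the body tilts too far, plant the
        # leg and brace, whatever the overseer asked for.
        if abs(body_tilt) > TILT_LIMIT:
            self.lifted = False
            return "brace"
        # Otherwise simply follow the overseer's swing/stance signal.
        self.lifted = swing
        return "swing" if swing else "stance"


class Overseer:
    """A hypothetical coordinator: it only decides which legs swing on each beat."""

    # Alternating tripod gait. Legs 0-2 are on the left (front to back) and
    # 3-5 on the right: left-front, left-rear and right-middle move together,
    # then the other three.
    TRIPODS = ({0, 2, 4}, {1, 3, 5})

    def __init__(self) -> None:
        self.legs = [LegController(i) for i in range(6)]
        self.beat = 0

    def tick(self, body_tilt: float = 0.0) -> list[str]:
        swinging = self.TRIPODS[self.beat % 2]
        self.beat += 1
        # Each leg decides for itself; the overseer only passes the signal on.
        return [leg.step(leg.index in swinging, body_tilt) for leg in self.legs]


if __name__ == "__main__":
    robot = Overseer()
    print(robot.tick())                # ['swing', 'stance', 'swing', 'stance', 'swing', 'stance']
    print(robot.tick())                # ['stance', 'swing', 'stance', 'swing', 'stance', 'swing']
    print(robot.tick(body_tilt=0.5))   # every leg returns 'brace'
```

No single component in the sketch understands walking: the gait comes from six trivial local rules plus a very light coordinating signal, which is the spirit of the approach the paragraph describes.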

His work spawned what is now known as behaviour-based robotics, used by field robots in mining and bomb disposal.

In the 1990s, he changed tack, exploring human-robot interaction and developing humanoid robots that could interact with people and with the real world. First he created Cog, a humanoid robot of exposed wires, mechanical arms and a head with camera eyes, programmed to respond to humans. Cog’s intelligence grew in the same way a child’s does — by interacting with people. The Cog experiment fathered social robotics, in which autonomous machines interact with humans by using social cues and responding in ways people intuitively understand.

Brooks believes robots are about to become more commonplace, with ‘social robots’ leading the way. Why? It’s partly due to demographics, he argues: the population is ageing and we’re running out of workers, so robots will have to fill the breach. The percentage of working-age adults in the U.S. and Europe is currently 80% and 77% respectively, figures that have been largely unchanged for 40 years. But over the next 40 years, they will fall dramatically, to 69% for the U.S. and 64% for Europe, as the baby boomers retire.

“The statistics show that care-givers, before our eyes, are getting older,” he says. “As the people of retirement age increase, there’ll be less people to take care of them, and I really think we’re going to have to have robots to help us. I don’t mean companions — I mean robots doing things, like getting groceries from the car, up the stairs into the kitchen. I think we’ll all come to rely on robots in our daily lives.”

In the 1990s, he and two of his MIT graduate students, Colin Angle and Helen Greiner, founded iRobot Corp, maker of the Roomba robot vacuum cleaner. It was the first company to bring robots to the masses: 30 million of its products (14 million of them Roombas) have been sold worldwide, and 4.5 million are now shipped every year.

The company began by developing military robots for bomb disposal work. Known as PackBots, they are rovers on caterpillar tracks packed with sensors and a versatile arm. They’ve since been adapted for emergency rescue, handling hazardous materials or working alongside police hostage teams to locate snipers in city environments, and more than 5,000 have been deployed worldwide. They were the first to enter the damaged Fukushima nuclear plant in 2011 — although they failed in their bid to vent explosive hydrogen from the plant.

With the success of the Roomba, iRobot has since launched other domestic lines: the floor moppers Braava and Scooba, the pool cleaner Mirra, the video collaboration robot Ava and the lawn mower Terra. Its most recent robot was the tall, free-standing RP-VITA, a telemedicine healthcare robot that drives itself to pre-operative and post-surgical patients within a hospital, allowing doctors to assess them remotely.

But the company is not unique any longer — scores of others have sprouted up in the past 20 years, with robots that run across rocky terrain, manoeuvre in caves and underwater, and can be literally thrown into hostile situations to provide intelligence to their human sidekicks.

Robot skills have grown dramatically of late thanks to advances in natural language processing, artificial speech, vision and machine learning, plus the proliferation of fast and inexpensive computing aided by access to the Internet and big data. Computers can now tackle problems that, until recently, only people could handle. It’s a self-reinforcing loop — as machines understand the real world better, they learn faster.

Robots that can interact with ordinary people are the next step. This is where Brooks comes in. “With the amount of computation you have today in an embedded, low-cost system, we can do real-time 3D sensing,” says Brooks. “We also have enough understanding of human computer interaction, and human-robot interaction, to start building robots that can really interact with people. An ordinary person — with no programming knowledge — can show it how to do something useful.”

In 2008, Brooks and former MIT colleague Ann Whittaker founded a new company, Rethink Robotics, which did exactly that — created a ‘collaborative robot’ that could safely work elbow to elbow with humans. Baxter requires no programming and learns on the job, much as humans do. If you want it to pick an item from a conveyor belt, scan it and place it with others in a box, you grasp its mechanical hand and guide it through the entire routine.

Baxter was aimed at small and medium businesses for which robots had been prohibitively expensive, and priced at an affordable US$22,000. And, one has to admit, Baxter is kinda cute too: its ‘face’ is a digital screen dominated by big, expressive cartoon eyes. When its sonar detects someone entering a room, it turns and looks at them, raising its virtual eyebrows. When Baxter picks something up, it looks at the arm it’s about to move, signalling to co-workers what it’s going to do. When Baxter is confused, it raises a virtual eyebrow and shrugs.

“The first industrial robots had to be shown exactly how to follow a trajectory, and that’s how the vast majority of industrial robots are still programmed. It’s your ‘hammer’ — but you have to restructure all your ‘nails’ so that hammer can hit those nails,” says Brooks. “In the new style of robots, there’s a lot of software with common-sense knowledge built in.”

And that’s the difference: the 1.64 million industrial automatons that work tirelessly on the world’s factory floors today are mostly multipurpose manipulator ‘arms’ that move in three or more axes. And they are very limited: unaware of their surroundings and reliant on complex programming. In essence, they haven’t changed much since they began to appear on factory floors in the late 1960s: they’re still stand-alone machines stuck in cages, hardware-based and unsafe for people to be around.

Nevertheless, industrial robots are big business, used for welding, painting, assembly, packaging, product inspection and testing, all performed with speed and precision, and they can run 24 hours a day. More than 422,000 were shipped in 2018, generating US$16.5 billion in sales.

Brooks’ hope is that ‘learn-by-doing’ collaborative robots, or ‘cobots’ as they are sometimes known, will become smart and cheap enough that researchers will develop applications beyond manufacturing. And they have: Baxter is extremely popular with research labs around the world. Sadly, Rethink itself ran into financial straits and ceased operations in 2018, selling off its assets; German automation specialist Hahn Group acquired Rethink’s patents as well as its Intera 5 software platform.

That left its main rival, Denmark’s Universal Robots, to jump to the fore. Founded by the engineers Esben Østergaard, Kasper Støy and Kristian Kassow, the company launched its first cobot, the UR5, in 2008: a highly flexible robotic arm with a carrying capacity of 5 kilos, designed for repetitive or risky tasks. It now has six cobot models in various configurations. Later versions are bigger and more versatile: the UR16e, for example, can lift 16 kilos and suits heavy-payload work such as material handling, packaging and palletising.

Like Baxter, Universal Robots’ cobots are easy to set up and program but, unlike Baxter, they do not ‘learn by showing’: they are run by desktop controllers and taught tasks using tablet computers. They may not be as social, but they are reliably safe alongside humans. And, since the passing of Rethink, Universal Robots has come to hold more than half of the world market: the company has so far sold 40,000 cobots, and its revenue has grown from US$100 million in 2015 to US$234 million in 2019.

Brian Scassellati, who studied under Brooks and is now a professor of computer science at Yale University, also believes robots are about to leave the factory and enter homes and schools. “We’re entering a new era … something we saw with computers 30 years ago. Robotics is following that same curve,” he says.

In 2012, Scassellati’s Social Robotics Lab began a U.S. National Science Foundation program with Stanford University, MIT and the University of Southern California to develop a new breed of ‘socially assistive’ robots designed to help young children learn to read, overcome cognitive disabilities and perform physical exercises.

“Eventually, we’d like to have robots that can guide a child towards long-term educational goals … and basically grow and develop with the child,” he says.

Despite the rapid progress in human-robot interaction, Scassellati’s challenge is still daunting. It requires robots to detect, analyse and respond to children in a classroom; to adapt to their interactions, taking into account each child’s physical, social and cognitive differences; and to develop learning systems that achieve targeted lesson goals over weeks and months. To try to achieve this, robots have been deployed in schools and homes for up to a year, with the researchers monitoring their work and building a knowledge base.

Early indications are that real gains can be made in education, says UNSW’s Velonaki. Another of her collaborators, cognitive psychologist Katsumi Watanabe of the University of Tokyo, has tested how autistic children interact, over several days, with three types of robot: a fluffy toy that talks and reacts; a humanoid with its cables and wires visible; and a lifelike android. Autistic children usually prefer the fluffy toy to start with, but as they interact with the humanoid, and later the android, they grow in confidence and interaction skills, and have even been known to converse with the android’s human operators when they emerge from behind the controls. “By the time they go to the android, they’re almost ready to interact with a real human,” she says.

Scassellati sees them as helping, rather than replacing, teachers. “They’re never going to take the place of people, but they are going to have a very important impact on populations that need a little bit of extra help, whether that’s children learning a new language, adults who are ageing and forget a few things, or children with autism spectrum disorder who are struggling to learn social behaviour.”

Brooks has no doubt that, as people and money flood into the field, artificial intelligence with social smarts will develop fast.

Take Google’s self-driving car: the original algorithms were found to be useless in real traffic, where an understanding of social interactions was an essential part of the challenge. For example, early cars arriving at intersections with four-way stop signs were often trapped: they waited for the cars that had arrived first to move, but would not go themselves if another car started to move first. They couldn’t read other drivers’ intentions, or edge forward slightly to indicate they were about to go next. The solution came in part from incorporating social skills into the algorithm.

THE NUMBER ONE fear people have of smart, lifelike humanoid robots is not that they’re creepy, but that they will take away people’s jobs. And according to some economists and social researchers, they are right to worry.

Erik Brynjolfsson and Andrew McAfee of the MIT Center for Digital Business say that, even before the global financial crisis of 2008, a disturbing trend was visible. From 2000 to 2007, U.S. GDP and productivity rose faster than they had in any decade since the 1960s, yet employment growth slowed to a crawl. They believe this has been largely due to automation, and that the trend will only accelerate as big data, connectivity and cheaper robots become more commonplace.

“The pace and scale of this encroachment into human skills is relatively recent and has profound economic implications,” they write in their book, Race Against the Machine.

Economic historian Carl Benedikt Frey and artificial intelligence researcher Michael Osborne at the University of Oxford agree. They estimate that 47% of American jobs could be replaced “over the next decade or two”, including “most workers in transportation and logistics … together with the bulk of office and administrative support workers, and labour in production occupations”.

“As robot costs decline and technological capabilities expand, robots can thus be expected to gradually substitute for labour in a wide range of low-wage service occupations, where most U.S. job growth has occurred over the past decades,” they write. “Meanwhile, commercial service robots are now able to perform more complex tasks in food preparation, health care, commercial cleaning and elderly care.”

Perhaps unsurprisingly, the robot industry takes the opposite view: that the widespread introduction of robots in the workplace will create jobs, specifically jobs that would otherwise go offshore. Both Universal Robots and Rethink have previously stated that, once robots were introduced, their customers hired more people to deal with the increased output. “The more a company is allowed to automate, the more successful and productive it is, allowing it to employ more people,” Universal Robots chief executive Enrico Krog Iversen told The Financial Times. But the jobs they will be doing will change, he argued. “People themselves need to be upgraded so they can do something value-creating.”

Even the European Union, which launched what it calls the world’s largest robotics research program, a US$3.6 billion Partnership for Robotics known as SPARC, seems to be on board: it expects the initiative to “create more than 240,000 jobs”.

Brooks believes the fear that robots will strip-mine jobs from humanity is overplayed. The reality could be the opposite, he argues. It’s not only advanced Western economies that face a shrinking human workforce as their populations age: even China is facing a demographic crisis, with the proportion of working-age adults expected to drop to 67% by 2050, mirroring the coming crunch in Europe and the USA. By the time we’re old and infirm, we could all be reliant on robots.

Says Brooks, “I’m not worried about them taking jobs, I’m worried we’re not going to have enough smart robots to help us.”

Whatever the future holds, it’s clear that androids like Geminoid F are not about to turn into baristas or daycare educators. Social robots of the future will more likely take on roles such as teaching assistants, or caregivers able to handle some everyday tasks, while socially skilled cobots will become a common sight, working alongside people and doing the heavy lifting or the dull, repetitive tasks.

Meanwhile, the knowledge learned from human interactions with Geminoid F and her ilk will inform the future design of hardware and software of all robots. They will become friendlier, less scary and easier to use. Who knows? They may even come to be considered that most social of things: friends.

THE UNCANNY VALLEY

IN THE CLASSIC science fiction film Alien, Ash is the science officer aboard a commercial space freighter on its way back to Earth, a stiff company man who is later revealed to be an android with a disturbing agenda. In Blade Runner, ‘replicants’ are worker androids of synthetic flesh who follow programming, but begin to deviate — and rebel — at the end of their functional lives. In Moon, Gerty is all gears and switches with a smiley face and a soothing voice to help his interactions with humans, but is entirely focussed on what’s best for the mission.

They are all robots. But what is a robot? It’s probably better to start by asking, “What is a human?” For when electro-mechanical machines start resembling and — more disturbingly — behaving like humans, we have to define what they are by, first, what they are not. They are clearly not human. But what is it to be human?

For the 19th-century American essayist Ralph Waldo Emerson, humans were “an intelligence served by organs”, while for the 18th-century American polymath and scientist Benjamin Franklin they were “a tool-making animal”. But if your organs are electrical and your intelligence is driven by software, what then?

We crossed Franklin’s line with the tool-making robots of the 1960s: they are mindless and hardly sentient, but they both use and make tools. The Roman philosopher Seneca, on the other hand, saw humans as “a reasoning animal”, but a lot of what we see in advanced artificial intelligence looks eerily like reasoning.

Even as the gamut of human capabilities is increasingly replicated, mimicked or even matched by automatons with no blood coursing through their veins and no heart beating in their chests, we still believe we know what it is to be human. But when they look and act like us, and as we begin to interact with them as if they were people, a vague discomfort creeps in. If it walks and talks like a person, is it, or is it not, a person?

This is ‘the uncanny valley’. The term was coined by Japanese roboticist Masahiro Mori in 1970; his thesis was that, as the appearance of a robot is made more and more humanoid, a human observer’s emotional response becomes increasingly positive and empathic. But only up to a point. When the robot becomes too human-like, the response can quickly turn to repulsion.

“There are cute stuffed animals — and then there are those scary dolls few would want on their sofa,” Mori told The Japan Times. “With robots, it’s the same. As their design gets closer and closer to looking like humans, most people begin to feel more and more scared of them. To a certain degree, we feel empathy and attraction to a human-like object; but one tiny design change, and suddenly we are full of fear and revulsion.”

The theory has long had currency in cognitive science, with anecdotal instances in real life. In Pixar’s groundbreaking 1988 short film Tin Toy, the animated human baby who plays with the protagonist provoked strong negative reactions from test audiences, leading the film company to take the uncanny valley thesis seriously. Sony Pictures encountered a similar problem with The Polar Express in 2004; despite the film grossing well, many critics said its mannequin-like human characters, which some compared to zombies, “gave them the creeps”.

It wasn’t until 2009 that the uncanny valley effect was properly tested. A study led by cognitive scientist Ayse Pinar Saygin of the University of California, San Diego, scanned the brains of 20 subjects with no experience working with robots. They were shown 12 videos of the humanoid robot Repliee Q2 (another of Ishiguro’s creations) performing ordinary actions such as waving, nodding, taking a drink of water and picking up a piece of paper. Subjects were then shown videos of the same actions performed by a woman, and by a stripped-down version of the automaton with all of its metal circuitry visible.

MRI scans showed a clear difference in brain response when the humanoid appeared: the brain areas that lit up included those believed to contain mirror neurons. Mirror neurons are thought to form part of our empathy circuitry. In monkeys they fire when the animal performs an action and when it sees the same action performed by another. They are not yet proven to act the same way in humans.

Saygin says her results suggest that if something looks human and moves like a human, or looks like a robot and acts like a robot, we’re unfazed. However, if it looks human but moves like a robot, the brain fires up in the face of the perceptual conflict.


About the Creator

Wilson da Silva

Wilson da Silva is a science journalist in Sydney | www.wilsondasilva.com | https://bit.ly/3kIF1SO
