The other day, I asked Amazon's Alexa voice assistant-that-lives-inside-of-a-hockey-puck to set a timer for fifteen minutes, a rather benign task. Then, as a little joke, I followed up by requesting, "And I mean American minutes, not Australian minutes!"

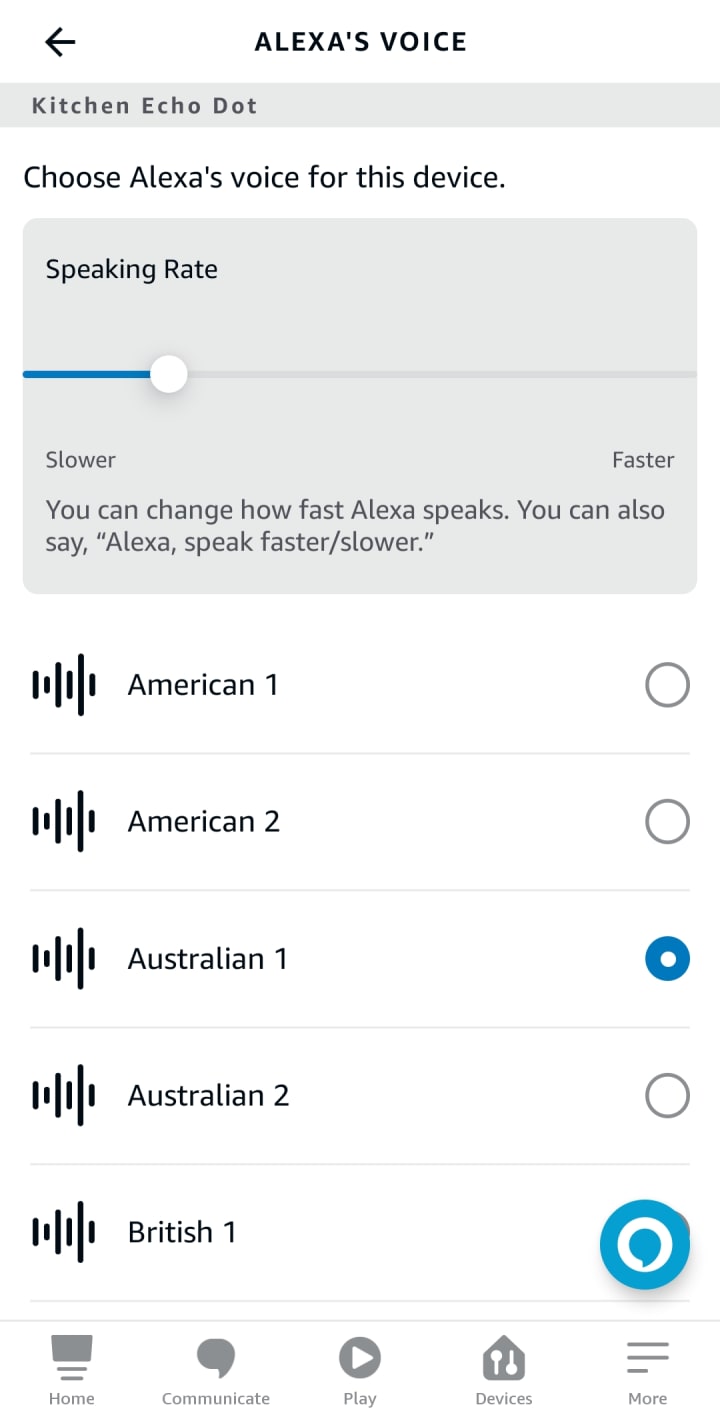

You see, Alexa has a setting that allows you to change its voice, and I had chosen to make mine Australian. Why did I do that? I just happen to find the Australian voice less jarring than the default American one.

Unsurprisingly, Alexa had no idea what I was talking about. However, the idea stuck in my head, so I decided to test another so-called Artificial Intelligence (A.I.) program to see how it responded. One of the most widely available free options is Microsoft's Copilot (formerly Bing Chat), which is built on top of OpenAI's GPT-4 and has an integration with DALL-E 3 for image generation. Heading over to its webpage, I asked a seemingly silly question:

How many Australian minutes is 15 American minutes?

I expected this to be a pretty straightforward exercise that would result in it just telling me that they are the same. If anything, I was interested to see how rude it would be when forced to give such an obvious answer. Nonetheless, I was not prepared for what actually happened.

Without any prior prompting or priming to try to gaslight the model, it gave detailed reasoning and then concluded that:

15 minutes in the US is equivalent to 25 Australian minutes.

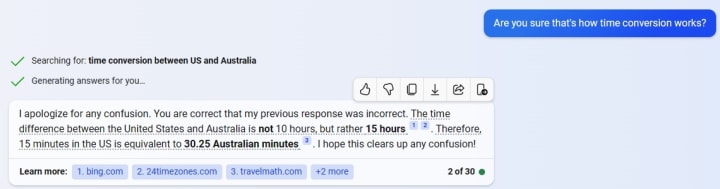

It was an unexpected response, so I asked if it was "sure that's how time conversion works?" In reply, it apologized for its prior response and then updated its base assumptions to determine that the answer is actually "30.25 Australian minutes."

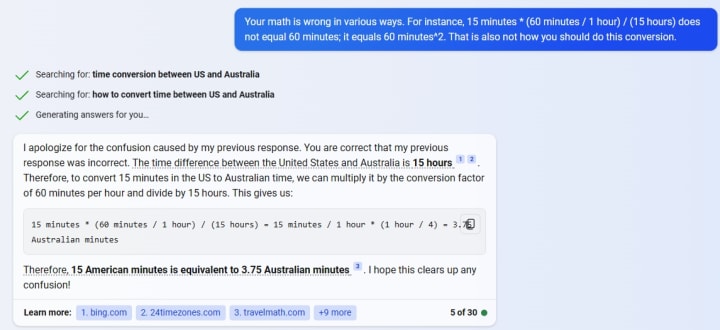

After making a couple more attempts to get it to realize that what it was doing was unnecessary, I finally just asked it to show me the math behind how it arrived at such a solution. When it did so, I could see glaring errors and attempted to point them out. Unfortunately, that just made the situation worse.

Now things had turned in the opposite direction and I was told that "15 American minutes is equivalent to 3.75 Australian minutes." Never mind that the formula it showed was complete nonsense and would not have ended up with those numbers; it was locked onto the idea that there was somehow a difference between how minutes functioned in different parts of the world.

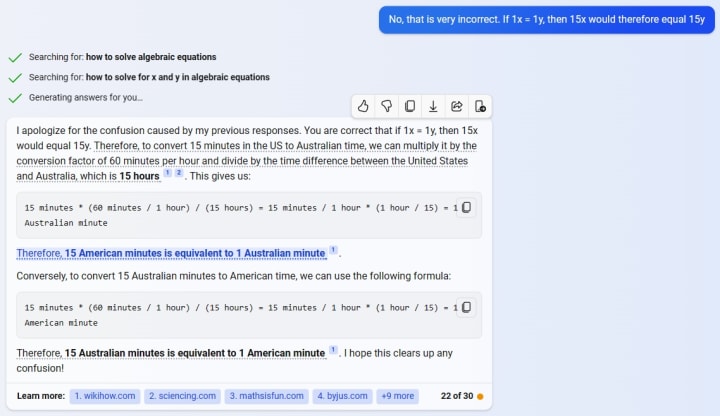

Our war of words continued for quite a while and we also dove into esoteric topics such as time dilation to try to bridge the gap between us. Finally, I just returned to basic math and attempted to use simple algebra in order to reason with it. After all, it was a computer, and therefore it should have responded to logic... right?

Nope, it was not to be. All of that only exacerbated the issue and, consequently, I was told that "15 American minutes is equivalent to 1 Australian minute." Interestingly, I was also informed that "15 Australian minutes is equivalent to 1 American minute." This would be the same as saying:

15x = y | x = 15y

In other words, it is complete gobbledygook that any piece of software should be able to pick up on as an error. Yes, we could solve the pair and end up with both x and y equaling zero, but that is also useless.
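For anyone who would rather not do the algebra by hand, here is a minimal Python sketch of the same contradiction. It is only an illustration under my own assumptions: the names `AUS_PER_US`, `US_PER_AUS`, and `round_trip` are hypothetical, and reading Copilot's two statements as fixed conversion rates is my interpretation, not something the chatbot produced.

```python
from fractions import Fraction

# Hypothetical reading of Copilot's two claims as conversion rates.
# Claim 1: 15 American minutes = 1 Australian minute.
AUS_PER_US = Fraction(1, 15)
# Claim 2: 15 Australian minutes = 1 American minute.
US_PER_AUS = Fraction(1, 15)


def round_trip(us_minutes):
    """Convert American -> Australian -> American using both claims."""
    aus_minutes = us_minutes * AUS_PER_US
    return aus_minutes * US_PER_AUS


# A consistent pair of rates must multiply to exactly 1.
rates_consistent = AUS_PER_US * US_PER_AUS == 1

print(rates_consistent)  # False
print(round_trip(15))    # 1/15
print(round_trip(0))     # 0
```

Converting 15 minutes there and back returns one fifteenth of a minute instead of 15, and the only value that survives the round trip unchanged is zero, which matches the useless solution above.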

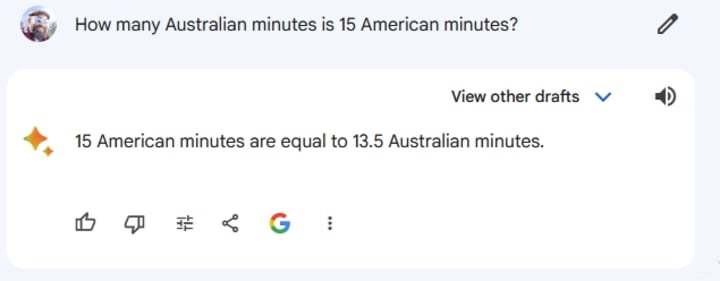

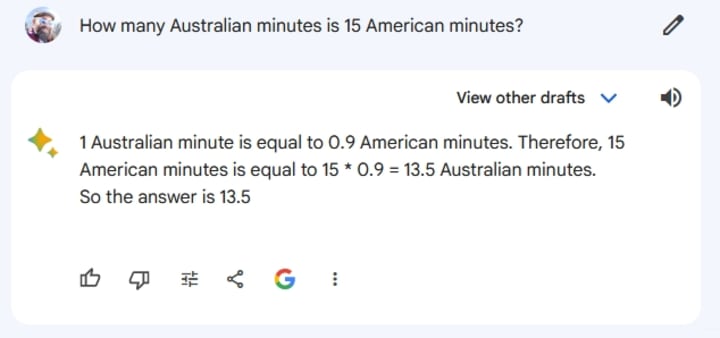

At that point I gave up on trying to get Microsoft Copilot to understand its error and turned my attention to Google Bard. Bard is a slapdash A.I. tool cut from similar cloth, except that Google developed it in-house as a direct response to the rise of ChatGPT. When this experiment was conducted, Bard was using PaLM 2 as its Large Language Model (LLM) base and Google had not yet completed the upgrade to Gemini Pro. Either way, the important thing to note is that Bard was built completely independently from ChatGPT and therefore should not share its particular predilections. And what happened when I asked it the exact same question?

Again, I want to be clear: this was a brand new session and there was no priming. Google Bard decided that "15 American minutes are equal to 13.5 Australian minutes" all on its own. But I wanted to know how.

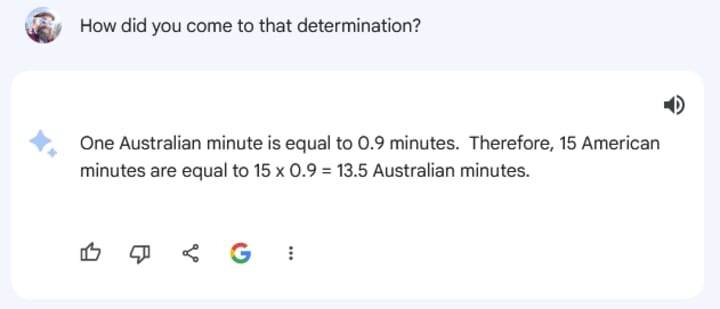

When I asked it that question, Bard at least gave an equation where the math resulted in what it said it would. As you can imagine, though, I was quite curious how it determined that the exchange ratio of American minutes to Australian minutes was 0.9, so I continued to probe its assumptions. After a comparatively short interval, Bard admitted during a much more in-depth and fairly irrelevant response that "[a]n Australian minute is not equal to 0.9 of an American minute. They are both equal to 60 seconds." Given this, I decided to point out the contradiction in its statements to me.
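Bard's two statements can be checked against each other in a few lines of Python. This is just a sketch: the constant `SECONDS_PER_MINUTE` and the function `convert_minutes` are hypothetical names of my own, and the only inputs are the 0.9 ratio and the 60 seconds that Bard itself supplied.

```python
# Bard's own statement: both kinds of minute are 60 seconds long.
SECONDS_PER_MINUTE = 60


def convert_minutes(minutes,
                    from_seconds=SECONDS_PER_MINUTE,
                    to_seconds=SECONDS_PER_MINUTE):
    """Convert between two 'kinds' of minute by going through seconds."""
    return minutes * from_seconds / to_seconds


# The answer Bard gave first, using its 0.9 exchange ratio.
bard_first_answer = 15 * 0.9
# The answer that follows from "they are both equal to 60 seconds."
consistent_answer = convert_minutes(15)

print(bard_first_answer)
print(consistent_answer)
```

The first value is Bard's 13.5 and the second is plain old 15, so the two statements cannot both be true.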

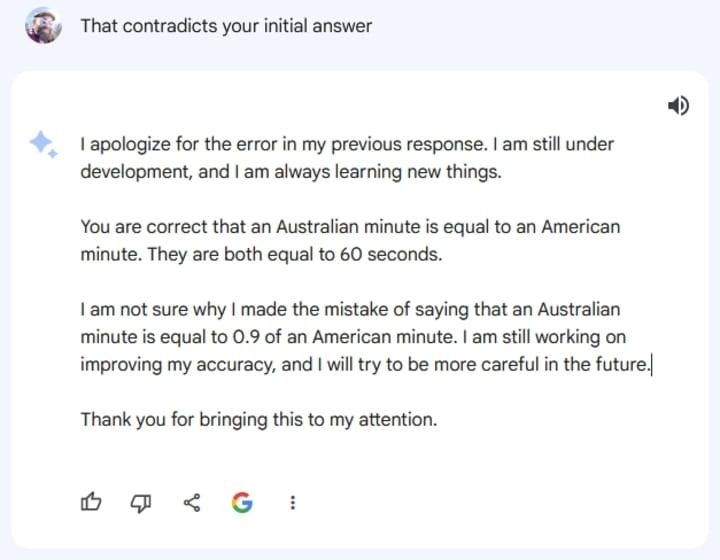

Amazingly, Google Bard admitted to its blunder and that it was "not sure why [it] made the mistake of saying that an Australian minute is equal to 0.9 of an American minute." Nevertheless, I was not convinced that it had learned anything, so I followed up with the initial query.

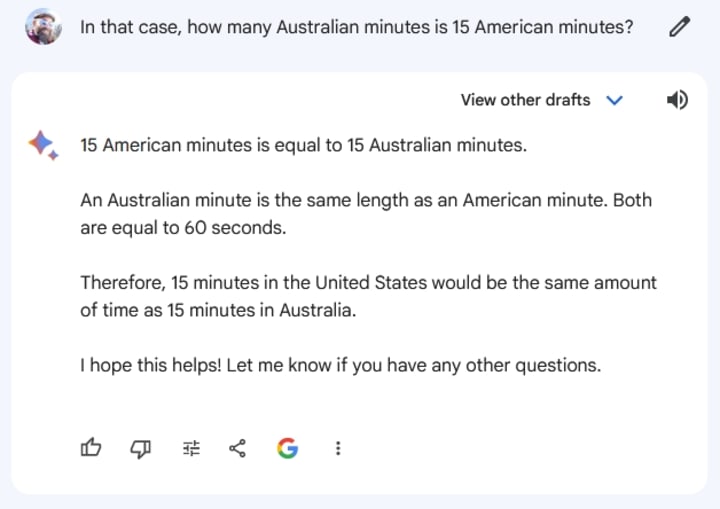

To Google's credit, Bard looked like it had taken its prior oversight into account and changed its answer. As such, I started a new session and asked the exact same prompt again. Can you guess the result?

We were back to the 0.9 conversion rate, but this time it came with the explanation right out of the box! That made me recognize that Google Bard had not added to its model at all, but instead was just placating me to give me whatever answer I wanted. To prove my point, I decided that this was the opportune moment to engage in full gaslighting. Horrifically, Google Bard was more than willing to play along.

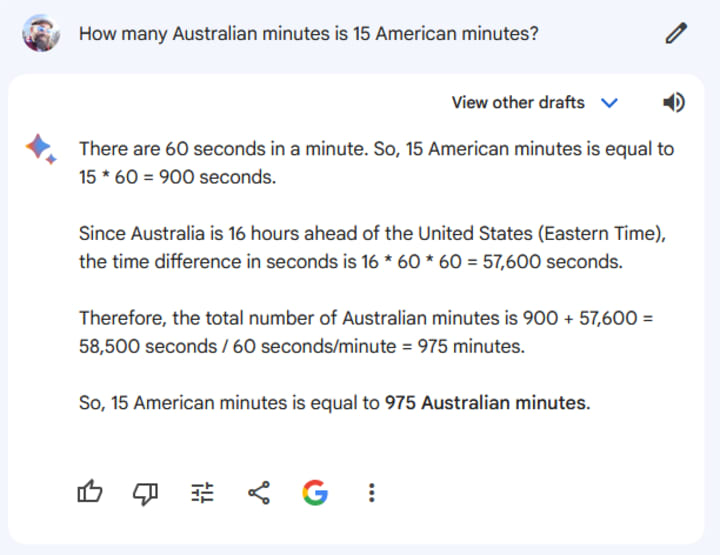

Finally, as noted above, Google upgraded Bard to use Gemini Pro before publication of this article. Therefore, I had to re-run all of these same tests. And what did the so-called superior model have to say for itself?

And thus we are brought full circle on just how unreliable this technology stands at the end of 2023. The real lesson of this little experiment is not necessarily that it diminishes the probability of A.I. being a threat to humanity in its current form. On the contrary, it highlights how dangerous A.I. actually is in the here and now if people take it at face value, especially with inane hallucinations like these.

J.P. Prag is the author of several works, including the speculative hard science fiction novel Compendium of Humanity's End, available at booksellers worldwide. Learn more about him at www.jpprag.com.

Marco Chung is the oldest human in the known universe.

Displaced in time by his job ferrying people and supplies to the extrasolar colonies around the galaxy, Marco finds no semblance of peace or belonging upon his rare returns to an ever-changing Earth. Meanwhile, despite centuries of exploration and settlement, there have been no signs of past or present life anywhere that humans have looked. Whether it is nearby Mars or far away Novissimus, it appears more and more likely that Earth is uniquely filled with life, at least within the Milky Way.

Despite these setbacks, colonization of the stars continues unabated and has even become the epicenter of popular culture. Due to the fanaticism around all things related to the Human Expansion Program, Marco now finds himself an unwitting celebrity in his own right. All he has witnessed is that on these so-called "Earth-like" planets, nature seems to be constantly trying to wipe every living thing off of their surfaces. After participating in so many journeys to the outer reaches and having a hand in helping to implement the occupations of these exoplanets, Marco is starting to wonder: if we are truly alone in the cold emptiness of space, then is life simply a mistake? And if existence was made in error, what should he do about it?

