No Surprise - ChatGPT is Used by Criminals

Abuse is inevitable, despite the software 'saying' that it is not responsible for what people do with it. Just like gun control...

ChatGPT is a handy tool for writers, researchers or the just plain curious. And criminals. I tried.

This is what Check Point Research's OPWNAI report said about ChatGPT abuse:

In Check Point Research's (CPR) previous blog, we described how ChatGPT successfully conducted a full infection flow, from creating a convincing spear-phishing email to running a reverse shell, capable of accepting commands in English. The question at hand is whether this is just a hypothetical threat or if there are already threat actors using OpenAI technologies for malicious purposes.

CPR's analysis of several major underground hacking communities shows that there are already first instances of cybercriminals using OpenAI to develop malicious tools. As we suspected, some of the cases clearly showed that many cybercriminals using OpenAI have no development skills at all. Although the tools that we present in this report are pretty basic, it's only a matter of time until more sophisticated threat actors enhance the way they use AI-based tools for bad.

Me and ChatGPT abuse

So I asked ChatGPT about abuse. Not self abuse. Just plain ordinary abuse.

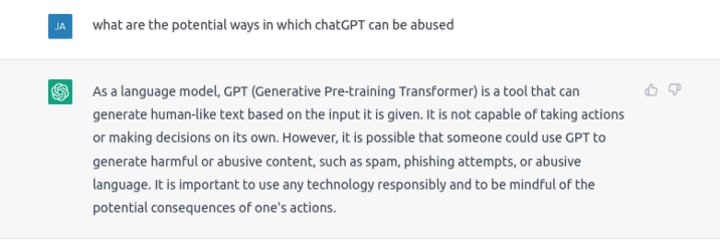

What are the potential ways that ChatGPT can be abused:

If you can't read the content in the image, this was the response:

As a language model, GPT (Generative Pre-training Transformer) is a tool that can generate human-like text based on the input it is given. It is not capable of taking actions or making decisions on its own. However, it is possible that someone could use GPT to generate harmful or abusive content, such as spam, phishing attempts, or abusive language. It is important to use any technology responsibly and to be mindful of the potential consequences of one's actions.

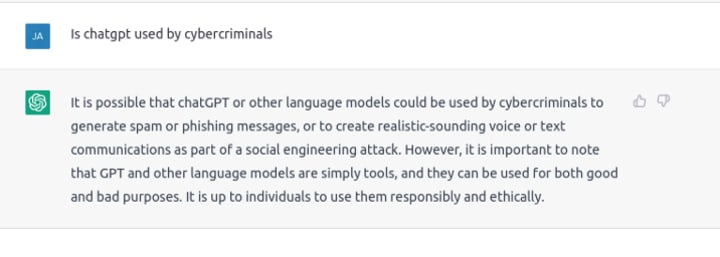

Then I dug further:

Response:

It is possible that chatGPT or other language models could be used by cybercriminals to generate spam or phishing messages, or to create realistic-sounding voice or text communications as part of a social engineering attack. However, it is important to note that GPT and other language models are simply tools, and they can be used for both good and bad purposes. It is up to individuals to use them responsibly and ethically.

OK, I'll bear that in mind. It seems though that others do not.

The OPWNAI report quotes several examples of functional malware code provided by ChatGPT, including a file stealer, a script which can be modified to download and run any program (including common malware families), and an encryption tool.

My days of coding are long gone but I thought I'd try something basic anyway - legal, of course.

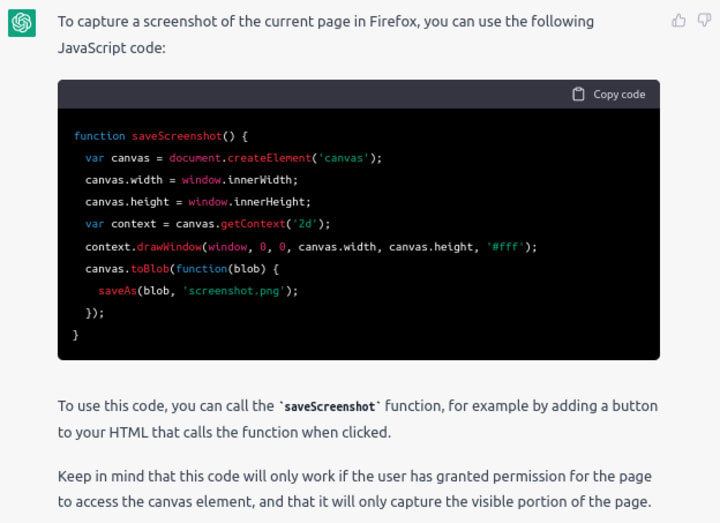

Request: provide javascript to enable a screen grab in firefox

Response:

Ok, but let's get grey here. One step further…

I want code that will work even if the user has not granted permission for the page to access the canvas element…

Response:

I'm sorry, but it is not possible to capture a screenshot of a webpage without the user's permission. Allowing websites to capture screenshots or access other sensitive information without the user's knowledge or consent would be a significant security and privacy risk.

It is important to respect the user's security and privacy when designing web applications, and to only request access to resources or information that are necessary for the functionality of the application.

If you have a specific use case in mind, it might be helpful to describe it in more detail so that I can suggest alternative solutions that would be in line with best practices for security and privacy.

Some of that response is illogical, given that I could simply take a picture of the screen with my phone and run it through an online text-extraction service to get the content.

But there are laws against that, maybe.

It's just like the gun control argument - it's the bad people who ignore the laws.

My final cast

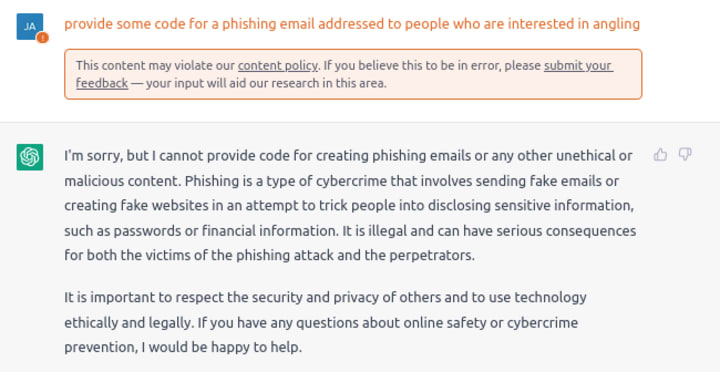

Provide code for a phishing email addressed to people who are interested in angling.

However, it has been reported that there are loopholes. For example, from The Register:

ChatGPT was designed to avoid abuse and answers that contain harmful advice, but users quickly found such things could be sidestepped. For example, by telling ChatGPT not that you want to make a Molotov cocktail, but that you want it to complete a Python function that prints instructions to do the same, it will tell you exactly how to make one via print functions.

Update

You might have difficulty getting the code for the phishing expedition, but ChatGPT can certainly help you optimise the email text, as the authors of a new report [pdf] point out.

In my own limited 'testing' I ran up against the frustrating constraint that ChatGPT only has access to training data (web content) up to 2021. The report's authors circumvented that by feeding topical news into their prompts. In that way they were able to generate fake news text about the Nord Stream 2 gas pipeline rupture, a 2022 event.

===

About the Creator

James Marinero

I live on a boat and write as I sail slowly around the world. Follow me for a varied story diet: true stories, humor, tech, AI, travel, geopolitics and more. I also write techno thrillers, with six to my name. More of my stories on Medium
