How the Vocal Moderation Team Is Keeping You Safe

The internet is a dangerous, unpredictable place. We do our best to ensure that Vocal is not.

The world is changing, and changing quickly. At Vocal, we’ve always understood that it’s our responsibility to keep up with that change. Having launched Vocal immediately after the 2016 US election, we knew that, culturally, platforms that weren’t taking a proactive approach to malicious content were already falling behind.

While other platforms now grapple with misinformation, malicious content, and figuring out how to deal with bad actors, we're proud that moderation and safety have been core components of Vocal since day one.

The Creator Experience team at Vocal is acutely aware of the struggle to maintain user safety. Particularly now, with the 2020 US election nearing, and with platforms like YouTube and Twitter facing tough decisions about which types of content to restrict after having allowed them for years, we feel it’s time to give you an update on what we’ve done from the very beginning to keep your content safe, and on how we’ve updated our internal approach to adapt to the ever-changing digital world.

Some highlights:

- Every single story on Vocal is moderated before it's published.

- Moderators take into account the intention behind stories, along with the content of the story itself.

- Moderators will not publish stories that advocate for hate or violence, or content that expresses either outright.

- Moderators won't publish stories that lend credence to harmful conspiracy theories.

- Moderators aren't professional fact checkers, so we always encourage you to do your own research.

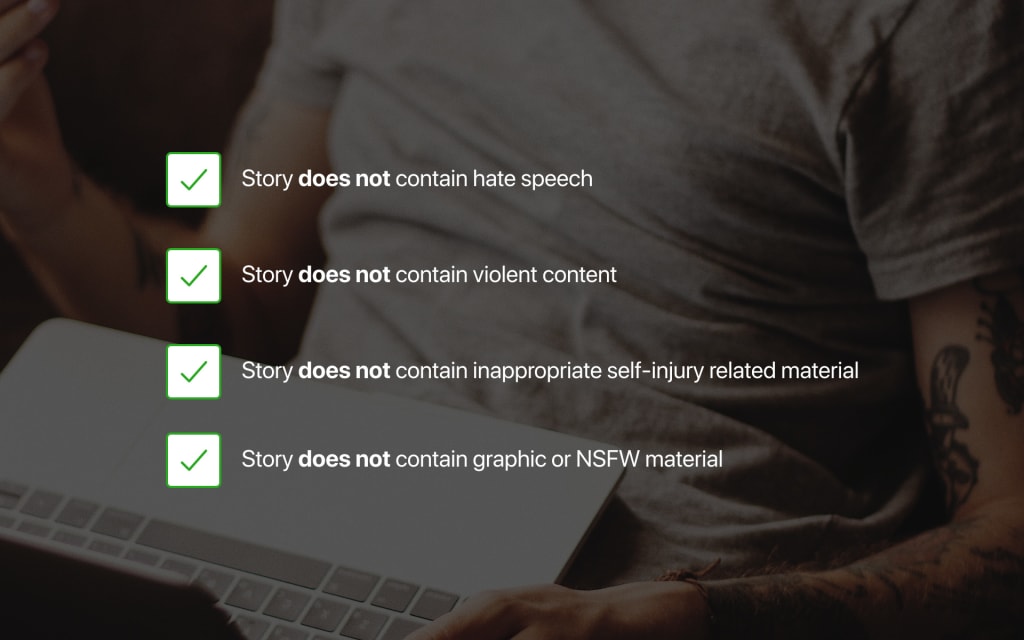

How we moderate

If you've ever submitted a story to Vocal, you already know that, unlike most other platforms, Vocal moderates content before it gets published, not after it's gone live. When a creator submits a story to Vocal for review, our moderators check that it meets all of our guidelines, then either publish it or offer guidance to help you get it published if it needs some tweaking. This offers a strong first level of protection: every story on Vocal has already had a real person behind the scenes checking that it's a piece of content that deserves to live alongside your own.

This means that moderators are an absolutely integral part of the Vocal experience: they're dedicated to making sure that your stories have a community that's safe, and that hatred finds no home on the platform. It also means that each moderator has thousands of stories to review every single week, and often faces tough decisions about what's allowed and what's not.

The aim of all of our recent updates, both those addressed here and some we've distributed to moderators internally, is to encourage moderators to focus on the intention of content instead of semantics. In practice, this means we've given more specific direction to help moderators identify content that's harmful, even if it's worded carefully or presented in a way that finds a loophole in existing guidelines. In the past, moderators have struggled with content that technically didn't violate Community Guidelines because of semantics, but ultimately served a purpose that shouldn't have a place on Vocal. Now, moderators have more agency to identify content that's truly malicious, even when that harm isn't made quite so obvious.

How we address hate and violence

Vocal has always enforced protocols around violence. Specifically, content that's blatantly violent or hateful in nature, such as images of gore, has always been in violation of our guidelines, and stories that threaten violence in particular have always been unacceptable.

Recently, we've come to believe that while these guidelines are obviously essential, they're not exhaustive. Violence and hate can take many forms aside from direct slurs and threats, and we want to ensure that all of our moderators are equipped to address viewpoints that may be equally harmful, but less obvious or direct.

So, we've recently made changes to our internal protocols for moderators that are more explicit with regard to violent content. Specifically, we've given clearer direction to help moderators more easily identify stories that advocate for violence or hate in any way, including by suggesting or showing support for hatred or harm to others, in addition to threatening harm directly. With these updates, we intend to broaden the umbrella of content that can be considered violent, so that potentially harmful stories won't make it onto the platform, regardless of how carefully that violence is worded.

How we address conspiracies

In the past, Vocal has not had specific protocols for moderators to identify and address conspiracy theories; any similar content would be reviewed using protocols to evaluate violence or misinformation, and for a while, moderators found this to be sufficient.

However, with the growing discussion around how social platforms are handling QAnon conspiracies and the like, and with the rise in content submitted here on Vocal that shows support for these conspiracies, we knew it was important to make these expectations clearer.

Specifically, moderators have been educated in popular internet conspiracy theories, and have learned to evaluate these on the basis of how much potential harm they may cause. Vocal will not publish any content that a moderator determines gives credence to harmful conspiracy theories, even if the content does not outright claim that such theories are true.

Of course, stories that explore these conspiracy theories objectively or present them in a journalistic sense may be accepted on Vocal, but moderators will not accept content that lends believability to, or is intended to add validity to, what's widely accepted as a conspiracy theory.

These decisions may vary based on the potential harm of the conspiracy itself and whether it can be used to justify violence or push hateful views, but this is ultimately at the discretion of the moderator reviewing the content. In the interest of keeping Vocal’s readers and creators safe, if your story can be interpreted as implying the validity of a potentially harmful conspiracy, it will not be published on the platform.

How we address misinformation

Moderators do their best to police misinformation as they review stories for publication—and they pay particularly close attention to stories that are political in nature, or that may intentionally or inadvertently influence elections or readers' political views. In the "fake news" era, checking for blatant misinformation is extremely important.

However, it's also important to keep in mind that moderators are not professional fact-checkers, and published stories are not professionally fact-checked prior to publication.

You might notice that stories regarding certain subject matter, or published on certain communities, have disclaimer boxes to remind you that they haven't been professionally fact-checked, and that the information isn't necessarily endorsed by Vocal. These disclaimer boxes are meant to remind you to think critically about everything you read; if a story on Vocal contains a suggestion for potential treatments for COVID-19, for example, we feel it's our responsibility to remind you not to take that content at face value.

These disclaimer boxes are automatically appended to content on certain subjects where we feel they're particularly relevant, or where a particularly large amount of disinformation has been shared across the internet. Political content and any coronavirus-related content, for example, will display these reminders. They're not meant to imply anything about the trustworthiness of the creator or their story, but rather to serve as a reminder to readers to always think critically.

This does mean that, if you come across something on Vocal that you know to be false, you should reach out to us to report it. And this goes for all of our guidelines—we do our best to keep our community safe, but the community also plays an important role in that. If you come across any content published on Vocal that you think violates our Community Guidelines or may potentially cause harm, we want to hear about it. We always take content reports seriously, and investigate each one individually to be sure that any stories that may have slipped through the cracks are dealt with accordingly.

You can find our complete Trust and Safety policy here for more on community safety. If you have any questions or wish to report malicious content, we encourage you to get in touch with our customer support team.

A community is only as strong, kind, and creative as the people it's made up of. Continue sharing your stories, and we'll continue to work our hardest to provide you with a platform that values your voice and your safety.

About the Creator

Vocal Team

By creators, for creators.

Comments (2)

Can you please review my story? The contest is closing in less than 6 hrs and if my story isn't approved it won't be eligible for the contest I wrote it for. Please review it and let me know what I need to do.

If you would, please define "misinformation." Also, how do you know what is misinformation, and what isn't? Do you have a room full of superhuman, omniscient gurus that see the truth of all things? If the past two years have taught us anything, it's that yesterday's "misinformation" or "conspiracy theory" is today's truth. How do your moderators tell the difference? What are their motives? Not the official "keep everyone safe" motives you've stated, but their personal and/or political inclinations that influence the decision-making process? Are those inclinations going to be stated, for the record? (Ironically, right at the bottom of my screen this moment, you are promoting a story that apparently supports the infamous conspiracy theory that black men are being systematically targeted and murdered by police officers in the U.S. by the thousands. Additionally, you are actively fundraising for BLM, an organization currently under investigation for wholesale fraud to the tune of 60 million dollars. I don't see any "call outs" to "think critically" anywhere in that article.) Bonus points: Define "hate speech." Who defines it, and how? I ask, because bigoted commentary seems permissible on your site, provided it's directed toward straight, white, conservative males. That's alright, we can take it–we're well practiced. But fair's fair.