Algorithms Cannot Learn.

Note the Period at the End of the Title - It Is Intentional - Exactly as for a Machine, the Ability to Learn Is a Logical Impossibility for an Algorithm

Can we please stop with this “algorithms learn” garbage? It was bad enough when only machines could learn (they cannot), but to suggest that an algorithm can learn is an even more egregious crime against logic and language. Math and statistics, formerly considered only subjects to study and useful tools for a variety of applications (including the creation of algorithms), are now fully autonomous learning subjects themselves! Wow! Does Grammar learn too? What about Social Studies? Is it also a learning subject? I sure hope French doesn’t learn how to learn. I mean, sacré bleu, right?

Oh wait, but it is the advanced nature of the mathematical and statistical treatments we apply to the input data, and the way we combine them, that allows the algorithm to transcend its previous status as a lowly ‘normal’ algorithm and become an exalted “learning” algorithm. That is some seriously magical math and statistics. Where can I get me some of that magic math? Math and stats with actual life-giving properties. We have a new contender for man’s universal yearning for a creator story. It was not God or random chance; it was, in fact, magic math that birthed us all. Magic math and the magical box, the computer/machine. It is all a very neat little creation story, perfectly fit for a simulationist.

Stop and think for just a moment

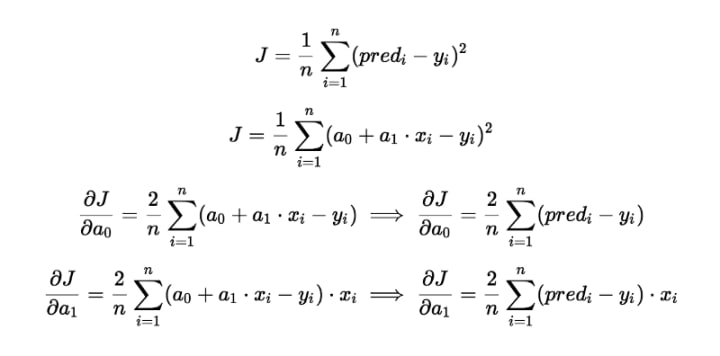

Seriously, seriously, think about what you are saying when you say an algorithm can learn. Really, sit down, breathe deeply, think, and ask yourself this question: can an algorithm learn? OK, before you answer, stop, breathe, think. OK. Now answer. Didn’t work? Try this question: can 2+2 learn? Tough one, right? 2+2 is an algorithm, right? It should be able to learn, right? Sorry, it is of course much too simple an algorithm to be able to learn. Then at what point exactly does the equation or calculation become complex enough that it can learn things, or bestow the ability to learn upon the machine in which it is programmed? How about the algorithm below? It looks quite complex (it really isn’t, but let’s pretend that it is). Can it learn? Can it grant a machine/computer the ability to learn?

Two Logical Fallacies

The answer is really very simple: never. It cannot, and it will never be able to, because the logic of our world, the logic of our language, does not allow it. You can say it and type it and write it over and over again all day until the end of time, but it will never be the case that an algorithm can learn. Never. The same is true of a machine, for the simple reason that at the exact moment a machine became capable of learning it would no longer be a machine.

Exactly like machines and neural networks and any other man-made thing, object, or device to date, algorithms cannot learn, nor can they forget.

To suggest that they can is to commit two logical fallacies: (a form of) the mereological fallacy and the compulogical fallacy. Suggesting that a neural network (or an algorithm) can learn is exactly as wrong as suggesting the same thing of a brain, which is also not capable of learning. Only of a whole human person (and some nonhuman animals) with a (mostly) fully functioning nervous system, including a brain, can we say that they are capable of learning. To say otherwise is to commit the mereological fallacy: assigning traits, characteristics, or attributes to a part of a thing that can (logically) only be applied to the whole thing.

The second logical fallacy is related to the first but is more specific. It is the logical crime committed when attributes or capabilities that can (logically) only be assigned to human persons and some nonhuman animals are instead assigned to machines/computers. Examples include feeling, remembering, forgetting, and learning. Simply put, if a machine (or, in this case, a part of a machine, an artificial neural network, or ANN) were capable of any of these things, it would no longer be a machine.

About the Creator

Everyday Junglist

Practicing mage of the natural sciences (Ph.D. micro/mol bio), Thought middle manager, Everyday Junglist, Boulderer, Cat lover, No tie shoelace user, Humorist, Argan oil aficionado. Occasional LinkedIn & Facebook user
