Are we (humans) ready for Human AI Symbiosis?

Exploring the Evolution and Architecture of AI Agents: Insights into RAG Systems, AutoGPT, BabyAGI, and LATS

ChatGPT has been widely used for early generative AI applications, particularly chat systems that work with private data. These applications have commonly employed the Retrieval Augmented Generation (RAG) pattern. As RAG systems mature, many academic groups and companies are dedicating resources to building autonomous AI agents. These agents are influenced by open-source projects such as AutoGPT (github/Significant-Gravitas/AutoGPT) and BabyAGI (github/yoheinakajima/babyagi). An article by Yohei Nakajima, the creator of BabyAGI, describes a task-driven AI agent that completes tasks, generates new tasks based on the outcomes of completed tasks, and reprioritizes tasks in real time [1].
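The task-driven loop described above (execute a task, create follow-up tasks from its result, reprioritize the queue) can be sketched in a few lines. This is a minimal illustration, not BabyAGI's actual code: `execute_task`, `create_tasks`, and `prioritize` are hypothetical stand-ins for what would be LLM calls, and the depth limit is an assumption added so the toy loop terminates.

```python
from collections import deque


def execute_task(objective: str, task: str) -> str:
    # Hypothetical stand-in for an LLM call that carries out the task.
    return f"result of '{task}' for objective '{objective}'"


def create_tasks(task: str, result: str, depth: int) -> list[str]:
    # Hypothetical stand-in for an LLM call that proposes follow-up tasks.
    # Assumption: stop spawning tasks at depth 2 so the example terminates.
    return [f"follow-up to {task}"] if depth < 2 else []


def prioritize(tasks: list[tuple[str, int]]) -> deque:
    # Hypothetical stand-in for LLM-based reprioritization:
    # here, shallower tasks simply run first.
    return deque(sorted(tasks, key=lambda item: item[1]))


def run(objective: str, first_task: str, max_iterations: int = 10) -> list[str]:
    queue = deque([(first_task, 0)])
    completed = []
    for _ in range(max_iterations):
        if not queue:
            break
        task, depth = queue.popleft()
        result = execute_task(objective, task)
        completed.append(task)
        new_tasks = [(t, depth + 1) for t in create_tasks(task, result, depth)]
        queue = prioritize(list(queue) + new_tasks)
    return completed
```

The `max_iterations` cap is a deliberate design choice in this sketch: a loop that keeps generating its own work needs an explicit budget.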

What are AI agents?

An AI agent is a software program that interacts with its environment, gathers data, and uses that data to pursue specific goals autonomously. It is designed to perceive its surroundings, analyze information, and take actions toward an objective or set of objectives without direct human intervention [1]. AI agents can be classified into several types, such as simple reflex agents, goal-based agents, utility-based agents, and learning agents. Each type uses a different method to address problems and reach its objectives.
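To make the difference between two of these agent types concrete, here is a small sketch using a toy thermostat environment. The environment, the action names, and the thresholds are all assumptions chosen for illustration: the simple reflex agent maps the current percept directly to an action, while the goal-based agent picks the action whose predicted outcome is closest to its goal.

```python
# Effect of each action on the temperature in this toy environment.
ACTIONS = {"heat": 1, "cool": -1, "idle": 0}


def simple_reflex_agent(percept: int) -> str:
    # Condition-action rule: reacts only to the current percept.
    return "heat" if percept < 20 else "idle"


def goal_based_agent(percept: int, goal: int) -> str:
    # Predicts the outcome of each action and picks the one closest to the goal.
    return min(ACTIONS, key=lambda a: abs(percept + ACTIONS[a] - goal))


def run_goal_based(temperature: int, goal: int, steps: int) -> int:
    # Perceive, decide, act, repeated for a fixed number of steps.
    for _ in range(steps):
        action = goal_based_agent(temperature, goal)
        temperature += ACTIONS[action]
    return temperature
```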

How are AI agents different from Co-pilots?

The fundamental distinction between an AI agent and a co-pilot lies in their degree of autonomy and human participation. An AI agent is a mathematical and architectural model that independently interacts with an environment to fulfill a knowledge task. An AI co-pilot is a conversational interface that uses large language models to assist users in a range of tasks and decision-making processes. It operates alongside humans and offers guidance. An AI agent takes complete control of a job or process, whereas an AI co-pilot augments human capabilities rather than replacing them.

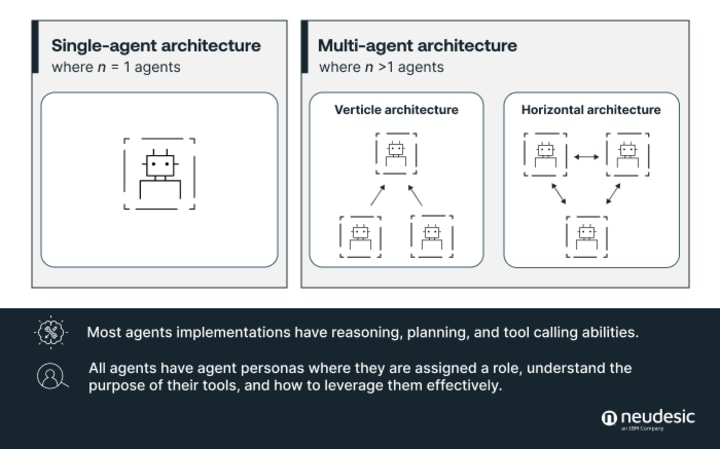

AI agents architecture [3]

Single-agent architectures provide a straightforward and efficient approach to well-specified problems. Compared to multi-agent architectures, they are simpler to develop, implement, and maintain. In addition, single-agent systems can often make decisions faster because they do not need to coordinate with other agents. Nevertheless, they may struggle in situations that demand collaboration or where different perspectives are needed to resolve a problem. Single-agent systems have limited scalability and may have difficulty managing complex activities that require varied expertise [4].

Conversely, multi-agent architectures perform well in scenarios that require cooperation and synchronization across several entities. They can draw on the varied knowledge of multiple agents to handle complex challenges more efficiently. Multi-agent systems provide robustness and fault tolerance, as the failure of one agent does not necessarily result in system failure. Nevertheless, creating and overseeing multi-agent architectures can be complex and can require significant computational resources. Coordinating the behavior of many agents requires advanced communication and negotiation methods, which can add processing time and delays. In addition, maintaining the stability and fairness of interactions between agents can be difficult, particularly in dynamic contexts.

Single-agent architecture

The achievement of goals by agents depends on effective planning and the ability to make necessary adjustments. Without self-evaluation and the ability to devise efficient strategies, a single agent may become trapped in a perpetual cycle of execution, either failing to complete its task or producing results that do not meet user expectations. Single-agent architectures are particularly advantageous for tasks that require direct function calling and do not need feedback from another agent. Several noteworthy single-agent approaches are ReAct, RAISE, Reflexion, AutoGPT + P, and LATS.

LATS, one of these single-agent architectures, is described in more detail below.
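The risk of a perpetual execution cycle is usually handled with an explicit step budget and a check on whether the goal has been met. The following is a minimal sketch of a single agent that calls functions directly; the `policy` function is a hypothetical stand-in for the LLM's reasoning step, and the `add` tool and numeric goal are assumptions chosen to keep the example runnable.

```python
from typing import Callable


def add(a: float, b: float) -> float:
    return a + b


# Registry of tools the agent may call directly.
TOOLS: dict[str, Callable[..., float]] = {"add": add}


def policy(goal: float, observation: float) -> tuple[str, tuple]:
    # Hypothetical stand-in for the LLM: self-evaluate, then pick a tool or finish.
    if observation >= goal:
        return "finish", ()
    return "add", (observation, 1.0)


def run_agent(goal: float, max_steps: int = 8) -> tuple[float, bool]:
    observation = 0.0
    for _ in range(max_steps):
        action, args = policy(goal, observation)
        if action == "finish":
            return observation, True
        observation = TOOLS[action](*args)
    # Step budget exhausted: report failure instead of looping forever.
    return observation, False
```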

LATS

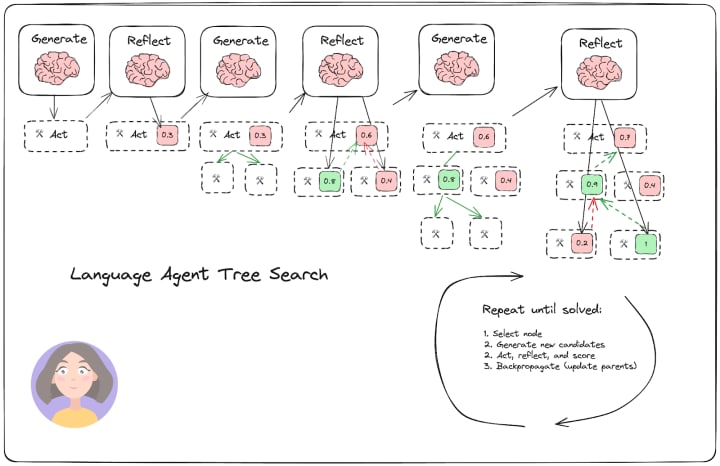

Language Agent Tree Search (LATS), developed by Zhou et al., is a general LLM agent search algorithm for a single agent. It combines reflection/evaluation with search, specifically Monte Carlo tree search, to improve overall task performance compared to similar techniques such as ReAct, Reflexion, or Tree of Thoughts [5].

Language Agent Tree Search (LATS) is a single-agent method that combines planning, acting, and reasoning by using trees [6]. This approach, derived from Monte Carlo Tree Search, represents a state as a node and treats taking an action as moving between nodes. The system uses LM-based heuristics to explore candidate actions and then chooses an action based on a state evaluator. LATS outperforms previous tree-based approaches by adding a self-reflection reasoning stage, which significantly improves performance. When an action is performed, both environmental feedback and feedback from a language model are used to detect logical mistakes and propose alternatives. LATS performs very well on diverse tasks because of its capacity for self-reflection and its robust search method. Nevertheless, because of the complexity of the algorithm and the added reflection steps, LATS often requires more computational resources and more time to finish than other single-agent approaches.
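The "state as a node, action as an edge" idea can be sketched as a small data structure. This is an illustrative assumption about how such a tree might be stored, not the representation used by the LATS authors; the field names (`reflection`, `score`) are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(eq=False)
class Node:
    state: str                     # e.g. the text of the trajectory so far
    action: str | None = None      # action that led from the parent to this node
    parent: Node | None = None
    reflection: str = ""           # self-critique produced after acting
    score: float = 0.0             # evaluator's score for this state
    children: list[Node] = field(default_factory=list)

    def add_child(self, action: str, state: str,
                  reflection: str = "", score: float = 0.0) -> Node:
        child = Node(state, action, self, reflection, score)
        self.children.append(child)
        return child

    def trajectory(self) -> list[str]:
        # Walk back to the root to recover the sequence of actions taken.
        actions, node = [], self
        while node.parent is not None:
            actions.append(node.action)
            node = node.parent
        return actions[::-1]
```

Storing the reflection on the node keeps the critique next to the state it refers to, so a later expansion from that node can take it into account.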

It has four main steps:

1. Select: pick the best next actions based on the aggregate rewards from step (2). Either respond (if a solution is found or the maximum search depth has been reached) or continue the search.

2. Expand and simulate: choose the 5 most promising candidate actions and carry them out in parallel.

3. Reflect + Evaluate: observe the results of these actions and score the decisions based on reflection (and potentially external feedback).

4. Backpropagate: update the scores of the root trajectories according to the results.

LATS uses a (greedy) Monte Carlo tree search. In each search step, the algorithm selects the node with the highest "upper confidence bound", a measure that balances exploitation (highest average reward) and exploration (fewest visits). Starting from that node, it generates N new candidate actions (5 in this case) and adds them to the tree. The search stops when it has produced a valid solution or reached the maximum number of rollouts (the depth of the search tree) [7].
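The select, expand, evaluate, and backpropagate cycle can be illustrated on a toy problem. The sketch below is not the LATS implementation: the LLM-generated actions and the reflection-based evaluator are replaced by deterministic stand-ins (candidate actions are "add 1 to N", and the reward is closeness to a target sum). The upper confidence bound uses the standard UCT form, mean reward + c * sqrt(ln(parent visits) / visits), which is an assumption because the text does not give the exact formula.

```python
import math


class Node:
    def __init__(self, total=0, parent=None):
        self.total = total        # toy state: running sum of chosen actions
        self.parent = parent
        self.children = []
        self.visits = 0
        self.value = 0.0          # running mean of rewards seen below this node

    def ucb(self, c=1.0):
        # Exploitation (mean reward) plus exploration (few visits).
        if self.visits == 0:
            return math.inf
        return self.value + c * math.sqrt(math.log(self.parent.visits) / self.visits)

    def backpropagate(self, reward):
        # Update visit counts and mean rewards from this node up to the root.
        node = self
        while node is not None:
            node.visits += 1
            node.value += (reward - node.value) / node.visits
            node = node.parent


def evaluate(total, target):
    # Stand-in for the reflection/evaluation step: reward in [0, 1].
    return max(0.0, 1.0 - abs(target - total) / target)


def select(root):
    # Greedily descend to the leaf with the highest UCB at each level.
    node = root
    while node.children:
        node = max(node.children, key=lambda n: n.ucb())
    return node


def search(target, n_candidates=5, max_rollouts=20):
    root = Node()
    for _ in range(max_rollouts):
        leaf = select(root)
        for step in range(1, n_candidates + 1):   # expand N candidate actions
            child = Node(leaf.total + step, parent=leaf)
            leaf.children.append(child)
            child.backpropagate(evaluate(child.total, target))
            if child.total == target:             # solution found: stop
                return child
    return None                                   # rollout budget exhausted
```

With a target of 7, the first rollout expands the root into sums 1 to 5, the second selects the best-scoring child (5) and finds 7 among its candidates.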

Multi-agent architecture

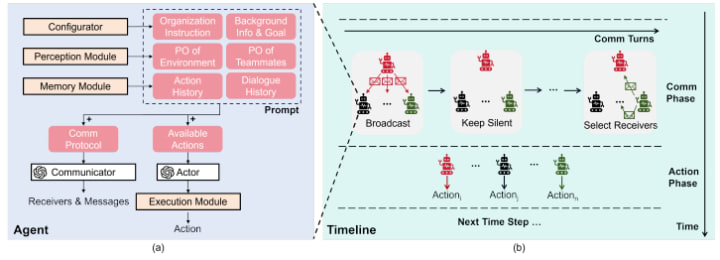

Multi-LLM-agent architecture. (a) The components of an LLM agent and the arrangement of prompts. (b) One time step consists of two phases: the Communication phase and the Action phase. During the communication phase, the agents alternate between broadcasting and identifying receivers to transmit unique messages. The agents also have the option to remain silent. Comm is an abbreviation for Communication, while PO is an abbreviation for Partial Observation [8] [9].

Embodied LLM agents learn to cooperate in organized teams.

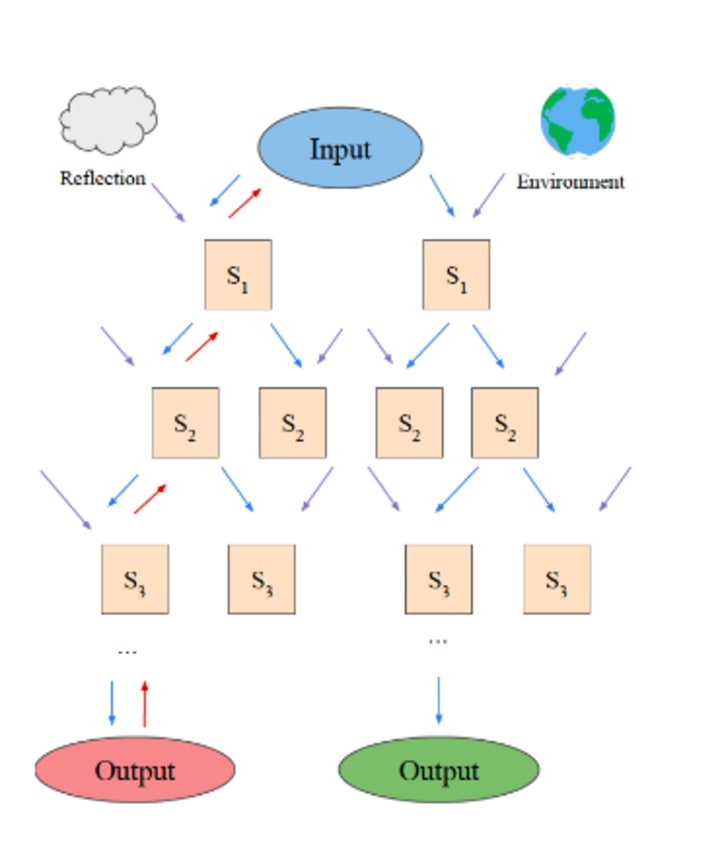

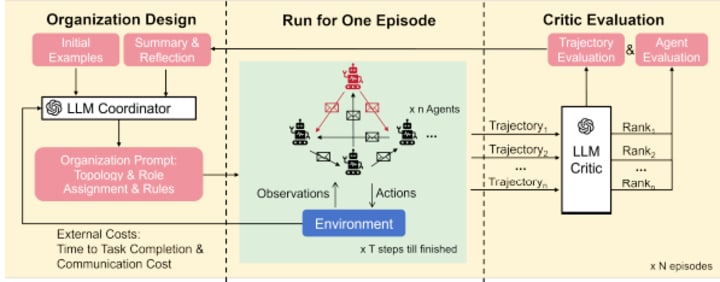

Diagram: Criticize-Reflect architecture for improving organizational structure. The red agent represents the leader of the organization. The Critic evaluates the trajectories and analyzes the agents' performance. Combining this analysis with cost signals from the environment, the Coordinator proposes a new organizational prompt to improve team efficiency.

The study by Guo et al. illustrates the influence of a lead agent on the overall efficiency of a team of agents [10]. This design incorporates both a vertical component, provided by the leader agent, and a horizontal component, which lets agents communicate with each other as well as with the leader. The findings indicate that teams with an organized leader complete their tasks approximately 10% faster than teams without a leader. In addition, in teams lacking a defined leader, agents spent most of their communication (~50%) issuing commands to each other and divided the rest between exchanging information and seeking direction. In contrast, in teams with an assigned leader, 60% of the leader's communication is dedicated to giving instructions, which encourages other team members to focus on exchanging and requesting information. Their findings indicate that teams of agents are most efficient when the leader is a human.
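A single time step with a communication phase followed by an action phase, combined with vertical (leader to worker) and horizontal (worker to worker) messages, might be sketched as follows. The `compose` and `act` methods are hypothetical stand-ins for LLM calls, and the message kinds `"instruct"` and `"inform"` are assumptions introduced for this example; the sketch does not reproduce the setup used in the study.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    name: str
    is_leader: bool = False
    inbox: list = field(default_factory=list)   # (sender, kind, text) tuples

    def compose(self, others: list[str]) -> list[tuple[str, str, str]]:
        # Stand-in for an LLM call. Returns (receiver, kind, text) messages;
        # an empty list means the agent stays silent.
        if not others:
            return []
        if self.is_leader:
            # Vertical channel: the leader instructs every teammate.
            return [(o, "instruct", f"do subtask for {o}") for o in others]
        # Horizontal channel: a worker shares information with one peer.
        return [(others[-1], "inform", f"status of {self.name}")]

    def act(self) -> str:
        # Follow the latest instruction received, otherwise explore.
        instructions = [text for _, kind, text in self.inbox if kind == "instruct"]
        return instructions[-1] if instructions else "explore"


def time_step(agents: list[Agent]) -> dict[str, str]:
    by_name = {a.name: a for a in agents}
    # Communication phase: agents take turns sending targeted messages.
    for sender in agents:
        others = [n for n in by_name if n != sender.name]
        for receiver, kind, text in sender.compose(others):
            by_name[receiver].inbox.append((sender.name, kind, text))
    # Action phase: each agent acts on what it has received.
    return {a.name: a.act() for a in agents}
```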

In addition to team structure, the study highlights the importance of a "criticize-reflect" phase for making plans, assessing performance, giving feedback, and reorganizing the team [10]. Their findings suggest that agents operating within a dynamic team structure, where leadership roles rotate, achieve the best outcomes: the shortest time to complete tasks and the lowest average communication cost. In essence, leadership and dynamic team structures enhance the team's collective capacity to reason, plan, and execute tasks efficiently.
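A criticize-reflect loop with rotating leadership can be outlined as: run an episode, let a critic score it, and let a coordinator propose a new organization for the next round. The sketch below is only loosely inspired by that description. The episode costs are made-up toy numbers, and `run_episode`, `critic`, and `coordinator` are hypothetical stand-ins for the environment and the LLM components.

```python
# Toy numbers (assumptions): steps the team needs under each leader.
TOY_STEPS = {"alice": 12, "bob": 9, "carol": 10}


def run_episode(leader: str) -> dict:
    # Stand-in for running the team in the environment with a given leader.
    steps = TOY_STEPS[leader]
    return {"leader": leader, "steps": steps, "messages": steps * 2}


def critic(trajectory: dict) -> float:
    # Lower is better: task time plus a weighted communication cost.
    return trajectory["steps"] + 0.1 * trajectory["messages"]


def coordinator(team: list[str], current_leader: str) -> str:
    # Proposes a new organization: rotate leadership to the next teammate.
    return team[(team.index(current_leader) + 1) % len(team)]


def criticize_reflect(team: list[str], rounds: int) -> tuple[str, float]:
    leader = team[0]
    best_leader, best_cost = leader, float("inf")
    for _ in range(rounds):
        cost = critic(run_episode(leader))        # criticize
        if cost < best_cost:
            best_leader, best_cost = leader, cost
        leader = coordinator(team, leader)        # reflect and reorganize
    return best_leader, best_cost
```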

About the Author

Radhika Kanubaddhi is a Data and AI Architect at Microsoft. She has over 10 years of experience in software engineering, databases, AI/ML, and analytics. Radhika helps customers adopt Microsoft Azure services to deliver business results. She crafts ML and database solutions that overcome complex technical challenges and drive strategic objectives.

Before Microsoft, she developed and deployed machine learning models to improve revenues and profits and to increase conversions for clients in industries such as airlines, banks, pharma, retail, and hospitality. She is an expert in assembling the right set of services to meet client needs.

Radhika has worked with almost all technical innovations and services in the last decade – including Internet of Things, cloud application development, ML models, and Azure.

Some of her accomplishments include:

- Led three ML recommendation engine POCs, converting 2 out of 3 clients and resulting in $1.17M in annual revenue.

- Implemented a real-time recommendation engine for an airline client, resulting in a $214M increase in 30-day revenue.

- Developed ‘Backup as a Service’ to clone and encrypt data from millions of Internet of Things (IoT) devices.

Radhika Kanubaddhi has a Master’s in computer science. She has authored impactful technical content across different media. Outside of work, she enjoys playing with her daughter, reading, and creating art.

References

1. Nakajima, Yohei. "Task-driven Autonomous Agent Utilizing GPT-4, Pinecone, and LangChain for Diverse Applications." 2024.

2. Licklider, J.C.R. "Man-Computer Symbiosis." 1960.

3. https://www.neudesic.com/blog/ai-agent-systems/

4. "The Landscape of Emerging AI Agent Architectures for Reasoning, Planning, and Tool Calling: A Survey." https://arxiv.org/pdf/2404.11584v1

5. Birr, Autogpt. "AutoGPT: Autonomous GPT-3.5 Agents in Action." 2024.

6. https://langchain-ai.github.io/langgraph/tutorials/lats/lats/

7. "Language Agent Tree Search Unifies Reasoning Acting and Planning in Language Models." https://arxiv.org/abs/2310.04406

8. https://github.com/geekan/MetaGPT

9. "MetaGPT: Meta Programming for A Multi-Agent Collaborative Framework." https://arxiv.org/pdf/2308.00352

10. Guo, Xudong, et al. "Embodied LLM Agents Learn to Cooperate in Organized Teams." 2024. arXiv: 2403.12482 [cs.AI]. https://arxiv.org/pdf/2403.12482