Do 17 poses identified in the adult film?

Big Brother Online Help: Not enough training sets

A big brother recently did the task of pose recognition in adult movies and posted that the training set was not enough. Immediately, we got a response from enthusiastic users: I'll sponsor 140TB of data!

Brothers are back to learn technology!

Today's topic is Human Action Recognition (HAR), which means recognizing human action poses in pictures and videos through models.

Recently, a Reddit user had the idea that if the model is used in the field of adult content, it can greatly increase the accuracy of identification and search of pornographic videos.

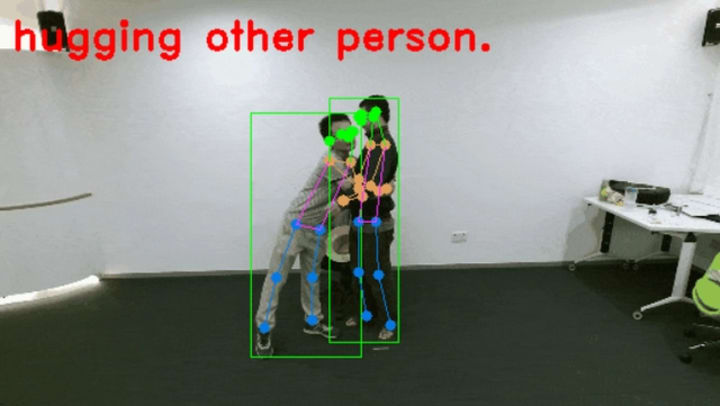

According to the author, the deep learning model he built, which uses image RGB, skeleton (Skeleton), and audio as input, can already achieve 75% accuracy in recognizing actors' poses in videos.

But not just a simple migration of the model, the training process also encountered some technical difficulties, mainly because the camera position often changes in the video.

According to the description of the netizens, I speculate that the camera shooting adult movies may not have a fixed camera position or the number of camera position changes, and the model of human action recognition is usually applied to surveillance video and other cameras with a fixed position.

The authors also state that the relatively small training data set is also a problem; he only has about 44 hours of training data, and the locations of the characters in the films are usually very close together, so it is difficult to get accurate pose estimates for most of the videos, and there is no way to incorporate all the locations into the skeleton-based model.

A relatively novel finding is that the audio signal in the input stream is boosted for the classification of 4 actions, though it is only useful for some actions.

Once the post came out, it also received unanimous praise from the tech gurus.

Everything is for science!

But netizens also immediately understood the core claims of the author: begging for resources!

Some netizens said that you can either get a huge amount of resource support or not get any at all.

Someone also said he had done a similar project and the problems he encountered were mainly highly noisy scenes and very unstable cameras. The really difficult scenes were those with more than 3 actors involved, which would make the distinction between entities would become very difficult.

And he said he had no concept of sex position with more than 2 people, so he felt a little frustrated to see this model achieve such a high accuracy rate so easily and wanted to see how the owner had achieved it.

The most generous netizen said on the spot that I would like to sponsor the dataset! The video is 140.6TB in size and 11 years and 6 months in length, including 6416 performing artists, and 46.5GB of images.

Some netizens followed the comments, asking for resources for their friends who are engaged in research.

As for the significance of this study, netizens said the application prospects can be great! In the future, in the search on the resource site, you can filter the video according to the specified POSITION, not just the traditional tags, titles, categories, etc.

Decent science

The original author made the source code public, stating that his goal was to see how the state-of-the-art Human Action Recognition (HAR) model would perform in the pornography field.

HAR is a relatively new and active area of research in the field of deep learning, where the goal is to recognize human behavior from various input streams (such as video or sensors).

From a technical point of view, the field of pornography is interesting because it has some distinctive difficulties, such as lighting changes, occlusions, and huge variations in different camera angles and shooting techniques (POV, professional videographers) making position and action recognition difficult. Two identical positions and actions may exist with multiple different camera views taken, thus completely confusing the predictions of the model.

The authors collected a very diverse dataset, including a variety of recordings such as POV, professionally shot, amateur, with or without a dedicated camera crew, etc., and also a variety of environments, people, and camera angles.

The authors also indicate that this problem may not be particularly serious if only films shot by professional teams are used.

Based on the collected dataset, the authors summarize the identification of 17 actions, such as kissing, although the definition of actions may be incomplete and there may be conceptual overlap.

Among them, the authors treat fondling as a placeholder when no other action category is detected, although the authors found during the labeling of the data that only 48 minutes of fondling data were obtained from 44 hours of movie data.

The implementation of the project is based on MMAction2, a PyTorch-based open-source toolkit for video understanding that allows, among other things, the recognition of skeletal movements of the human body.

The models for obtaining SOTA results are obtained by post-integration of three models based on three input streams.

Significant performance improvements can be achieved compared to using only RGB-based models. Since more than one action may be sent simultaneously and some actions/locations are conceptually overlapping, the evaluation criterion is the prediction accuracy of the first two as a performance metric.

The current multimodal model has an accuracy of ~75%. However, since the dataset is quite small and only ~50 experiments were conducted in total, there is a lot of room for improvement.

The multimodal (Rgb + skeleton + audio) model that performs best in terms of both performance and runtime is first presented.

The results of these models are integrated by fine-tuning the weights to 0.5, 0.6, and 1.0, respectively, because of the different importance of these models.

Another approach is to train one model with two input streams at a time (i.e., rgb+skeleton and rgb+audio) and then integrate their results.

However, in practice, this operation is not feasible.

This is because if the model's input contains audio input streams, it can only be valid for certain actions, such as deepepthroat which has a higher pitch due to gag reflexes, while for other actions it is impossible to obtain any valid features from their audio, which are identical from the audio point of view.

Similarly, the skeleton-based model can only be used in those cases where the accuracy of the pose estimation is above a certain confidence threshold (for these experiments, the threshold used was 0.4).

For example, for difficult rare movements such as scoop-up or the-snake, it is difficult to get accurate pose estimates (poses become blurred and blended) at most camera angles due to the proximity of the body positions in the frame, which can negatively affect the accuracy of the HAR model.

For common actions such as doggy, cowgirl, or missionary, the pose estimation is good enough to be used to train a HAR model.

If we had a larger dataset, then we might have enough instances of hard-to-classify poses to train all 17 actions again with a skeleton-based model.

According to the current SOTA literature, the skeleton-based model outperforms the RGB-based model. Of course, ideally, the pose estimation model should also be fine-tuned in the sex domain to obtain better overall pose estimation.

For the RGB input stream, the attention-based TimeSformer architecture achieves the best results for the 3D RGB model and the inference is very fast (~0.53s/7s clips).

In total, the RGB model has ~17,600 training clips and ~4,900 evaluation clips, and various data enhancement techniques such as rescaling, cropping, flipping, color inversion, Gaussian blur, elastic transformation, and bionic transformation are applied.

The best results based on the skeleton model are achieved by the CNN-based PoseC3D architecture and the inference speed of the model is fast (~3.3s/7s clips).

The pose dataset is much smaller than the original RGB dataset, with only 33% of the frames having a confidence level higher than 0.4, so the final test set has only 815 clips and only 6 target classes.

The speech-based model uses a simple ResNet 101,jiyu Audiovisual SlowFast with very fast inference (0.05s/7s clips).

The preprocessing of the speech is to cut out the audio that is not loud enough from the dataset. The best results were achieved by trimming the quietest 20% of the audio. In total, there are about 59,000 training clips and 15,000 validation clips.

About the Creator

Richard Shurwood

If you wish to succeed, you should use persistence as your good friend, experience as your reference, prudence as your brother and hope as your sentry.

Enjoyed the story? Support the Creator.

Subscribe for free to receive all their stories in your feed. You could also pledge your support or give them a one-off tip, letting them know you appreciate their work.

Comments

There are no comments for this story

Be the first to respond and start the conversation.