AI: Daylight robbery?

New Scientist is baffled by how to deal with the threat of AIs like ChatGPT running off with the publisher's valuable content. The answer is simple.

Concerns about AI and its problem child ChatGPT abound in the press but I was intrigued to note multiple articles on this topic in the May 13 (2023) edition of New Scientist.

“Who will foot the bill,” asks the leader article, when whole swathes of New Scientist content have been ‘ingested’ by the Artificial Intelligence? Other content owners have raised similar concerns and it seems there may be lawyers involved at some point, all adding to the now long-running saga of what to do about AI. Should we fear this new technology or should we embrace it?

Personally, I have been hearing stories about Artificial Intelligence for many decades, starting from the time I was a junior reporter working for the international technology press. This was in the 1980s. Even then, publicists were talking about AI taking over the world, computers (as they were then known) adopting ‘machine-learning’ to solve problems, and ‘neural networks’ replacing traditional algorithmic programming. It was all bunkum of course. Has this changed?

The only answer I can offer to that question is: I don’t know. Does the concept of artificial intelligence at last have some substance? I would sincerely hope that forty years of playing around with inference engines would have achieved something… but what? I have done very little research about the new generation of AIs but I have heard that they can assist with customer interactions, providing a kind of robotic customer service assistant to save the bother of anyone having to speak to a customer personally. My experience of dealing with chatbots is that they merely add to the nuisance of trying to get some help from a big bank or comms company that doesn’t give a damn. I wonder how much worse it will get if the chatbot is programmed to pretend it is human. On the other hand, perhaps they can provide a step change in research tools, just like Google did when it displaced the very basic search algorithms of the past. Who knows? Only time will tell.

On the question of legalities, I would suggest the answer to New Scientist’s little dilemma is simplicity itself, if you set aside the absurd notion that AI programs such as ChatGPT have in any way acquired a vestige of humanity or sentience (except in the broadest possible definition). As New Scientist points out, if a person reads their publication, in paper form or online, and commits any amount of the content to memory, they breach no laws. Certainly not in UK jurisdictions where the magazine is published and, it seems, the publishers are more than happy to accept a human reader’s subscription payment in compensation for this “value provision.” This supposedly scientific journal then goes on to make a very unscientific comment that illustrates the problem I describe. “...every piece of content consumed by an AI becomes a part of it, frozen in the numerical values that make up its neural networks. With this in-built knowledge…” and so it goes on.

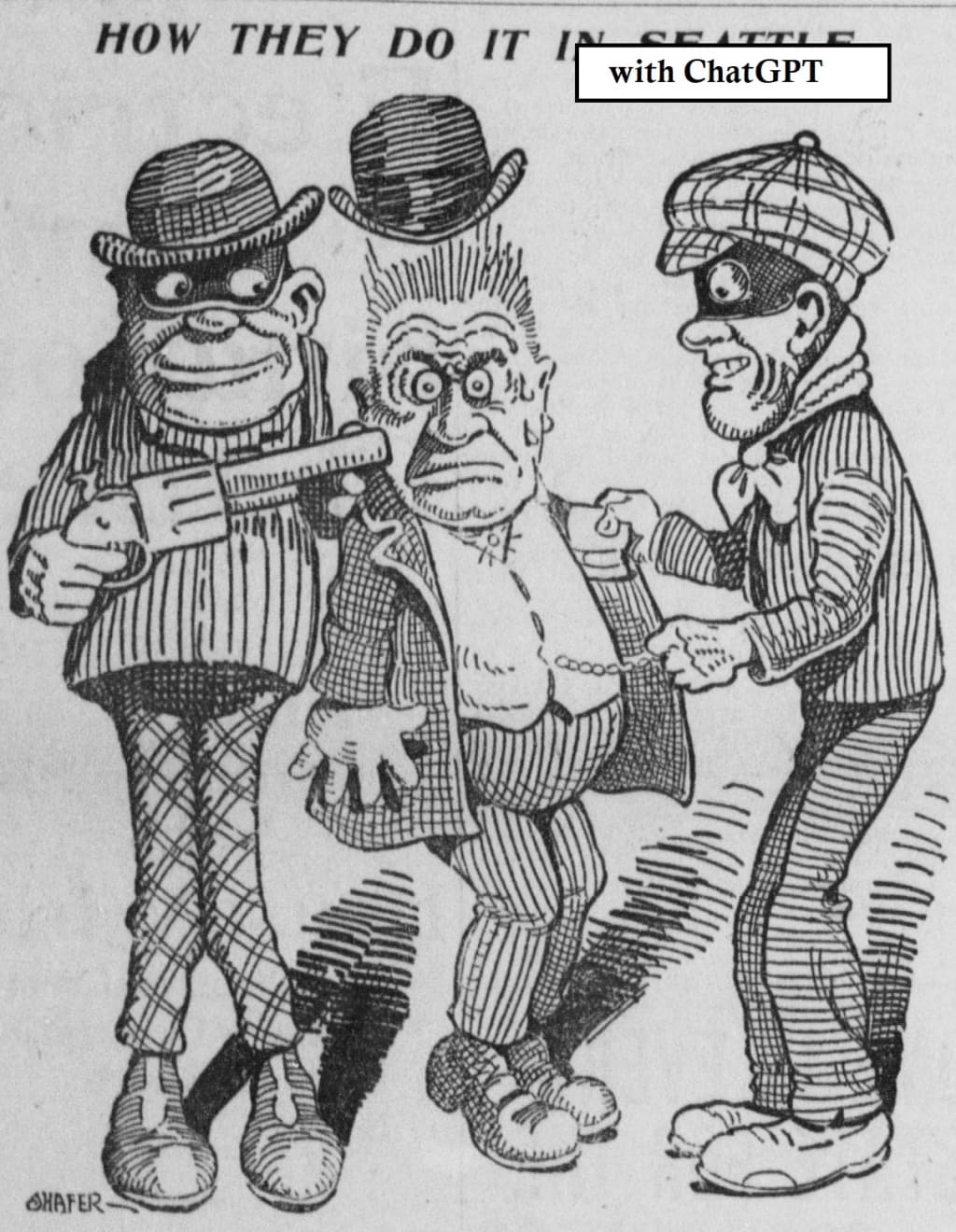

So, New Scientist, you are concerned about your “content” being “consumed”, frozen into “neural networks”, your “value provision” going unpaid? Really? Let me translate this gobbledegook into plain English for the benefit of those of us who prefer that language. The owners (human or corporate) of the ChatGPT computer program have programmed it to copy your legally-protected written text, stored by you on certain electronic devices? This it has done, as programmed, and nobody has yet asked your permission to do so or offered you any payment for copies it has taken?

Put that way (the plain and simple way), your copyright material has been copied without your permission, and therefore stolen. Is this what has happened?

My advice (no fee involved as I am not qualified to offer legal advice) to the publishers of New Scientist is to talk to their intellectual property lawyers and ask them to consider legal remedies for breach of copyright. They will no doubt be familiar with the Copyright, Designs and Patents Act 1988 s3A(1).

~ ~ ~ ~ ~

No artificial intelligence has been used in the preparation of this article, which has been produced by a naturally occurring organism that is wholly organic and, if intelligent, it doesn't often show it.

About the Creator

Raymond G. Taylor

Author based in Kent, England. A writer of fictional short stories in a wide range of genres, he has been a non-fiction writer since the 1980s. Non-fiction subjects include art, history, technology, business, law, and the human condition.

Comments (1)

Great insight into a growing industry that in my opinion needs more regulatory rules. AI should be helpful, not criminal. Also, I loved the author’s note at the end. Well played.