ChatGPT builds on OpenAI's GPT series of language models, which have grown steadily in size and capability since 2018. Here are the key milestones in that evolution:

GPT-1 (2018): GPT-1 (Generative Pre-trained Transformer 1) was the first model in the GPT series developed by OpenAI. It was released in June 2018, was trained on a large corpus of text, and had 117 million parameters, making it much smaller than its successors.

Despite its smaller size, GPT-1 was still a significant advancement in the field of natural language processing (NLP). It was pre-trained on a massive corpus of text data using a transformer architecture, which enabled it to generate human-like text, complete sentences, and even paragraphs.

However, GPT-1 had limitations compared to later versions of the GPT series. Its performance on many NLP tasks was weaker than that of later models because it was smaller and was trained on less data. Nonetheless, GPT-1 paved the way for larger and more advanced language models such as GPT-2 and GPT-3.

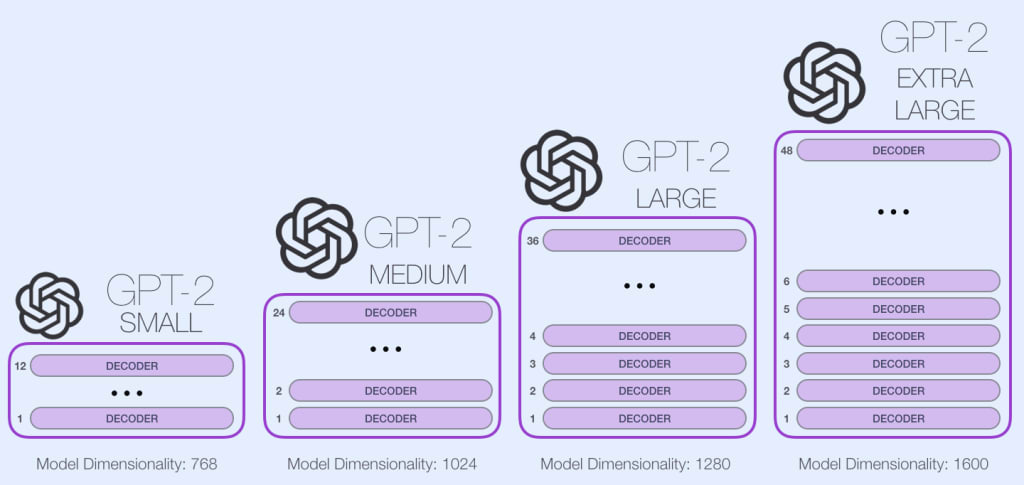

GPT-2 (2019): GPT-2 (Generative Pre-trained Transformer 2), the second model in the GPT series, was announced by OpenAI in February 2019 and was a major improvement over GPT-1. With 1.5 billion parameters, it was much larger and more powerful than its predecessor and generated far more coherent and realistic text.

GPT-2 is pre-trained on a massive dataset of text data using an unsupervised learning method. This means that the model is not explicitly trained on any specific task but rather learns to generate human-like text by predicting the next word in a given sentence. This pre-training process allows the model to capture the nuances and subtleties of natural language and generate coherent and grammatically correct sentences.
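To make the idea of next-word prediction concrete, here is a minimal sketch in Python. It is not how GPT-2 works internally: GPT-2 uses a transformer neural network trained on a very large dataset, while this toy example simply counts which word follows which in a tiny made-up corpus. The function names (`train_bigram`, `predict_next`, `generate`) and the sample text are illustrative assumptions, not part of any OpenAI library.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, start, max_words=5):
    """Greedily extend `start` by repeatedly appending the predicted next word."""
    output = [start]
    for _ in range(max_words):
        next_word = predict_next(counts, output[-1])
        if next_word is None:
            break
        output.append(next_word)
    return " ".join(output)


# Hypothetical toy corpus; a real model learns from billions of words.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)

print(predict_next(model, "the"))
print(generate(model, "sat", max_words=3))
```

The same loop of "predict one word, append it, predict again" is what lets a language model produce whole sentences and paragraphs; the difference is that a model like GPT-2 bases each prediction on all of the preceding text rather than on the last word alone.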

GPT-2 proved versatile: without task-specific training, it can attempt a range of NLP tasks, including language translation, question answering, summarization, and text completion, although its results on these tasks vary. It has also been used to generate realistic-looking text, including news articles, poetry, and even computer code.

However, due to concerns over the potential misuse of GPT-2's language generation capabilities, OpenAI initially withheld the full model and released only a smaller version with fewer parameters. It then released progressively larger versions in stages, and the full 1.5-billion-parameter model was made publicly available in November 2019.

GPT-3 (2020): GPT-3 (Generative Pre-trained Transformer 3) was released by OpenAI in June 2020 and was a significant leap forward in the development of language models. Like its predecessors, it generates human-like text by predicting the next word or sequence of words based on a given input. With 175 billion parameters, it was far larger than any earlier GPT model.

GPT-3 was trained on a massive dataset of diverse text sources, allowing it to perform a wide range of natural language processing tasks, such as language translation, question answering, text summarization, text completion, and content generation. These capabilities made it a popular tool for developers, researchers, and businesses.

ChatGPT (2022): OpenAI introduced ChatGPT in November 2022. It is a model fine-tuned from the GPT-3.5 series, a successor to GPT-3, and is designed specifically for conversation. ChatGPT has been fine-tuned, in part using human feedback, to understand and respond to human language in a natural and engaging way, making it a powerful tool for chatbots, virtual assistants, and other conversational AI applications.

Overall, the evolution of ChatGPT and the GPT series of language models has brought us closer to the goal of creating truly intelligent machines that can understand and communicate with us in natural language.
