Artificial Intelligence (AI) is helping teams overcome many of the pain points of manual software testing. Going beyond simply finding and fixing bugs, AI-assisted testing is changing the entire quality assurance (QA) process.

AI-driven testing builds on traditional quality assurance processes and can bring substantial gains in efficiency, accuracy, cost-effectiveness, and scalability. In this blog, we explore why AI is becoming a crucial part of modern development cycles. Let us unpack this transformative force together.

Why Should You Consider AI in Software Testing?

Artificial Intelligence (AI) represents a suite of technologies that empower machines to mimic human intelligence, encompassing learning, reasoning, and problem-solving capabilities. AI algorithms learn from data, identify patterns, and make decisions to achieve specific goals.

Let us take a closer look at how AI is changing testing practices and why businesses should consider integrating it into their software development cycles.

Challenges with Conventional Manual Testing

Businesses relying solely on manual testing methods may encounter several challenges, including:

- Resource Intensiveness: Manual testing requires significant manpower and time investment, making it less scalable and efficient for large-scale projects or frequent software updates.

- Human Error: Manual testing is susceptible to human error, leading to inconsistent test coverage and missed defects.

- Limited Test Coverage: Manual testing may not adequately cover all possible usage scenarios and edge cases, potentially leaving critical defects undetected.

- Time Constraints: Manual testing can be time-consuming, leading to delayed release cycles and impacting time-to-market goals.

- Difficulty in Scaling: As applications grow in complexity and scale, manual testing becomes increasingly challenging to scale up effectively.

Embracing AI for Enhanced Testing Efficiency

Integrating AI into software testing addresses these challenges by introducing automation, intelligence, and scalability into the quality assurance process. AI-powered testing not only accelerates testing cycles but also improves accuracy, coverage, and overall software reliability. By leveraging AI technologies, businesses can navigate the complexities of modern software development with greater confidence and efficiency.

How is AI Changing the Dynamics of Software Testing?

AI-based testing tools use algorithms that can sift through large volumes of data, identifying patterns and anomalies that human testers might miss. These algorithms can analyze historical data on past software bugs, identify the most common and high-risk areas, and use that information to prioritize testing. They can also optimize the order of test cases, running those most likely to find defects first. Using machine learning, these algorithms can adapt and improve over time, learning from every testing cycle to make testing steadily more effective.
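One simple way to approximate this kind of prioritization is to rank tests by their historical failure rate. The sketch below is a minimal illustration, assuming a hypothetical history of `(test_name, failed)` records from earlier CI runs; the frequency score stands in for a learned risk model and is not how any particular tool works.

```python
from collections import Counter

# Hypothetical history: (test_name, failed) pairs from previous CI runs.
HISTORY = [
    ("test_checkout", True), ("test_checkout", False), ("test_checkout", True),
    ("test_login", False), ("test_login", False),
    ("test_search", True), ("test_search", False), ("test_search", False),
]


def failure_rates(history):
    """Return the observed failure rate for each test in the history."""
    runs = Counter(name for name, _ in history)
    failures = Counter(name for name, failed in history if failed)
    return {name: failures[name] / runs[name] for name in runs}


def prioritize(tests, history):
    """Order tests so that historically failure-prone ones run first.

    Tests with no history get a rate of 1.0 so that new tests run early.
    """
    rates = failure_rates(history)
    return sorted(tests, key=lambda t: rates.get(t, 1.0), reverse=True)


if __name__ == "__main__":
    print(prioritize(["test_login", "test_search", "test_checkout", "test_new"], HISTORY))
```

Weighting recent runs more heavily, or adding signals such as code churn in the files a test covers, are natural next steps before replacing the score with a trained model.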

How is AI Changing the Dynamics of Test Automation?

Traditional test automation is largely dependent on predefined scripts, which are created based on particular scenarios or functionalities. These scripts, while efficient in some cases, can become obsolete or inadequate when the software undergoes changes, leading to potential gaps in testing and increased maintenance efforts.

In contrast, AI-driven test automation uses machine learning algorithms to generate and adapt test scripts dynamically. Rather than relying only on predefined scenarios, it learns from the application's behavior, user interactions, and historical data. This continuous learning allows test scripts to be adjusted in real time, keeping them relevant as the application evolves.

AI-driven test automation can also identify patterns and anomalies in the application, allowing for more comprehensive test coverage. It also reduces the time spent on test script maintenance, as the AI is capable of updating the scripts to match the current state of the software. This level of adaptability and efficiency is especially beneficial in an agile or continuous delivery environment, where rapid changes to the software are common.
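A common technique behind reduced script maintenance is the "self-healing" locator. The minimal sketch below assumes UI elements are plain attribute dictionaries (a hypothetical stand-in for a real DOM or driver API): if the recorded locator no longer matches exactly, it falls back to the candidate that shares the most attributes with it and then updates the locator. Commercial tools use richer signals and learned similarity models; the `threshold` value is an arbitrary assumption.

```python
def similarity(a, b):
    """Fraction of attribute keys on which two elements agree."""
    keys = set(a) | set(b)
    if not keys:
        return 0.0
    shared = sum(1 for k in keys if a.get(k) == b.get(k))
    return shared / len(keys)


def find_element(page, locator, threshold=0.5):
    """Return the element matching `locator`, healing the locator if needed.

    page: list of elements (attribute dicts) currently on screen.
    locator: attributes recorded when the test was written; it is updated
    in place when a fallback match is used.
    """
    # First try an exact match on every recorded attribute.
    for element in page:
        if all(element.get(k) == v for k, v in locator.items()):
            return element
    # Exact match failed: pick the most similar candidate above the threshold.
    best = max(page, key=lambda e: similarity(e, locator), default=None)
    if best is None or similarity(best, locator) < threshold:
        raise LookupError(f"no element resembles {locator}")
    locator.clear()
    locator.update(best)  # "heal" the locator for future runs
    return best


if __name__ == "__main__":
    page = [
        {"tag": "button", "id": "submit-btn", "text": "Submit", "class": "primary"},
        {"tag": "a", "id": "help", "text": "Help", "class": "link"},
    ]
    # The id changed from "submit" to "submit-btn" in a new release.
    locator = {"tag": "button", "id": "submit", "text": "Submit", "class": "primary"}
    print(find_element(page, locator)["id"], locator["id"])
```

Raising an error below the threshold, instead of always picking the closest element, keeps a genuinely missing element from being silently replaced by an unrelated one.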

Methods for AI-Based Software Test Automation: Leveraging AI in Testing

AI-based test automation draws on techniques such as machine learning, natural language processing (NLP), and computer vision. These methods let AI handle a wide range of testing tasks, improving efficiency and effectiveness across the testing process.

Tasks AI Software Testing Can Assist With

- Test Case Generation: AI algorithms can autonomously create test cases based on application behavior and user interactions, capturing critical scenarios and edge cases that might be overlooked in manual testing.

- Intelligent Test Execution: AI can optimize test execution by selecting and prioritizing test cases, focusing on high-risk areas and critical functionalities so that the most important defects surface early.

- Anomaly Detection: AI excels at detecting anomalies and deviations from expected behavior, enabling early identification of defects and potential issues that may impact system performance.

- Log Analysis: AI-driven tools can analyze log data to uncover hidden patterns, errors, or performance bottlenecks, aiding in root cause analysis and troubleshooting.

- User Behavior Simulation: AI can simulate user interactions and behaviors to uncover usability issues, assess application responsiveness, and optimize user experience testing.
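To make the log-analysis task concrete, the sketch below groups error lines into templates by masking variable parts (hex IDs and numbers) and counts how often each template occurs, so the most frequent failure signatures surface first. Regex masking is a simple stand-in for the learned log-parsing models some tools use, and the log format is hypothetical.

```python
import re
from collections import Counter

# Variable fields that should not distinguish one log template from another.
# Hex values are masked before plain numbers so "0x7f" is not split up.
MASKS = [
    (re.compile(r"0x[0-9a-fA-F]+"), "<HEX>"),
    (re.compile(r"\d+"), "<NUM>"),
]


def template(line):
    """Replace variable fields in a log line with placeholder tokens."""
    for pattern, token in MASKS:
        line = pattern.sub(token, line)
    return line


def top_error_templates(lines, n=3):
    """Return the n most common templates among ERROR lines, with counts."""
    errors = (template(line) for line in lines if " ERROR " in line)
    return Counter(errors).most_common(n)


if __name__ == "__main__":
    logs = [
        "12:00:01 ERROR Timeout after 3000 ms calling payment-service",
        "12:00:05 INFO Request 42 completed",
        "12:00:09 ERROR Timeout after 5000 ms calling payment-service",
        "12:00:12 ERROR Null reference at 0x7ffe12",
    ]
    for tmpl, count in top_error_templates(logs):
        print(count, tmpl)
```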

AI-Enabled QA Software Testing Tools and Technologies

Numerous AI-powered testing tools, systems, technologies, and bots are available to enhance the software testing process.

- Automated Test Case Generation: This process involves analyzing and learning from patterns and behaviors within the application to identify critical scenarios, edge cases, and potential points of failure that require testing. The AI system first interacts with the software under test, observing how it behaves across different inputs, user interactions, and system states. Through this interaction, the AI model gathers data on various application paths, responses, and outcomes. Using this data, the AI algorithm can then intelligently generate a diverse set of test cases that cover both typical and exceptional usage scenarios.

- Assistance in Test Creation: AI-driven assistance in test creation begins by analyzing existing test cases, historical data, and application requirements. The AI model learns from this information to understand the context and objectives of the testing effort. Using this knowledge, the AI platform can offer intelligent suggestions and recommendations to testers, enhancing their productivity and improving the overall quality of test cases. One key aspect of AI-enabled test creation assistance is its ability to identify potential gaps or redundancies in test coverage. By analyzing patterns and dependencies within the application, AI can highlight areas that require additional testing or refinement, ensuring comprehensive validation of critical functionalities.

- Improved Test Stability: AI analyzes test results and system behavior to identify the factors that make tests unstable. It starts by examining patterns of success and failure across test scenarios. By correlating this data with system metrics and environment variables, AI can pinpoint likely sources of instability, such as intermittent failures, resource bottlenecks, or configuration issues. AI can also help anticipate test failures before they occur: by learning from historical data and past test executions, it can flag scenarios that are prone to instability and recommend preventive measures. This proactive approach reduces unexpected test failures and makes the testing process more reliable.

- Screen Element Detection: Screen Element Detection powered by AI represents a sophisticated approach to graphical user interface (GUI) testing, enabling precise and efficient interaction with UI components. AI algorithms are trained to recognize and interact with various screen elements, such as buttons, input fields, dropdown menus, checkboxes, and more, across different applications and platforms. The process of screen element detection begins with AI models learning to interpret visual information from the application's interface. These models are trained on large datasets containing annotated screen images, teaching them to identify and classify different UI elements based on their visual characteristics, layout, and context within the application. Once trained, AI algorithms can accurately locate and interact with screen elements during automated GUI testing. This capability is particularly valuable for validating UI behavior across different screen resolutions, devices, and operating systems, ensuring consistent user experiences across diverse environments.

- Automated Issue Identification: AI-based testing solutions leverage advanced algorithms and machine learning techniques to automatically detect, prioritize, and classify issues within software applications, enabling faster resolution and continuous improvement of software quality. The process of automated issue identification begins with AI models analyzing various aspects of the software under test, including its behavior, performance metrics, and user interactions. By processing large volumes of data and learning from historical patterns, AI can identify anomalies, deviations from expected behavior, and potential defects that may impact the software's functionality and reliability. A key advantage of AI-driven automated issue identification is its ability to prioritize detected issues based on their severity and impact on the overall system. AI can categorize issues into different levels of criticality, enabling testing teams to focus their efforts on addressing high-priority issues that pose the greatest risk to software quality and user satisfaction.
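One concrete signal behind test stability is flakiness: a test that both passes and fails on the same code revision. The sketch below assumes a hypothetical list of `(test, commit, passed)` records and scores each test by how often its outcome flips between consecutive runs on the same commit. It is a plain heuristic rather than the predictive models described above, though scores like this are a typical input to such models.

```python
from collections import defaultdict


def flaky_tests(runs):
    """Return {test: flip_ratio} for tests with mixed outcomes on one commit.

    runs: iterable of (test_name, commit_sha, passed) tuples, in run order.
    flip_ratio is the fraction of consecutive same-commit runs whose outcome
    changed, so 1.0 means the result alternated every time.
    """
    outcomes = defaultdict(list)
    for test, commit, passed in runs:
        outcomes[(test, commit)].append(passed)

    flips = defaultdict(int)
    pairs = defaultdict(int)
    for (test, _commit), results in outcomes.items():
        # Compare each run with the previous run on the same commit.
        for prev, cur in zip(results, results[1:]):
            pairs[test] += 1
            flips[test] += prev != cur
    return {t: flips[t] / pairs[t] for t in pairs if flips[t] > 0}


if __name__ == "__main__":
    runs = [
        ("test_upload", "abc", True), ("test_upload", "abc", False),
        ("test_upload", "abc", True),
        ("test_login", "abc", True), ("test_login", "abc", True),
        # A failure after a code change is not flakiness.
        ("test_search", "abc", True), ("test_search", "def", False),
    ]
    print(flaky_tests(runs))
```

Grouping by commit is the key design choice: it separates genuine regressions (outcome changes when the code changes) from instability (outcome changes when nothing changed).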

How to Use OpenAI for AI Testing - A Quick Demo

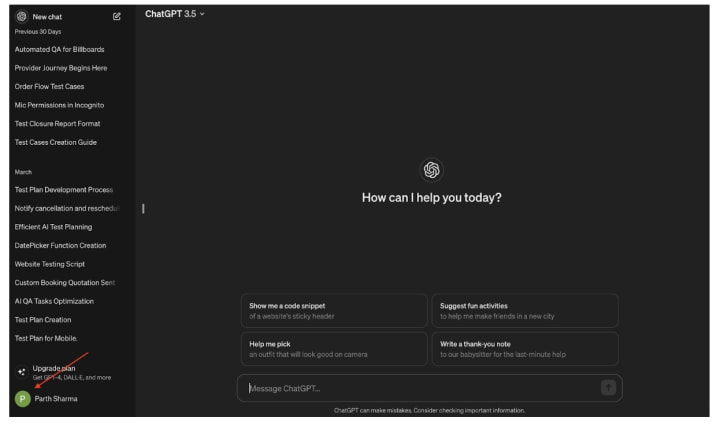

In this section, we show you how to use OpenAI’s ChatGPT to create test plans efficiently and effectively.

Prerequisites

1. Navigate to Profile: Begin by locating and accessing your profile settings within the ChatGPT interface.

2. Click on "Customize ChatGPT": Once in your profile settings, locate the option to customize ChatGPT and click on it to proceed.

3. Add Details: In the customization menu, enter details about yourself and custom instructions for how ChatGPT should respond, for example your role as a QA engineer and the format you prefer for test cases and reports.

4. Save: After making your desired changes, don't forget to save your customization.

Creating Test Plans Efficiently with AI

Use AI to create test plans more efficiently. Save time and effort by letting AI produce a first draft of the test plan for you to review and refine. Follow the steps below:

Automated Test Case Generation

Use AI to generate test cases quickly, reducing manual effort and saving valuable time. Here is how to do it:

Requirement Traceability Management

Track and link test cases to specific requirements with AI, reducing tedious manual record-keeping. To do this, use the following prompt:
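However the links are produced, the resulting traceability matrix is easy to check mechanically. This minimal sketch assumes each test case lists the requirement IDs it covers (all IDs are hypothetical) and reports requirements with no linked tests as well as tests that cite unknown requirements, which is a quick way to catch gaps or hallucinated IDs in AI output.

```python
def traceability_matrix(requirements, test_cases):
    """Map each requirement ID to the test case IDs that reference it.

    requirements: iterable of requirement IDs.
    test_cases: dict of test case ID -> list of requirement IDs it covers.
    """
    matrix = {req: [] for req in requirements}
    for tc_id, covered in test_cases.items():
        for req in covered:
            if req in matrix:
                matrix[req].append(tc_id)
    return matrix


def uncovered(matrix):
    """Requirement IDs with no linked test case."""
    return [req for req, tcs in matrix.items() if not tcs]


def unknown_references(requirements, test_cases):
    """Test case IDs that cite requirement IDs missing from the requirement list."""
    known = set(requirements)
    return sorted(tc for tc, reqs in test_cases.items() if set(reqs) - known)


if __name__ == "__main__":
    reqs = ["REQ-1", "REQ-2", "REQ-3"]
    tcs = {"TC1": ["REQ-1"], "TC2": ["REQ-1", "REQ-2"], "TC3": ["REQ-9"]}
    m = traceability_matrix(reqs, tcs)
    print(m, uncovered(m), unknown_references(reqs, tcs))
```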

Regression Test Suite Identification

Use AI to identify the most relevant test cases for regression testing, keeping coverage efficient while saving time and effort. To do this, use the following prompt:
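A common deterministic baseline for regression selection, useful for checking or complementing AI suggestions, is change-based selection: run the tests that exercise the files touched by a change. The sketch assumes you already have per-test coverage data (a hypothetical dict here, for example gathered by a coverage tool on an earlier run) and a list of changed paths such as the output of `git diff --name-only`.

```python
def select_regression_tests(changed_files, coverage, always_run=()):
    """Pick tests whose covered files intersect the changed files.

    changed_files: paths modified in this change.
    coverage: dict of test name -> set of source files the test exercises.
    always_run: tests to include regardless, e.g. smoke tests.
    """
    changed = set(changed_files)
    selected = {test for test, files in coverage.items() if files & changed}
    selected.update(always_run)
    return sorted(selected)


if __name__ == "__main__":
    coverage = {
        "test_cart": {"cart.py", "pricing.py"},
        "test_login": {"auth.py"},
        "test_profile": {"auth.py", "profile.py"},
        "test_search": {"search.py"},
    }
    print(select_regression_tests(["auth.py"], coverage, always_run=["test_search"]))
```

This approach is only as good as the coverage map: code paths reached through configuration, reflection, or external services may be missed, which is one place where AI-based suggestions can add value.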

Integration Test Generation

Get help from AI in writing integration tests, so you can check how different parts of the software work together without spending a lot of time doing it manually. To do this, use the following prompt:
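For reference, the sketch below shows the general shape of an integration test you might ask for: two components wired together and checked as a unit, rather than each one mocked in isolation. `Inventory` and `OrderService` are hypothetical stand-ins for your real services, and the test uses Python's built-in `unittest` module.

```python
import unittest


class Inventory:
    """Hypothetical stock store shared by the services under test."""

    def __init__(self, stock):
        self.stock = dict(stock)

    def reserve(self, sku, qty):
        if self.stock.get(sku, 0) < qty:
            raise ValueError(f"insufficient stock for {sku}")
        self.stock[sku] -= qty


class OrderService:
    """Hypothetical service that depends on Inventory."""

    def __init__(self, inventory):
        self.inventory = inventory
        self.orders = []

    def place_order(self, sku, qty):
        self.inventory.reserve(sku, qty)  # raises before the order is recorded
        self.orders.append((sku, qty))
        return len(self.orders)


class OrderInventoryIntegrationTest(unittest.TestCase):
    def setUp(self):
        # Real (not mocked) collaborators, wired together for each test.
        self.inventory = Inventory({"SKU-1": 5})
        self.service = OrderService(self.inventory)

    def test_order_reduces_stock(self):
        self.service.place_order("SKU-1", 2)
        self.assertEqual(self.inventory.stock["SKU-1"], 3)

    def test_failed_order_is_not_recorded(self):
        with self.assertRaises(ValueError):
            self.service.place_order("SKU-1", 10)
        self.assertEqual(self.service.orders, [])
        self.assertEqual(self.inventory.stock["SKU-1"], 5)
```

Saved as, for example, `test_orders.py`, it can be run with `python -m unittest test_orders`. Whatever the AI generates, review that its assertions check behavior across the boundary between components, as the second test does.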

Sign-Off Email Generation

Streamline the sign-off process with AI-generated emails that communicate test results efficiently and keep stakeholders informed. To do this, use the following prompt:
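If you want the figures in a sign-off email to come straight from your results rather than from the model, the summary can be rendered from a template, and ChatGPT can still be asked to polish the wording around it. The template text, field names, and results format below are assumptions for illustration.

```python
from string import Template

# Illustrative email layout; adjust the wording to your team's conventions.
EMAIL = Template("""Subject: QA sign-off for $release

Hi all,

Testing for $release is complete.
Executed: $total | Passed: $passed | Failed: $failed | Pass rate: $rate%
Open defects: $open_defects

Regards,
$sender""")


def sign_off_email(release, results, open_defects, sender):
    """Render a plain-text sign-off email from test results.

    results: dict of test name -> True (passed) or False (failed).
    open_defects: list of open defect IDs.
    """
    total = len(results)
    passed = sum(results.values())
    rate = round(100 * passed / total, 1) if total else 0.0
    return EMAIL.substitute(
        release=release,
        total=total,
        passed=passed,
        failed=total - passed,
        rate=rate,
        open_defects=", ".join(open_defects) or "none",
        sender=sender,
    )


if __name__ == "__main__":
    results = {"t1": True, "t2": True, "t3": True, "t4": False}
    print(sign_off_email("v2.3.0", results, ["BUG-12"], "QA Team"))
```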

Conditional Sign-Off Determination

AI can help draft conditional sign-offs that follow industry-standard outlines, making the decision process more thorough and reliable. To do this, use the following prompt:
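Writing the exit criteria down explicitly makes an AI-drafted conditional sign-off easier to check. The rule below is a hypothetical policy, not an industry standard: open critical defects block the release, and any other unmet criterion makes the sign-off conditional. The thresholds are placeholders to be taken from your own test plan.

```python
from dataclasses import dataclass


@dataclass
class ExitCriteria:
    """Hypothetical thresholds; set these from your own test plan."""
    min_pass_rate: float = 95.0
    max_open_critical: int = 0
    max_open_major: int = 3


def sign_off_decision(pass_rate, open_critical, open_major, criteria=None):
    """Return ("go" | "conditional" | "no-go", reasons)."""
    criteria = criteria or ExitCriteria()
    reasons = []
    # Critical defects block the release outright under this policy.
    if open_critical > criteria.max_open_critical:
        reasons.append(f"{open_critical} open critical defect(s)")
        return "no-go", reasons
    # Other unmet criteria become conditions attached to the sign-off.
    if pass_rate < criteria.min_pass_rate:
        reasons.append(f"pass rate {pass_rate}% below {criteria.min_pass_rate}%")
    if open_major > criteria.max_open_major:
        reasons.append(f"{open_major} open major defects (limit {criteria.max_open_major})")
    return ("conditional" if reasons else "go"), reasons


if __name__ == "__main__":
    print(sign_off_decision(92.5, 0, 1))
```

The returned reasons can be pasted into the prompt as the conditions the sign-off must state, so the model writes around decisions that were made explicitly.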

Closure Report Generation

Use AI to create detailed reports summarizing all testing activities, saving time on report writing and analysis. Follow these steps:
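The numbers in a closure report should come from your test management data rather than from the model, so one option is to compute the summary yourself and ask ChatGPT only to write the narrative around it. The record formats below (`(test_id, status)` and `(defect_id, severity, state)`) are hypothetical.

```python
from collections import Counter


def closure_summary(executions, defects):
    """Summarize test executions and defects for a closure report.

    executions: list of (test_id, status), e.g. "passed", "failed", "blocked".
    defects: list of (defect_id, severity, state), with state "open" or "closed".
    """
    status_counts = Counter(status for _, status in executions)
    open_by_severity = Counter(sev for _, sev, state in defects if state == "open")

    lines = [f"Test cases executed: {len(executions)}"]
    lines += [f"  {status}: {n}" for status, n in sorted(status_counts.items())]
    lines.append(f"Defects logged: {len(defects)}, open: {sum(open_by_severity.values())}")
    lines += [f"  open {sev}: {n}" for sev, n in sorted(open_by_severity.items())]
    return "\n".join(lines)


if __name__ == "__main__":
    executions = [("TC1", "passed"), ("TC2", "passed"), ("TC3", "failed"), ("TC4", "blocked")]
    defects = [("BUG-1", "critical", "closed"), ("BUG-2", "minor", "open"), ("BUG-3", "major", "open")]
    print(closure_summary(executions, defects))
```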

Tips For Implementing AI In QA Software Testing

- Start Small: Initiate AI implementation through pilot projects focused on specific testing tasks or scenarios. This approach allows teams to assess the feasibility and effectiveness of AI tools and methodologies in real-world testing environments before scaling up.

- Data Quality: Prioritize data quality and diversity to ensure the effectiveness of AI models. High-quality datasets that accurately represent various usage scenarios, edge cases, and system behaviors are essential for training AI algorithms to perform reliable and comprehensive testing.

- Collaborate: Foster collaboration between AI experts, data scientists, and testing teams to leverage domain knowledge and expertise. Encourage open communication and knowledge sharing to identify testing challenges and opportunities where AI can add value effectively.

- Define Clear Objectives: Establish clear objectives and success criteria for AI implementation in testing. Define specific goals such as improving test coverage, accelerating test execution, or enhancing defect detection rates to measure the impact and ROI of AI initiatives.

- Select Appropriate Tools: Evaluate and select AI-powered testing tools based on specific testing requirements and project needs. Consider factors such as compatibility with existing testing frameworks, ease of integration, scalability, and support for various testing types (e.g., functional, performance, security).
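To make "measure the impact" concrete, one widely used metric is defect detection percentage (DDP): the share of all known defects that testing caught before release. The sketch computes it and reports the change between a baseline period and an AI pilot; the figures in the example are made up for illustration.

```python
def defect_detection_percentage(found_in_testing, found_after_release):
    """DDP = defects found by testing / all defects found, as a percentage."""
    total = found_in_testing + found_after_release
    if total == 0:
        raise ValueError("no defects recorded")
    return 100 * found_in_testing / total


def compare(metric_name, baseline, pilot):
    """Format the change in a metric between a baseline and a pilot."""
    delta = pilot - baseline
    return f"{metric_name}: {baseline:.1f} -> {pilot:.1f} ({delta:+.1f})"


if __name__ == "__main__":
    baseline = defect_detection_percentage(40, 10)  # illustrative numbers only
    pilot = defect_detection_percentage(45, 5)
    print(compare("DDP %", baseline, pilot))
```

Defects found after release keep arriving for some time, so DDP for a pilot is only meaningful once a comparable observation window has passed for both periods.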

What Does AI Testing Mean for QA Testers and the Future?

Integrating AI into software testing is not about replacing QA testers but about extending what they can do. As AI becomes more integrated, QA roles will keep evolving. Testers are now learning AI tools and data analysis, sharpening their critical thinking and problem-solving skills along the way. With AI handling routine tasks, testers can shift their focus to strategic planning, complex scenarios, and making sure AI algorithms are not just effective but also ethical.

In a nutshell, AI testing is reshaping how we ensure software quality, allowing teams to ship robust, high-quality applications at a larger scale. Adopting it calls for a mindset that values efficiency, innovation, and continuous growth. As AI keeps evolving, its impact on software development and testing is likely to shape the future of the industry.

Article source: This article was written by JENNIFER RENITA K W, Lead (Software Engineer in Testing - III), and was published on the GeekyAnts Blog.

About the Creator

Geekyants

GeekyAnts is an app design and development studio in the USA, with a global presence in India and the UK. We specialize in building solutions for web and mobile that drive innovation and transform industries and lives. https://geekyants.com/
