ROBOTS WITH AI ALSO NEED PHYSICAL INTELLIGENCE

Robots with AI (artificial intelligence) are emerging as developers combine large language models (LLMs) with autonomous devices. Find out why they’re discovering that it takes far more than linguistic skills to replicate basic human skills.

Like most of my generation, I think the first robot I became aware of was the one on the TV program Lost in Space. His name was simply Robot, although fans of the show tell me he was officially Robot Model B-9. Another famous fictional robot came before him.

That was Robby the Robot in the 1950s science fiction classic Forbidden Planet. That was before my time and I didn’t get to see that film until my college years.

People seem to have speculated about mechanical beings, automatons, androids and robots since the dawn of civilization. The word “robot” itself dates to the early 1920s, when Karel Čapek’s science fiction play R.U.R. introduced it.

THE TERM ROBOT EMERGED FROM SCIENCE FICTION

Isaac Asimov drew even more public attention to the concept in 1950 with his book I, Robot. Star Trek’s Commander Data and, of course, C-3PO and R2-D2 made fictional robots friendlier and more relatable.

These days, robots have become part of our daily lives. One of the offices where I used to work had a robot that delivered the mail twice a day until, ironically, email made it obsolete – I missed it.

Contemporary robots are much more sophisticated. They play key roles in manufacturing, warehousing, agriculture, transportation and even the restaurant business in some countries.

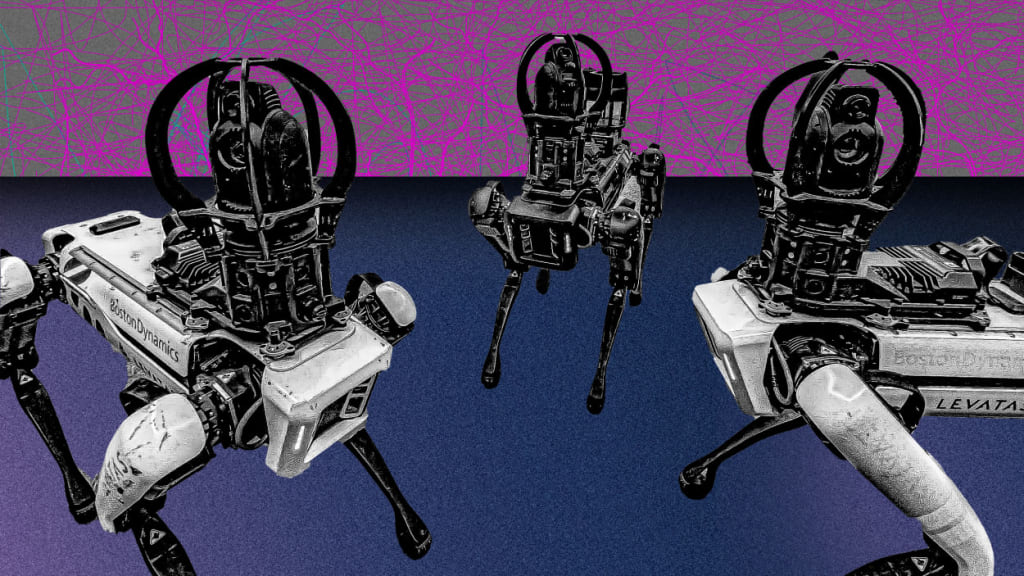

SPOT THE ROBOT DOG

The technology company Boston Dynamics has attracted the media’s attention with its product called Spot. It’s an agile mobile robot whose four-legged, dog-like structure inspired its name.

Spot works autonomously and has its own dynamic sensing program. It performs a wide range of inspection and measurement tasks in factories, warehouses, research labs and on construction sites.

At the same time, OpenAI’s ChatGPT application has become a household word, at least if those households include technophiles. It’s the most famous of a category of new applications called large language models (LLMs).

LARGE LANGUAGE MODELS: THE LATEST “KILLER APPLICATIONS”

It’s the language aspect of LLMs that has made them the latest “killer applications.” In 2022, a team of researchers published an influential paper called Do As I Can, Not As I Say on the arXiv.org website. The title suggests that devices need improved physical skills to supplement their recent leap in language skills.

The scientists were the first to propose a fusion between robots and LLMs. As the paper describes, “The robot can act as the language model’s ‘hands and eyes’ while the language model supplies high-level semantic knowledge about the task.”

Now, a tech company called Levatas is striving to bring that proposal to life by combining Spot with ChatGPT. Partnering with OpenAI and Boston Dynamics, they’ve produced a next-generation robot dog that can understand and follow verbal instructions, talk and answer questions.

“NATURAL-LANGUAGE ABILITY”

Chris Nielsen is Levatas’s CEO. He explained the project’s goal to Scientific American this way: “For the average common industrial employee who has no robotic training, we want to give them the natural-language ability to tell the robot to sit down or go back to its dock.”

This new, more conversational version of Spot the Dog is both fascinating and practical. Even so, it still has major limitations.

The Levatas version of Spot still needs very strict parameters and operating environments. It’s an improvement for factories, warehouses or construction sites because it’s easier for workers to operate, but it’s no substitute for a real dog, let alone a skilled human worker.

THE TURING TEST

Is the new generation of language-driven robots conscious or sentient? That’s a question that goes back to the origins of electronic computers and one of their pioneers, Alan Turing.

Turing famously proposed in 1950 that a system would achieve artificial intelligence if an average person couldn’t tell the difference between a conversation with the system and one with a human being.

The key point is that, according to Turing, the system doesn’t have to understand what it’s doing, or what any of the words mean. So, although these AI-infused robotic devices aren’t aware of what they’re doing, why they’re doing it, or even that they exist, Turing would consider them intelligent.

PRACTICAL PROBLEMS ARISE FROM ROBOTIC BODIES

The practical problems the robots encounter arise more from the limitations of their robotic bodies than from the LLM applications. “The way things are now, the language understanding is amazing, and the robots suck,” Stefanie Tellex, a Brown University roboticist, told Scientific American. “The robots have to get better to keep up.”

Our fascination with building intelligent robots is part of humanity’s search to understand ourselves, who we are, and our place in the world around us. One of the emerging insights is that, as any coach or phys-ed teacher could have pointed out, humans have a sort of physical or athletic intelligence.

AND ANOTHER THING…

The scientific term for it is bodily-kinaesthetic intelligence. It’s one of eight distinct kinds of intelligence that scientists are coming to recognize, based on the work of psychologist Howard Gardner.

So, the linguistic intelligence of LLM applications isn’t enough. Just as humanity has to recognize broader aspects of intelligence like musical, interpersonal and naturalistic awareness, somehow, our machines need to acquire all of these human dimensions to accomplish Pinocchio’s and Commander Data’s ambition to “become a real boy.”

As Google research scientist Karol Hausman told Scientific American, “The bottleneck is at the level of simple things like opening drawers and moving objects. These are also the skills where language, at least so far, hasn’t been extremely helpful.”

We always have more to learn if we dare to know.

LEARN MORE:

Scientists Are Putting ChatGPT Brains Inside Robot Bodies. What Could Possibly Go Wrong?

Brain Scans Enable Scientists to Read Minds

About the Creator

David Morton Rintoul

I'm a freelance writer and commercial blogger, offering stories for those who find meaning in stories about our Universe, Nature and Humanity. We always have more to learn if we Dare to Know.
