Every data scientist needs to understand the fundamentals of clustering algorithms because they are a key component of data science. We'll quickly go over what clustering is and how it benefits data science before talking about the top 5 clustering algorithms.

What Is Clustering?

Clustering is a machine learning technique that groups data points. It is a well-known method for statistical data analysis and unsupervised learning. Given a set of data points, a clustering algorithm assigns each point to a specific group. Ideally, points in the same group have similar properties, while points in different groups have dissimilar properties.

The Importance of Clustering Algorithms in Data Science

The purpose of clustering algorithms is to derive useful information from massive amounts of structured and unstructured data. They let you separate data based on its characteristics or attributes and group it by similarity.

Different industries employ clustering algorithms in different ways. You might use them, for instance, to categorize illnesses in medicine or to segment consumers in market research.

K-means Clustering Algorithm

K-means is the most widely used clustering algorithm because it is simple to understand and implement. It is a staple of introductory data science and machine learning courses.

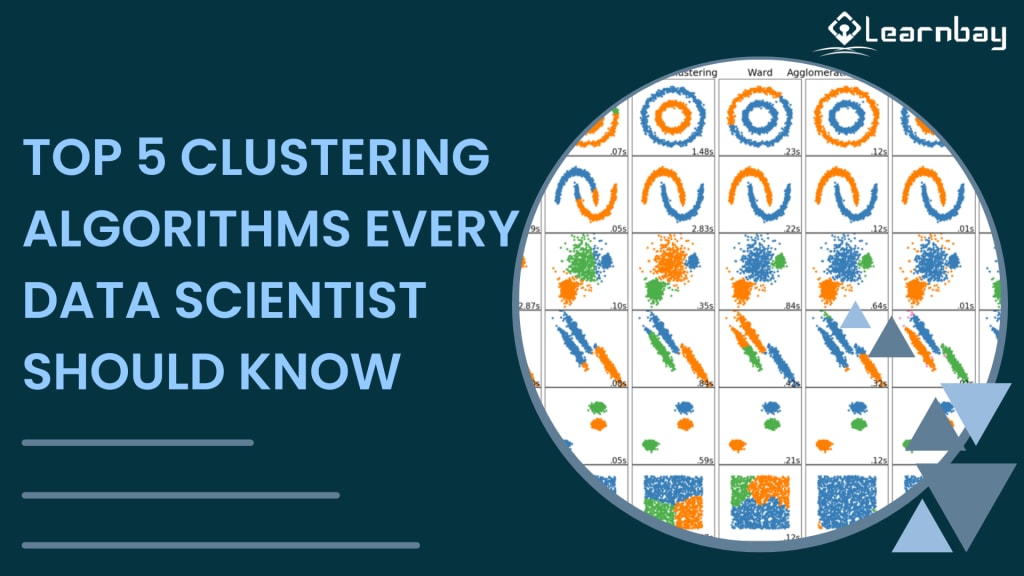

The image illustrates the following steps:

- Choose the number of classes (groups) and randomly initialize their center points. Deciding how many classes to use is important, so examine the data carefully and look for distinct groupings. The center points, shown as X in the figure, are vectors of the same length as each data point vector.

- Classify each data point by computing the distance between that point and each group center, then assign the point to the group whose center is closest.

- Recompute each group center as the mean of all the vectors assigned to that group.

- Repeat these steps for a set number of iterations, or until the group centers change very little between iterations.

Pros

- K-means is fast because each iteration only computes distances between the data points and the group centers, so its cost grows linearly with the number of points.

Cons

- You have to choose the number of groups in advance, which is often the most challenging part.

- Because the cluster centers are initialized randomly, different runs can produce different results.
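
The K-means steps described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the function names `kmeans` and `nearest`, the `tol` stopping threshold, the fixed `seed`, and the sample points are all assumptions made for the example.

```python
import math
import random

def nearest(point, centers):
    """Index of the center closest to `point` (Euclidean distance)."""
    return min(range(len(centers)), key=lambda j: math.dist(point, centers[j]))

def kmeans(points, k, max_iters=100, tol=1e-9, seed=0):
    rng = random.Random(seed)
    # Step 1: use k randomly chosen data points as the initial centers.
    centers = rng.sample(points, k)
    for _ in range(max_iters):
        # Step 2: assign each point to the group with the closest center.
        labels = [nearest(p, centers) for p in points]
        # Step 3: recompute each center as the mean of its group.
        new_centers = []
        for j in range(k):
            group = [p for p, lab in zip(points, labels) if lab == j]
            if group:
                new_centers.append(tuple(sum(c) / len(group) for c in zip(*group)))
            else:
                new_centers.append(centers[j])  # empty group: keep the old center
        # Step 4: stop when no center moved noticeably.
        shift = max(math.dist(a, b) for a, b in zip(centers, new_centers))
        centers = new_centers
        if shift < tol:
            break
    # Final labels are computed against the final centers.
    return centers, [nearest(p, centers) for p in points]

# Hypothetical sample data: two loose groups of 2-D points.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.3)]
centers, labels = kmeans(points, k=2)
print(centers, labels)
```

Changing `seed` changes the initial centers, which is exactly why different runs of K-means can give different results.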

Mean-Shift Clustering Algorithm

Mean shift, the second type of clustering method, is a sliding-window algorithm that tries to locate dense areas of data points. It is a centroid-based algorithm: its goal is to find the center point of each group. It works by updating candidate center points to be the mean of the points inside the sliding window. In a post-processing stage, the candidate windows are filtered to eliminate near duplicates. The end result is a final set of center points and their corresponding groups.

- Consider a set of points in two-dimensional space. Start with a circular sliding window centered at a randomly chosen point C, with radius r as the kernel. Mean shift is a hill-climbing algorithm that moves this kernel to a denser region at each step until convergence.

- At each iteration, the window shifts toward denser regions by moving its center point to the mean of the points inside the window. The density inside the sliding window is proportional to the number of points inside it, so shifting to the mean of the points in the window gradually moves it toward areas of higher point density.

- Keep shifting the window according to the mean until no further shift can fit more points inside it.

- Repeat this procedure with many sliding windows until every point lies within a window. When several windows overlap, the window containing the most points is kept. The data points are then clustered according to the sliding window in which they reside.
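
The sliding-window procedure above can be sketched as follows. This is a simplified variant under stated assumptions: it uses a flat (uniform) kernel, starts one window at every data point instead of at random locations, and merges windows that converge within `radius / 2` of each other rather than comparing point counts. The name `mean_shift`, that merge threshold, and the sample data are choices made for this illustration only.

```python
import math

def mean_shift(points, radius, max_iters=100, tol=1e-4):
    """Flat-kernel mean shift with one sliding window started at each data point."""
    modes = []
    for start in points:
        center = start
        for _ in range(max_iters):
            # Points currently inside the window of the given radius.
            window = [p for p in points if math.dist(p, center) <= radius]
            # Shift the window center to the mean of those points.
            new_center = tuple(sum(c) / len(window) for c in zip(*window))
            moved = math.dist(center, new_center)
            center = new_center
            if moved < tol:
                break  # the window has stopped moving
        modes.append(center)

    # Post-processing: merge windows that ended up in nearly the same place.
    centers, labels = [], []
    for mode in modes:
        for idx, c in enumerate(centers):
            if math.dist(mode, c) < radius / 2:
                labels.append(idx)
                break
        else:
            centers.append(mode)
            labels.append(len(centers) - 1)
    return centers, labels

# Hypothetical sample data: two loose groups of 2-D points.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.3)]
centers, labels = mean_shift(points, radius=2.0)
print(centers, labels)
```

Note that the number of clusters is never passed in; it falls out of the `radius` choice, which is why picking the window size matters so much.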

Pros

Unlike the K-means clustering algorithm, you are not required to choose the number of clusters.

The cluster centers converge toward the points of maximum density, which is desirable because it is intuitive and fits naturally in a data-driven context.

Cons

Choosing the window size, that is, the radius r, can be non-trivial.

DBSCAN

DBSCAN stands for Density-Based Spatial Clustering of Applications with Noise.

DBSCAN is a density-based clustering algorithm similar to mean shift, with a few notable advantages.

- DBSCAN begins with an arbitrary starting data point that has not been visited. The neighborhood of this point consists of all points within a distance epsilon (ε) of it.

- If the neighborhood contains at least a required minimum number of points, the clustering process starts and the current data point becomes the first point of a new cluster. Otherwise, the point is labeled as noise, although it may later be absorbed into a cluster. In both cases the point is marked as visited.

- All points within distance ε of the first point become part of the same cluster. The same procedure is then repeated for every point newly added to the cluster.

- Continue until every point in the cluster's ε neighborhood has been visited and labeled.

- Once the current cluster is finished, start again from a new unvisited point, which leads to the discovery of a further cluster or of noise. When the process ends, every point has been visited and labeled as either belonging to a cluster or being noise.
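
As a rough illustration of these steps, here is a minimal pure-Python sketch. It makes a few assumptions that the description above leaves open: points are visited in list order rather than at random, a point counts as a member of its own neighborhood, and noise is encoded as the label `-1`. The names `dbscan`, `eps`, and `min_pts` and the sample data are chosen for this example.

```python
import math

NOISE = -1

def dbscan(points, eps, min_pts):
    labels = [None] * len(points)  # None means "not visited yet"

    def neighbors(i):
        # Indices of all points within eps of point i (including i itself).
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue  # already visited
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may later be relabelled as a border point
            continue
        cluster += 1           # point i starts a new cluster
        labels[i] = cluster
        queue = list(seeds)
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # former noise reached from a dense point
            if labels[j] is not None:
                continue             # already labelled, do not expand again
            labels[j] = cluster
            nbrs = neighbors(j)
            if len(nbrs) >= min_pts:
                queue.extend(nbrs)   # j is dense too, so keep expanding from it
    return labels

# Hypothetical sample data: two dense groups plus one isolated point.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1),
          (8.0, 8.0), (8.2, 7.9), (7.9, 8.3),
          (4.5, 4.5)]
labels = dbscan(points, eps=1.0, min_pts=3)
print(labels)  # [0, 0, 0, 1, 1, 1, -1]
```

The isolated point at (4.5, 4.5) has no neighbors within `eps`, so it stays labeled as noise instead of being forced into a cluster.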

Pros

Unlike K-means, DBSCAN does not require the number of clusters to be set in advance.

Unlike mean shift, which places outliers into a cluster even when they are very different from its other points, DBSCAN identifies outliers as noise.

It does an excellent job of finding clusters of arbitrary size and shape.

Cons

DBSCAN does not perform as well as other methods when clusters have varying densities. The distance threshold ε and the minimum number of points used to identify a neighborhood would need to change from cluster to cluster as the density changes.

Choosing the distance threshold ε also becomes difficult when the data is very high-dimensional.

Expectation-Maximization (EM) Clustering Using Gaussian Mixture Models (GMM)

Gaussian Mixture Models (GMMs) are more flexible than K-means. We start by assuming that the data points are Gaussian distributed. Each cluster's shape is then described by two parameters: the mean and the standard deviation. In two dimensions, having a standard deviation in both the X and Y directions means a cluster can take any elliptical shape rather than being restricted to a circle. Each cluster is thus modeled by its own Gaussian distribution.

We use the Expectation-Maximization (EM) optimization algorithm to find the Gaussian parameters of each cluster. The procedure works as follows.

- Choose the number of clusters, as in K-means, and randomly initialize the Gaussian distribution parameters for each cluster.

- Given these Gaussian distributions, compute the probability that each data point belongs to each cluster. The closer a point is to the center of a Gaussian, the more likely it is to belong to that cluster.

- Based on these probabilities, compute a new set of Gaussian parameters that maximizes the probability of the data points within the clusters. The new parameters are computed as a weighted sum of the data point positions, where each weight is the probability that the data point belongs to that cluster.

- Repeat steps 2 and 3 until convergence, when the distributions change very little from one iteration to the next.
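
The EM loop above can be sketched as follows. To keep it short, this example assumes one-dimensional data, so each cluster has a single mean and a single standard deviation, and it runs a fixed number of iterations instead of testing for convergence. The names `fit_gmm_1d`, `responsibilities`, `init_means`, and `min_std` are hypothetical and exist only in this illustration.

```python
import math
import random
import statistics

def normal_pdf(x, mean, std):
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def responsibilities(data, weights, means, stds):
    """Step 2: probability that each point belongs to each cluster."""
    resp = []
    for x in data:
        dens = [w * normal_pdf(x, m, s) for w, m, s in zip(weights, means, stds)]
        total = sum(dens)
        resp.append([d / total for d in dens])
    return resp

def fit_gmm_1d(data, k, iters=50, init_means=None, seed=0, min_std=1e-3):
    rng = random.Random(seed)
    # Step 1: initialize the parameters (random data points as means by default).
    means = list(init_means) if init_means is not None else rng.sample(data, k)
    stds = [max(statistics.pstdev(data), min_std)] * k
    weights = [1.0 / k] * k
    for _ in range(iters):
        resp = responsibilities(data, weights, means, stds)
        # Step 3: re-estimate each Gaussian from probability-weighted points.
        for j in range(k):
            nj = sum(r[j] for r in resp) + 1e-12  # tiny constant avoids dividing by zero
            weights[j] = nj / len(data)
            means[j] = sum(r[j] * x for r, x in zip(resp, data)) / nj
            var = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, data)) / nj
            stds[j] = max(math.sqrt(var), min_std)  # floor avoids a zero-width Gaussian
    return weights, means, stds, responsibilities(data, weights, means, stds)

# Hypothetical sample data: two groups of 1-D values.
data = [1.0, 1.2, 0.8, 8.0, 8.2, 7.9]
weights, means, stds, resp = fit_gmm_1d(data, k=2)
print(means, stds)
```

Each row of `resp` holds one point's membership probabilities across the clusters, which is what distinguishes this soft assignment from the hard labels produced by K-means.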

Pros

Because they use standard deviations, GMMs are more flexible than K-means in terms of cluster covariance.

Because GMMs use probabilities, a data point can belong to more than one cluster. If a data point lies in the overlap of two clusters, we can describe its membership as mixed, for example X percent in class 1 and Y percent in class 2.

Agglomerative Hierarchical Clustering

Hierarchical clustering methods fall into two categories: top-down and bottom-up. A bottom-up algorithm first treats each data point as its own cluster, then successively merges pairs of clusters until a single cluster contains all the data points. Bottom-up hierarchical clustering is therefore also called hierarchical agglomerative clustering (HAC). The hierarchy can be pictured as a tree whose root is the single cluster that gathers all the samples and whose leaves are clusters containing a single sample each.

Start by treating each data point as its own cluster. Next, choose a distance metric that measures the separation between two clusters. As an example, use average linkage, which defines the distance between two clusters as the average distance between the data points in the first cluster and the data points in the second.

At each iteration, merge the two clusters with the smallest average linkage into a single cluster.

Repeat the previous step until all the data points are contained in a single cluster, that is, until you reach the root of the tree.
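
The bottom-up merging described above can be sketched in plain Python. This is a deliberately naive illustration that recomputes every average linkage at each step, so it only suits small inputs. The names `agglomerative` and `average_linkage`, the `n_clusters` stopping parameter, and the sample data are assumptions for the example.

```python
import math
from itertools import combinations

def average_linkage(points, a, b):
    """Average distance over all pairs with one point in cluster a and one in b."""
    total = sum(math.dist(points[i], points[j]) for i in a for j in b)
    return total / (len(a) * len(b))

def agglomerative(points, n_clusters=1):
    # Every point starts as its own cluster (clusters hold point indices).
    clusters = [[i] for i in range(len(points))]
    merges = []  # record of which clusters were merged, in order
    while len(clusters) > n_clusters:
        # Find the pair of clusters with the smallest average linkage.
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: average_linkage(points, clusters[ij[0]], clusters[ij[1]]),
        )
        merges.append((clusters[i], clusters[j]))
        merged = clusters[i] + clusters[j]
        # combinations() yields i < j, so delete j first to keep index i valid.
        del clusters[j]
        del clusters[i]
        clusters.append(merged)
    return clusters, merges

# Hypothetical sample data: two loose groups of 2-D points.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.3)]
clusters, merges = agglomerative(points, n_clusters=2)
print(sorted(sorted(c) for c in clusters))  # [[0, 1, 2], [3, 4, 5]]
```

Leaving `n_clusters` at its default of 1 runs the merging all the way to the root, and the `merges` list then records the whole tree from the leaves upward.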

Pros

You do not need to specify the number of clusters in advance. Because the algorithm builds a tree, you can choose afterward the number of clusters that looks best.

The algorithm is relatively insensitive to the choice of distance metric.

Top Clustering Methods for Data Mining

In data mining, clustering methods are commonly grouped by the kind of model they use. Here are the main model types, each with an example algorithm.

- Connectivity models: hierarchical clustering

- Centroid models: K-means clustering

- Distribution models: the EM algorithm

- Density models: DBSCAN

Conclusion

Data science and data mining are frequently confused. Because it operates according to similar principles, data mining can be considered a close relative of data science.

Data science relies heavily on clustering techniques, which are also important in data mining. The clustering techniques mentioned above should be familiar to any aspiring data scientist who plans to pursue a career in the field. These are the top five algorithms in data science that are discussed the most.
