AI has been around for longer than you might think, often without people even noticing it. If you doubt that, just think about Netflix’s recommendations for movies and series, Instagram’s feed personalization, Amazon’s suggestions for accompanying purchases, and text editors that complete your phrases.

All of those are examples of AI-powered features. The community didn’t care if it was AI prediction, human recommendation, or a remarkable coincidence until the recent quantum leap in generative AI. The spark turned into a flame after the public release of ChatGPT.

The astonishingly skilled bot, as well as the recently revealed GPT-4, can mimic authentic writing, images, and coding, providing compelling content and significantly lowering marketing costs for businesses. While the tech changed the way businesses operate within a couple of months, it also raised concerns about copyright, authenticity, privacy, and ethics. As a result, there is now a need to detect AI-generated content in order to keep smart machines in check.

Generative AI as a Threat to Authenticity, Security, and Ethics

The huge public exposure of generative AI has drawn attention to its potential risks, especially copyright issues. Identifying AI-generated content has become a burning discussion point among educators, employers, researchers, and parents.

While researchers and programmers have actively taken on the task of developing tools to help differentiate between human-created and machine-generated content, even the most advanced solutions struggle to keep pace with the technology’s breakneck progress.

This has prompted some organizations to prohibit AI-generated content. For instance, Getty Images, a world-famous supplier of stock images, and some art communities have banned AI-generated content, and even New York City’s Department of Education has banned ChatGPT in public schools.

Concerns about generative AI’s questionable benefits, increased privacy risks, and ethical implications turned out to be more serious than even the tech community expected. On March 29, Elon Musk, Steve Wozniak, and more than 1,000 other tech industry leaders signed an open letter calling for a pause on AI experiments. The petition seeks to protect humans from the “profound risks to society and humanity” that AI systems with human-competitive intelligence can provoke, from flooding the internet with disinformation and automating away jobs to the more catastrophic future risks emphasized in science fiction.

To be more precise, the potential AI-related hazards are the following:

- Bias and discrimination: Chatbots may reinforce and amplify existing prejudices and discrimination if they are trained on biased data.

- Misinformation: AI language models can produce inaccurate or misleading information if not adequately trained or supervised.

- Privacy and security: AI tools can access and analyze vast amounts of sensitive personal information, raising concerns about privacy and security.

- Job displacement: Being able to automate simple repetitive tasks, AI can replace human workers, potentially leading to job displacement.

- Lack of accountability: AI language models can generate inappropriate or harmful content, but holding individuals or organizations responsible for their actions can be challenging. Furthermore, AI can impersonate people for more effective social engineering cyber-attacks.

These risks underscore the significance of responsible AI development and deployment, as well as the need for ethical guidelines and regulations to mitigate the potential adverse effects of AI language models.

Some issues are already being addressed by legislative authorities. For example, one California bill requires companies and governments to inform affected persons in order to increase AI usage transparency. Such a bill aims to prevent non-compliant data practices, such as those applied by facial recognition company Clearview AI, which was fined for breaching British privacy laws. The company had processed personal data without permission while training its models on billions of photos scraped from social media profiles. In this scenario, evaluating the ethics of data collection and storage practices could have highlighted a lack of privacy safeguards and averted the resulting negative publicity.

One Pennsylvania bill would require organizations to register systems that use AI in important decision-making, as well as to provide information on how the algorithms were used. This information will help objectively evaluate AI algorithms and assess bias. In the future, such initiatives could help to reduce racial bias. In one example of AI-powered racial bias, an algorithm assigned Black patients a lower level of risk than equally sick White patients. This happened because the algorithm used health costs as a proxy for health needs, and less money was being spent on Black patients who had the same level of need as White patients.

Shining a Light on AI-Generated Content: Existing Detection Tools

Alongside the growing demand for generative AI regulation, there is an ongoing effort to identify GPT-generated outputs. Below are some examples of existing tools for AI-generated text detection.

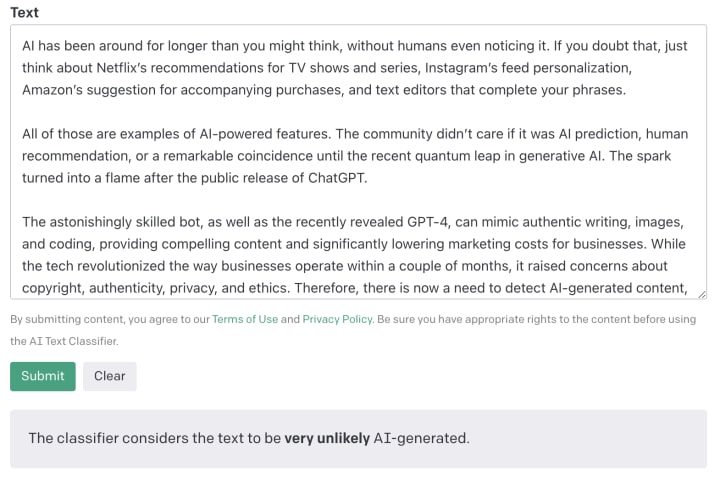

OpenAI AI Classifier

OpenAI released a classifier on January 31. It assigns a text to one of the following categories, depending on how likely the text is to be AI-generated:

- Very unlikely corresponds to a classifier threshold of <0.1.

- Unlikely corresponds to a classifier threshold between 0.1 and 0.45.

- Unclear corresponds to a classifier threshold between 0.45 and 0.9.

- Possibly corresponds to a classifier threshold between 0.9 and 0.98.

- Likely corresponds to a classifier threshold >0.98.

The classifier is in its early days, as OpenAI states that for the likely category, “about 9% of human-written text and 26% of AI-generated text from our challenge set has this label.” In other words, at this threshold the classifier has a true positive rate of 26% and a false positive rate of 9%.
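The thresholds and rates above can be sketched in a few lines of Python. This is only an illustration, not OpenAI’s code: the function names are hypothetical, the handling of scores that fall exactly on a boundary is an assumption, and the share of AI-generated text in the input is a made-up parameter.

```python
def label_for_score(score: float) -> str:
    """Map a classifier score in [0, 1] to one of the five categories.

    Boundary handling (which side 0.1, 0.45, 0.9, and 0.98 fall on)
    is an assumption; the article does not specify it.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score < 0.1:
        return "very unlikely"
    if score < 0.45:
        return "unlikely"
    if score < 0.9:
        return "unclear"
    if score <= 0.98:
        return "possibly"
    return "likely"


def precision(tpr: float, fpr: float, ai_share: float) -> float:
    """Share of texts labeled "likely" that really are AI-generated.

    tpr and fpr are the true and false positive rates; ai_share is the
    assumed fraction of AI-generated texts among everything checked.
    """
    flagged_ai = tpr * ai_share
    flagged_human = fpr * (1.0 - ai_share)
    return flagged_ai / (flagged_ai + flagged_human)


print(label_for_score(0.5))
print(round(precision(0.26, 0.09, ai_share=0.5), 2))
print(round(precision(0.26, 0.09, ai_share=0.1), 2))
```

With the quoted rates, the usefulness of a “likely” label depends heavily on how much of the checked text is AI-generated in the first place, which is one reason to treat a single label with caution.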

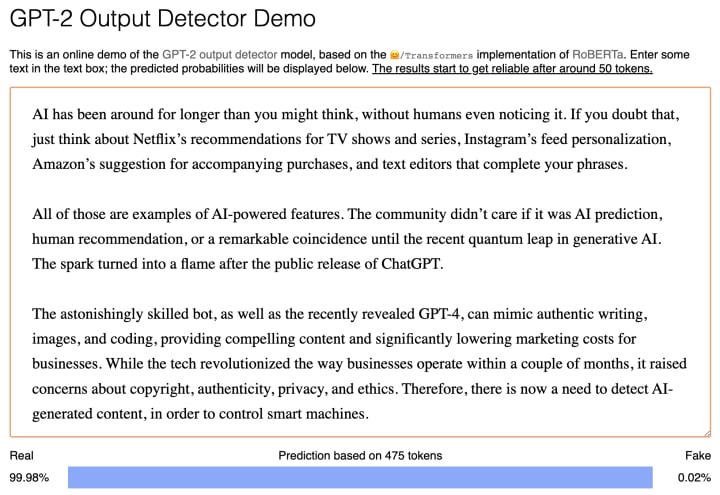

GPT-2 Output Detector

Hosted on Hugging Face, this tool detects whether a text was generated by GPT-2. It uses the RoBERTa base OpenAI Detector model, which is a RoBERTa base model fine-tuned with the output of the 1.5B-parameter GPT-2 model. Considering that ChatGPT is based on GPT-3.5 and that GPT-4 has recently been released, this tool is quite outdated.

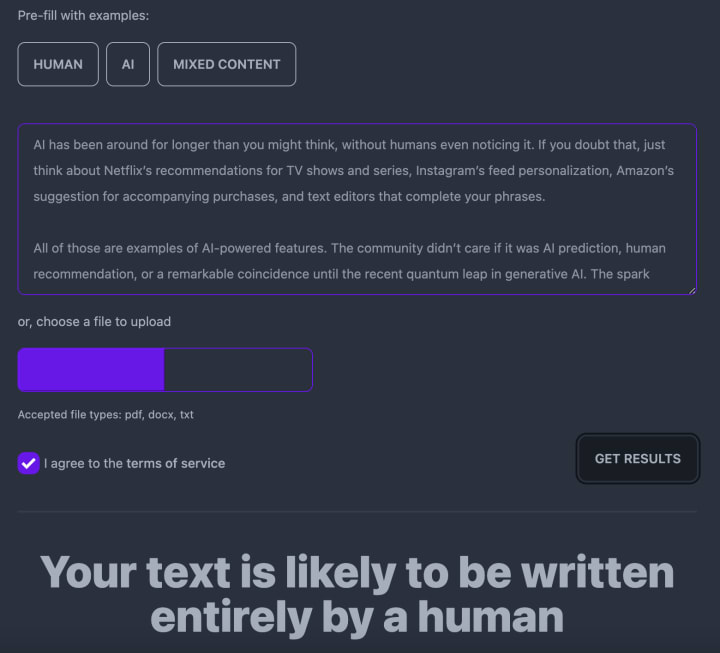

GPTZero

Built over the New Year by Princeton University student Edward Tian, it measures “perplexity” and “burstiness” to determine whether the text was human-written.

Perplexity measures how random or unpredictable the text is. The higher the perplexity, the more likely the text is human-written. The lower the perplexity, the more likely it’s AI-generated.

Burstiness measures how much sentences vary. Humans tend to mix longer, more complex sentences with shorter ones, causing spikes in perplexity in human writing. Machine-generated sentences are more uniform and consistent.

However, the classifier isn’t foolproof and is susceptible to adversarial attacks.
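The two signals described above can be sketched as follows. This is a simplified illustration and not GPTZero’s actual implementation, which the article does not describe: perplexity is computed with the standard formula (the exponential of the average negative log-probability per token), burstiness is assumed to be the spread of per-sentence perplexities, and the token probabilities are made-up numbers that would normally come from a language model.

```python
import math
from statistics import pstdev


def perplexity(token_probs):
    """Exponential of the average negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


def burstiness(sentences):
    """Spread (population standard deviation) of per-sentence perplexity.

    Using the standard deviation is an assumption made for this sketch.
    """
    return pstdev([perplexity(probs) for probs in sentences])


# Hypothetical per-token probabilities, one inner list per sentence.
uniform_text = [[0.5, 0.5, 0.5], [0.5, 0.5, 0.5]]  # every sentence equally predictable
varied_text = [[0.5, 0.5], [0.1, 0.1]]             # one predictable, one surprising

print(burstiness(uniform_text))
print(burstiness(varied_text))
```

In this toy input, the uniform text has no variation between sentences, while the varied text shows the kind of spike in perplexity that the description above associates with human writing.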

The Truth About AI Detectors: Current Limitations and Future Prospects

The majority of the available tools come with limitations:

- Volume: Some require a minimum of 1,000 characters, which is approximately 150–250 words.

- Accuracy: A classifier can mislabel both AI-generated and human-written text. Moreover, AI-generated text can be edited easily to evade the classifier.

- Language: The majority of tools work only with English, as it was the primary language used for training. Moreover, they are likely to get things wrong on text written by children, as the training data is mostly written by adults.

It’s still challenging to distinguish between human-created and AI-generated content. Therefore, the niche is likely to see ongoing development and refinement in the coming years, as we continue to grapple with the implications of generative AI for the world of content creation and consumption.

The Future Is Already Here: Are You Ready for It?

Generative AI is expected to keep revolutionizing business strategies. In 2021, the global market size almost reached $8 billion, and it’s forecast to surpass $110 billion by 2030, growing at a CAGR of 34.3%. 2022 was a breakthrough year for the industry, in which the world saw ChatGPT and DALL-E 2 from OpenAI, Stable Diffusion from Stability AI, Midjourney, and dozens of other AI tools. With technology evolving at such a breakneck pace, these advancements are likely only the beginning.
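The forecast can be sanity-checked with the compound growth formula. The sketch below assumes a round base of $8 billion in 2021 (the figure above is “almost” $8 billion) and nine full years of compounding until 2030; the function name is illustrative.

```python
def project_market_size(base: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate."""
    return base * (1.0 + cagr) ** years


# Assumed inputs: $8 billion base in 2021, 34.3% CAGR, 2021 to 2030.
size_2030 = project_market_size(8.0, 0.343, 2030 - 2021)
print(f"Projected 2030 market size: ${size_2030:.1f} billion")
```

Under these assumptions the result lands slightly above $110 billion, which is consistent with the forecast quoted above.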

Neural networks that generate text, visual, and audio content based on short user prompts are likely to become widely used both in one’s daily routine and at the workplace. For example, on March 16, Microsoft announced it is bringing the power of next-generation AI to its workplace productivity tools via Microsoft 365 Copilot. The tool represents an entirely new way of working, embedded in the Microsoft 365 apps — Word, Excel, PowerPoint, Outlook, Teams, and more. According to Microsoft News Center, it will allow people to “be more creative in Word, more analytical in Excel, more expressive in PowerPoint, more productive in Outlook and more collaborative in Teams.”

Humans may also soon bid farewell to the good old days of Googling in favor of conversational AI chatbots.

AI Doesn’t Replace You; It Augments Your Knowledge and Capabilities

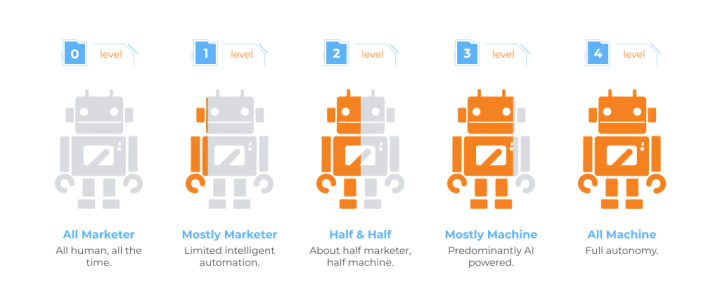

It’s highly doubtful that AI will replace humans any time soon. Take a receptionist as an example: conversational AI could take over part of the role, as its main benefit is automating repetitive tasks, yet it can’t handle angry customers. Instead, AI assistants are likely to work alongside human receptionists, recommending actions to resolve customer problems by drawing on their customer service training and real-time access to customer history and the company’s knowledge base. AI constantly learns and evolves, which makes it possible to intelligently automate data-driven, repetitive tasks while continually improving its ability to predict outcomes and human behavior.

The Marketing AI Institute has proposed a useful methodology for measuring how performance and efficiency can be improved through intelligent automation of processes and routine tasks. It is called the Marketer-to-Machine (M2M) Scale, and it represents five levels of intelligent automation at the use case level.

- Level 0: All Marketer. The system operates solely based on human input and manual processes, without any involvement of AI. Its actions are limited to the instructions provided to it. All human, all the time.

- Level 1: Mostly Marketer. The system incorporates some level of intelligent automation through AI, but it heavily depends on marketer inputs and oversight.

- Level 2: Half & Half. The system is a combination of human expertise and machine capabilities, with the ability to handle most of the tasks. However, it still necessitates marketer input and oversight.

- Level 3: Mostly Machine. The system is primarily powered by AI and can function autonomously under specific conditions without human input or oversight.

- Level 4: All Machine. The system can achieve full autonomy and operate at a level equivalent to or surpassing human performance, without any human inputs or oversight. The marketer only needs to specify the desired outcome, and the machine will handle all the necessary tasks.

Today, most AI-based marketing automation solutions fall under Levels 1 and 2. Level 3 can be achieved, but it typically requires a substantial investment of time and resources during the planning, training, and onboarding stages. However, Level 4 marketing automation does not currently exist.

Since the pace of AI advancement is set to surpass all previous revolutions in human history and is likely to have a global impact across industries, it’s worth remaining curious. We recommend taking an active approach to understanding the potential influence that AI could have on your profession and organization.

Final Thoughts

It will take more time and resources to research, test, and implement accurate, reliable AI tools. Today, it is important to acknowledge potential AI deployment in the workplace for improved efficiency. Although AI-generated content may still have its flaws, it’s best to adopt the tech instead of ignoring it. This may help you to optimize and enhance current business processes.

At the same time, there arises an opportunity for businesses to develop and market new, enhanced AI content-detecting systems and other related software. Need an experienced engineering team? With A-to-Z industry expertise in AI/ML, data processing, and other IT competencies, we’ve built hundreds of solutions of varying complexity and know exactly what it takes to accomplish a commercially successful software engineering project. Let’s talk now!

The article was originally published on the Intetics blog.

About the Creator

Intetics

Intetics is a leading global technology company focused on the creation & operation of effective distributed technology teams aimed at turnkey software product development, digital transformation, quality assurance, and data processing.
