Cross Browser Testing Clarified

Mostly unnecessary now. If you do need it, there is a much simpler and cheaper way to do it.

This article is one of the “IT Terminology Clarified” series.

Cross-Browser Testing (CBT) means verifying a web app in different browsers. Many software companies conducted CBT in some form around 2014, as there was no absolutely dominant (>60% share) browser at that time. Also, Microsoft Internet Explorer was known for not conforming strictly to W3C standards.

To reach the widest possible customer base, software companies had to spend considerable effort supporting all browsers, IE in particular.

I remember an Australian website that charged customers $10 more for using IE, to justify the extra effort of making its web app behave correctly in IE.

For this reason, Cross-Browser Testing gradually became part of software testing, though it was often done quite poorly.

Cross Browser Testing is largely unnecessary now

Today the situation is different: Google Chrome dominates the desktop browser market, Microsoft has deprecated Internet Explorer 😌, and Edge has been rebuilt on Chromium. If Chrome and Edge are counted as one browser (they are based on the same technology), it has over 75% market share.

For that reason, I suggest software teams forget about Cross-Browser Testing, unless there are specific needs, and focus on functional testing in Chrome. Just do functional testing well and frequently, using automated test scripts.

If you really want to do CBT (yes, there may still be a need for it, or simply a mandate from management), or are interested in this topic, read on.

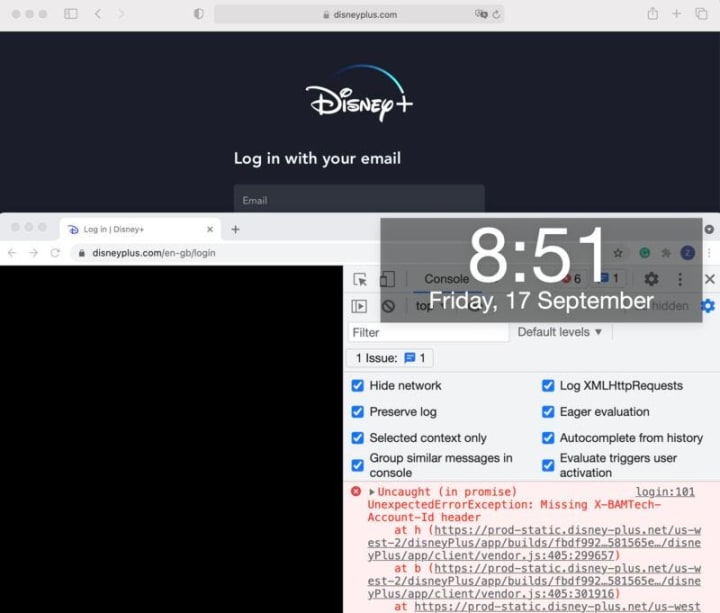

Update: four days after this article was published, I found that the Disney Plus login page failed to load in Chrome on macOS (it loaded fine in Safari on macOS and in Chrome on Windows).

This is an example of failed CBT, on such a high-profile website.

The high cost of Cross Browser Testing

The concept and process of Cross-Browser Testing are simple: test the web app in different browsers, e.g. Chrome, IE and Firefox, on different OS platforms. However, this is easier said than done. Many people do not realize how high the cost of CBT can be if it is not done properly (which is common).

I have seen ambitious cross-browser testing plans with goals such as “all features must pass on the following browser configurations”:

- Google Chrome on Win 7

- Google Chrome on Win 10

- Internet Explorer 6 on Win 7

- Internet Explorer 6 on Win Vista

- Firefox on Redhat Linux

- Firefox on Ubuntu Linux

- Safari on Mac OS 10.7

- …

Of course, these teams were unable to achieve that. I mention it here only as an example of how people plan these tests.

Consider the following criteria:

- Time and Effort. How much time do you allocate to the testing team for CBT?

- Coverage. Will you cover all regression test cases? Or just a set of key test cases?

- Frequency. How often do you run CBT? Every single build, every sprint or just once as a part of User Acceptance Testing?

We all know the ideal answer: 100% coverage for every single build, since the earlier an issue is found, the easier and cheaper it is to fix. However, the reality is that most software teams cannot afford that, or lack the technical capability to achieve it.
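Multiplying the three criteria together shows why ambitious plans like the one above rarely survive. The back-of-the-envelope sketch below assumes CBT effort scales linearly with the number of configurations, covered test cases and runs; every number in it (200 regression cases, 10 builds per sprint, 3 minutes per manual check) is a hypothetical placeholder, not data from a real project.

```python
from dataclasses import dataclass


@dataclass
class CbtPlan:
    """Hypothetical parameters for a cross-browser testing plan."""
    browser_configs: int     # browser + OS combinations, e.g. "Chrome on Win 10"
    test_cases: int          # test cases covered in each configuration
    runs_per_sprint: int     # how often CBT is executed within a sprint
    minutes_per_case: float  # assumed manual effort to execute one test case


def executions_per_sprint(plan: CbtPlan) -> int:
    """Every configuration runs every covered test case on every run."""
    return plan.browser_configs * plan.test_cases * plan.runs_per_sprint


def manual_hours_per_sprint(plan: CbtPlan) -> float:
    """Manual effort grows linearly with each of the three factors."""
    return executions_per_sprint(plan) * plan.minutes_per_case / 60


# Illustrative numbers only: the 7 configurations listed above,
# full regression (200 cases) on every build (10 builds per sprint).
ideal = CbtPlan(browser_configs=7, test_cases=200, runs_per_sprint=10, minutes_per_case=3)
# A cut-down plan: 10 key test cases, run once per sprint.
key_only = CbtPlan(browser_configs=7, test_cases=10, runs_per_sprint=1, minutes_per_case=3)

print(executions_per_sprint(ideal))       # 14000
print(manual_hours_per_sprint(ideal))     # 700.0
print(manual_hours_per_sprint(key_only))  # 3.5
```

Even with these modest assumptions, the "ideal" plan needs hundreds of manual hours per sprint, which is why teams quietly shrink coverage or frequency.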

Cross-Browser Testing approaches

There are generally two ways to do CBT.

1. Manual

Manual testers open the web app in each browser configuration and verify it against a scripted test plan. Unless this is limited to a very small scope (e.g. a couple of tests such as login and the main flow), it is tedious and expensive.

2. Automation

Automated test scripts can be used to drive the web app in different browser configurations. There are commercial cloud platforms with cross-browser testing support, such as Sauce Labs and BrowserStack. Automation will greatly reduce the cost of CBT. This sounds cool, doesn’t it?

The truth is: every software company I know of that had purchased these services rarely used them.

How do I do Cross-Browser Testing?

At AgileWay (my company), when changes are made to one of our applications (we have several), we push it to production after getting a green build (passing the entire automated E2E regression suite) on the BuildWise CT server. Therefore, we don't have dedicated time for cross-browser testing.

Yet we still do Cross-Browser Testing effectively, as part of normal automated regression testing. In other words, a certain level of cross-browser testing is built into the regular regression testing that runs on a Continuous Testing server. The following is from the test report of a build in BuildWise.

As you can see, the automated tests were run on:

- Chrome on macOS

- Chrome on Linux

- Firefox on Linux

- Chrome on Win 10

Although I could add other browser configurations, I believe these are enough for our web apps (more browser configurations means higher cost). I am currently considering switching from Chrome to Edge (Chromium) on one of the Windows build agents.
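One way to picture this setup: the same regression suite, unchanged, is dispatched to several build agents, each configured with its own target browser. The Python sketch below models the four configurations listed above. It is hypothetical: BuildWise's real agent configuration is not shown in this article, and the agent names and the `BROWSER` environment variable are assumptions made for illustration.

```python
from __future__ import annotations

import os
import subprocess
from dataclasses import dataclass

# Assumed variable name: the test helper would read the target browser from it.
BROWSER_ENV_VAR = "BROWSER"


@dataclass(frozen=True)
class Agent:
    """A hypothetical build agent: one OS, one target browser."""
    name: str
    platform: str
    target_browser: str


# Mirrors the browser configurations in the report above (names are made up).
AGENTS = [
    Agent("agent-mac", "macOS", "chrome"),
    Agent("agent-linux-1", "Linux", "chrome"),
    Agent("agent-linux-2", "Linux", "firefox"),
    Agent("agent-win10", "Windows 10", "chrome"),
]


def agent_env(agent: Agent, base_env: dict[str, str] | None = None) -> dict[str, str]:
    """Copy the base environment and set only the agent's target browser."""
    env = dict(os.environ if base_env is None else base_env)
    env[BROWSER_ENV_VAR] = agent.target_browser
    return env


def run_suite(agent: Agent, command: list[str]) -> int:
    """Run the unchanged regression suite with the agent's browser setting.

    In a real CT server each agent runs on its own machine; this sketch
    simply runs the command locally and returns its exit code.
    """
    result = subprocess.run(command, env=agent_env(agent))
    return result.returncode
```

With this shape, moving one Windows agent from Chrome to Edge is a one-line change to its `target_browser`; the test command and the test scripts stay exactly the same.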

The setup is easy too: just select ‘Target browser’ in the BuildWise Agent.

It is important to remember that there must be no changes to test scripts!

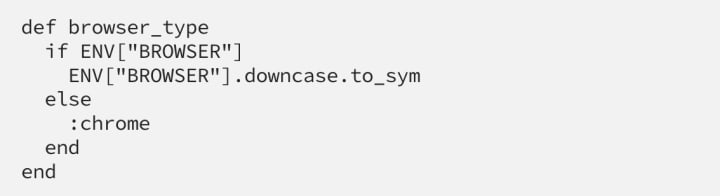

How do I make the same tests work in different browsers? Easy: by using an environment variable.

Every test script starts with these statements:

"browser_type" is defined in the test helper (see Maintainable Automated Test Design).

Advice: if you want to do proper Cross-Browser Testing, you must choose Selenium WebDriver. The reason is simple: Selenium WebDriver is the only automation framework supported by all major browser vendors. Some web automation frameworks (typically JavaScript ones) do not drive the browser natively; they simulate user interactions instead.
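Vendor support matters because WebDriver is a W3C standard protocol: Google (chromedriver), Mozilla (geckodriver), Apple (safaridriver) and Microsoft (msedgedriver) each ship a driver that speaks it, so one client can control every major browser. The sketch below builds the standard "New Session" request body, in which only `browserName` changes between browsers. The `driver_url` parameter is an assumption: you must start the vendor's driver yourself and pass its address.

```python
import json
import urllib.request

# Standard browserName values used in W3C WebDriver capabilities.
BROWSER_NAMES = {
    "chrome": "chrome",
    "firefox": "firefox",
    "safari": "safari",
    "edge": "MicrosoftEdge",
}


def new_session_payload(browser: str) -> dict:
    """Build the body of a W3C WebDriver 'New Session' request (POST /session)."""
    return {"capabilities": {"alwaysMatch": {"browserName": BROWSER_NAMES[browser]}}}


def create_session(driver_url: str, browser: str) -> str:
    """POST the payload to an already-running driver and return the session id."""
    request = urllib.request.Request(
        f"{driver_url.rstrip('/')}/session",
        data=json.dumps(new_session_payload(browser)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        # W3C responses wrap the result in a "value" object.
        return json.load(response)["value"]["sessionId"]
```

In day-to-day testing the Selenium client library sends this request for you; the point is that the same request shape works against every vendor's driver.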

Below is a screenshot of a BuildWise Agent running tests against the Safari browser on macOS.

---

This article was originally published on my Medium Blog on 2021-09-13.

About the Creator

Zhimin Zhan

Test automation & CT coach, author, speaker and award-winning software developer.

A top writer on Test Automation, with 150+ articles featured in leading software testing newsletters.
