Beginner's Guide to Test-Driven Development

A Primer for Building Better Software with Test-Driven Development

What is Test-Driven Development (TDD)?

Test-driven development (TDD) is a software development process built around a short, repeating cycle. First, an automated test case is written that defines a desired improvement or new function. Next, the minimum amount of code is written to pass that test. Finally, that code is refactored to acceptable standards. This process encourages simple, decoupled designs. The benefits of TDD include increased test coverage, faster feedback to developers, and more modular code with fewer defects. Over time, an extensive regression test suite accumulates, allowing the code to be changed with confidence.

Benefits of TDD

Improved software design and architecture

The practice of TDD leads to better overall software design because the tests serve as an executable form of the specification. Writing tests first forces developers to think through requirements before implementation. This often results in cleaner interfaces, looser coupling between modules, and more deliberate attention to dependencies and interactions. The short cycles also facilitate evolutionary design, allowing architectures to emerge through iterative refinement.

Higher test coverage and quality

TDD yields high test coverage by design, because developers create an automated test for every new unit of functionality they code. The resulting test suite improves quality by leaving fewer untested parts of the code where defects can hide. Because the tests are small and focused, they execute quickly and can be run after even minor changes. Maintaining these tests alongside the code keeps the suite relevant over time. Together, these properties provide a safety net for continuous integration and deployment environments.

Faster feedback and debugging

The short develop-test cycles of TDD provide nearly instant feedback, revealing whether a given change breaks something. A failing test pinpoints exactly which requirement the new code does not yet meet. This is much faster than waiting for lengthier integration and system testing. Issues can then be fixed immediately by updating the code or the test. This tight feedback loop keeps the code working as expected every step of the way.

Confidence in changing and refactoring code

The safety net of a comprehensive regression test suite allows developers to refactor, restructure, and optimize code without fear of breaking existing functionality. Such changes let the system architecture and design adapt to new features and improvements over time. TDD gives assurance that any change that breaks tested behavior will be caught quickly and can be fixed right away. This confidence and flexibility help systems evolve with changing needs.

Fundamentals of TDD

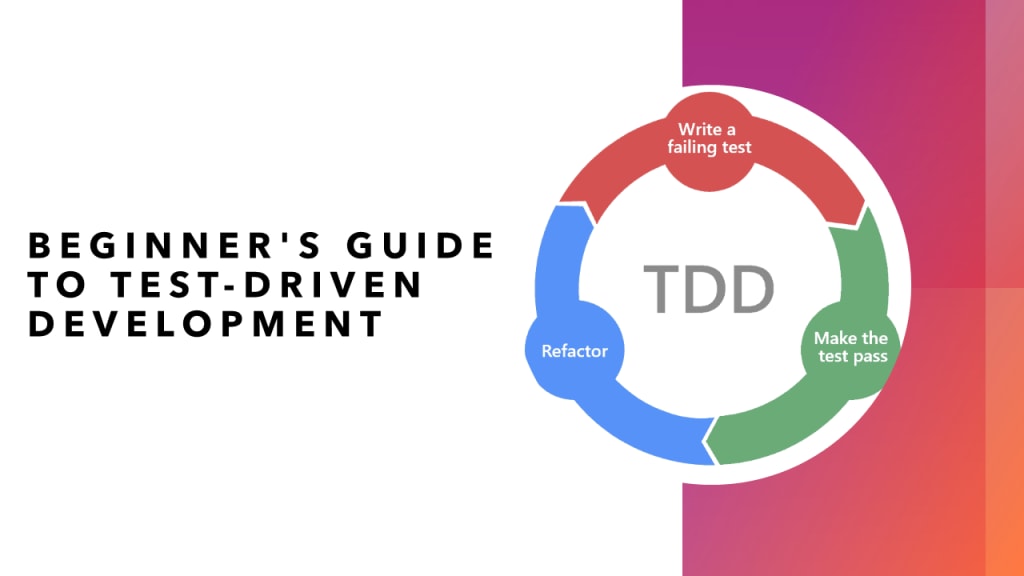

1. Red-Green-Refactor Cycle

The red-green-refactor cycle is at the core of test-driven development (TDD). It's a short, repeatable approach that directs the entire development process:

- Write Failing Test: The first step is to write a new automated test case based on the desired behavior or requirement. The new test should fail because the code to pass it has yet to be written. This failing test is the "red" portion of the cycle.

- Write Production Code to Pass Test: Next, write the minimum amount of implementation code required to make the failing test pass. At this stage, the test should go from red to green.

- Refactor Code While Ensuring Tests Pass: Finally, tidy up the code through refactoring while ensuring all tests continue to pass. Refactoring improves internal structure without changing external behavior.

TDD drives the entire development cycle, from requirements through design and coding, by continually repeating this red-green-refactor process in short, incremental iterations.
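To make the cycle concrete, here is a minimal sketch in Python using the standard-library unittest module, chosen only because it needs no installation; JUnit, NUnit, and the other frameworks mentioned later follow the same pattern. The leap_year function and its tests are a hypothetical example, not taken from any particular project.

```python
import unittest


# RED: these tests are written first. Before leap_year() exists,
# running them raises a NameError, so the test run is red.
class LeapYearTest(unittest.TestCase):
    def test_year_divisible_by_4_is_leap(self):
        self.assertTrue(leap_year(2024))

    def test_century_is_not_leap(self):
        self.assertFalse(leap_year(1900))

    def test_year_divisible_by_400_is_leap(self):
        self.assertTrue(leap_year(2000))


# GREEN: the simplest code that makes all three tests pass.
# (An earlier version could have been just `return year % 4 == 0`,
# which was enough until the century test was added.)
def leap_year(year: int) -> bool:
    if year % 400 == 0:
        return True
    if year % 100 == 0:
        return False
    return year % 4 == 0


# REFACTOR: with the tests green, the branches above could be condensed
# into a single boolean expression; rerunning the tests confirms that the
# behavior did not change.
```

Saved to a file, the tests can be run with `python -m unittest` followed by the file name.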

2. Types of Tests

TDD utilizes automated tests at multiple levels of granularity:

- Unit Tests: Low-level tests that focus on isolated, discrete software components and methods. Unit tests make up the majority of TDD tests and use stubs and drivers to simulate the systems they depend on.

- Integration Tests: Verify that component modules work together correctly across larger functional areas. They test integrated groups of components both internally and at their boundaries, using the real components rather than simulated ones.

- End-To-End Tests: Assess the fully integrated system from start to finish through its actual interfaces and data flow. These tests map to major application requirements and workflows.
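As a hedged illustration of the difference between the first two levels, the sketch below tests a hypothetical Greeter component twice: once as a unit test with a hand-written stub standing in for the data layer, and once as an integration test wired to a real, in-memory SQLite database through Python's built-in sqlite3 module. All class names are invented for the example. An end-to-end test would instead drive the running application through its user-facing interface and is not shown.

```python
import sqlite3
import unittest


class SqliteUserRepo:
    """Hypothetical data-access component backed by a real SQLite database."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.conn.execute("CREATE TABLE IF NOT EXISTS users (name TEXT)")

    def add(self, name: str) -> None:
        self.conn.execute("INSERT INTO users (name) VALUES (?)", (name,))

    def count(self) -> int:
        return self.conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]


class Greeter:
    """Hypothetical component under test; works with any object that has count()."""

    def __init__(self, repo):
        self.repo = repo

    def banner(self) -> str:
        return f"{self.repo.count()} registered users"


class StubRepo:
    """Stub with a canned answer, so the unit test needs no database at all."""

    def count(self) -> int:
        return 3


class GreeterUnitTest(unittest.TestCase):
    def test_banner_uses_repo_count(self):
        self.assertEqual(Greeter(StubRepo()).banner(), "3 registered users")


class GreeterIntegrationTest(unittest.TestCase):
    def test_banner_with_real_sqlite_repo(self):
        conn = sqlite3.connect(":memory:")  # real SQLite engine, kept in memory
        self.addCleanup(conn.close)
        repo = SqliteUserRepo(conn)
        repo.add("ada")
        repo.add("linus")
        self.assertEqual(Greeter(repo).banner(), "2 registered users")
```

The unit test runs in microseconds and fails only if Greeter itself is wrong; the integration test is slower but also catches mistakes in the SQL and in how the two components are wired together.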

3. Anatomy of a Good Unit Test

Effective TDD relies on well-structured unit tests that follow certain core principles and patterns:

Follow FIRST Principles: Unit tests should exhibit the FIRST properties:

- Fast: Tests should run quickly to support frequent, short TDD iterations

- Independent: Test cases should not depend on each other or test order

- Repeatable: Identical outcomes when run in any environment

- Self-Validating: Tests pass or fail clearly without manual inspection.

- Timely: Written just before the production code that makes them pass

Keep them small and focused: Each test case must cover a single use case or requirement following the single responsibility principle.

Structure with Arrange, Act, Assert: Split unit test code into three sections:

- Arrange: Set up the required preconditions and inputs

- Act: Execute the object/method under test

- Assert: Verify expected results and postconditions

Applying the FIRST principles and the Arrange-Act-Assert structure to narrowly focused test cases yields fast, reliable tests that let development advance in small increments at an efficient, sustainable pace, for beginners and experts alike.
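A minimal sketch of one test class that applies these ideas, assuming a hypothetical ShoppingCart class that stores prices in integer cents. setUp builds a fresh cart before every test, which keeps the tests independent of each other and of run order, and each test body is split into Arrange, Act, and Assert sections.

```python
import unittest


class ShoppingCart:
    """Hypothetical class under test; prices are integer cents to avoid float rounding."""

    def __init__(self) -> None:
        self._lines = []  # list of (unit price in cents, quantity) pairs

    def add(self, price_cents: int, qty: int = 1) -> None:
        self._lines.append((price_cents, qty))

    def total_cents(self) -> int:
        return sum(price * qty for price, qty in self._lines)


class ShoppingCartTest(unittest.TestCase):
    def setUp(self):
        # Independent: every test starts from a brand-new cart.
        self.cart = ShoppingCart()

    def test_total_sums_price_times_quantity(self):
        # Arrange
        self.cart.add(250, qty=2)
        self.cart.add(100)
        # Act
        total = self.cart.total_cents()
        # Assert
        self.assertEqual(total, 600)

    def test_empty_cart_total_is_zero(self):
        # Arrange: nothing beyond setUp
        # Act
        total = self.cart.total_cents()
        # Assert
        self.assertEqual(total, 0)
```

Each test checks one behavior, needs no manual inspection, and touches no files or network, so it stays fast and repeatable.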

Setting Up a TDD Environment

Choose Testing Frameworks and Assertion Libraries

To reap the benefits of TDD, you need a testing framework and an assertion library for your language and environment. For Java, JUnit and TestNG organize and run test cases, while Hamcrest and AssertJ provide assertions. In .NET, MSTest, NUnit, or xUnit combined with FluentAssertions offer full testing capabilities. Most frameworks also support data-driven (parameterized) tests, which run the same test logic against many sets of inputs.
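Python's unittest has no parameterized-test decorator, but its subTest context manager gives a similar data-driven style, comparable in spirit to JUnit 5's @ParameterizedTest. In this sketch, is_valid_username and its 3-to-12-character rule are hypothetical; the point is that one test method checks a whole table of inputs and reports each failing case separately.

```python
import unittest


def is_valid_username(name: str) -> bool:
    """Hypothetical rule: 3 to 12 characters, letters, digits, or underscores only."""
    return 3 <= len(name) <= 12 and all(c.isalnum() or c == "_" for c in name)


class UsernameTest(unittest.TestCase):
    # Each row is one data-driven case: (input, expected result).
    CASES = [
        ("alice", True),
        ("bob_99", True),
        ("al", False),        # too short
        ("a" * 13, False),    # too long
        ("bad name", False),  # space is not allowed
        ("", False),          # empty input
    ]

    def test_username_rules(self):
        for name, expected in self.CASES:
            # subTest records each failing row separately instead of
            # stopping the whole test at the first failure.
            with self.subTest(name=name):
                self.assertEqual(is_valid_username(name), expected)
```

Adding a new case is a one-line change to the table, which keeps edge cases cheap to cover.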

Configure Build Automation

Automating your build pipeline is critical to running tests efficiently and getting rapid feedback. Build tools like Maven, Gradle, MSBuild, Make, Ant, or NAnt can compile code, execute test suites, and package artifacts with every code change. Continuous integration servers such as Jenkins, Travis CI, CircleCI, or TeamCity then run this pipeline automatically whenever code is pushed.

Integrate With the Version Control System

Use version control systems like Git, SVN, or Mercurial to manage code and enable collaboration. VCS integration surfaces code changes, test metrics, and failures to the team, tracks increments, and supports branching per feature. Version test code in lockstep with the production code it exercises. Implement pull request workflows that require passing tests, peer review, and integration checks before merging.

TDD Process, Patterns, and Best Practices

1. When to Write Tests

TDD calls for writing tests before production code, but determining what precisely to test can be challenging for beginners. Focus on testing essential application requirements early, one logical use case at a time. Define inputs and expected outputs that map to specific requirements. As understanding grows, write tests for positive and negative scenarios, edge cases, exceptions, and standards compliance. Let tests reveal and drive design rather than over-planning. Refactor tests along with code while preserving behavior. Expand tests progressively as incremental development unfolds.
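A sketch of how the tests for one small requirement might grow from the happy path to edge cases and error handling. The parse_percentage function and its rules (a trailing % sign, values from 0 to 100) are assumptions made for the example.

```python
import unittest


def parse_percentage(text: str) -> float:
    """Hypothetical function: "42%" -> 0.42; rejects values outside 0-100."""
    stripped = text.strip()
    if not stripped.endswith("%"):
        raise ValueError(f"missing % sign: {text!r}")
    value = float(stripped[:-1])  # float() itself raises ValueError for non-numbers
    if not 0 <= value <= 100:
        raise ValueError(f"out of range: {text!r}")
    return value / 100


class ParsePercentageTest(unittest.TestCase):
    # 1. Happy path: the core requirement, written first.
    def test_parses_whole_percentage(self):
        self.assertAlmostEqual(parse_percentage("42%"), 0.42)

    # 2. Edge cases: boundaries and surrounding whitespace.
    def test_boundaries_are_accepted(self):
        self.assertEqual(parse_percentage("0%"), 0.0)
        self.assertEqual(parse_percentage("100%"), 1.0)

    def test_surrounding_whitespace_is_ignored(self):
        self.assertAlmostEqual(parse_percentage(" 7% "), 0.07)

    # 3. Negative scenarios and exceptions.
    def test_missing_percent_sign_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_percentage("42")

    def test_out_of_range_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_percentage("101%")

    def test_non_numeric_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_percentage("abc%")
```

In a real TDD session each of these tests would be added one at a time, with the implementation growing just enough to make the newest test pass.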

2. Role of Design in TDD

TDD does not eliminate the need for design; it shifts design decisions into an incremental evolution guided by rapid feedback cycles. Follow a "just-in-time" minimal design approach, delivering the simplest solution that satisfies the current test case. Larger requirements are decomposed into smaller testable chunks developed iteratively. Resist over-engineering upfront, since future failing tests will guide the changes that are actually required. Continual refactoring improves the design over time and keeps it agile. External design frameworks can provide boundaries, but concrete implementations should be deferred until failing tests call for them.
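The sketch below illustrates the "simplest solution that passes" idea with a hypothetical shipping_cost function. Version 1 just returns a constant, which is enough for the first test; a second test for a heavier parcel fails against it and forces a real formula in version 2. The pricing rule (500 cents base plus 200 cents per kilogram above 1 kg) is invented for illustration, and both versions are kept side by side only so they can be compared.

```python
import unittest


# Step 1: the only test so far asks for the cost of a 1 kg parcel.
# The simplest passing code ("fake it") returns the expected constant.
def shipping_cost_v1(weight_kg: float) -> int:
    return 500


# Step 2: a new test for a 3 kg parcel fails against v1, which forces a
# general formula. Hypothetical rule: 500 cents base + 200 cents per kg above 1 kg.
def shipping_cost_v2(weight_kg: float) -> int:
    extra_kg = max(0.0, weight_kg - 1)
    return 500 + round(200 * extra_kg)


class ShippingCostTest(unittest.TestCase):
    # Point the tests at the current version of the function.
    cost = staticmethod(shipping_cost_v2)

    def test_small_parcel_costs_base_rate(self):
        self.assertEqual(self.cost(1), 500)

    def test_heavy_parcel_adds_per_kg_charge(self):
        self.assertEqual(self.cost(3), 900)
```

The design grows only as far as the tests demand: nothing here anticipates weight bands or destinations until a failing test asks for them.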

3. Strategies for Refactoring Cycles

Frequent, incremental refactoring is essential to prevent technical debt from accumulating and to keep applications testable and flexible. Look for duplication to consolidate, excessive complexity to simplify, inappropriate dependencies to replace with loose coupling, poor names and comments to clarify, violations of best practices, inefficient logic and algorithms to streamline, and messy code to clean up. Fold refactoring into each TDD cycle by cleaning up the areas surrounding new development. Leverage automated analysis tools like SonarQube to systematically surface the areas needing the most attention, and schedule time to pay back technical debt.
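As an example of consolidating duplication under the protection of tests, this sketch assumes two hypothetical functions, invoice_line and refund_line, that originally repeated the same dollars-and-cents formatting inline. After the shared logic is extracted into format_money, the same tests, written before the refactor, must still pass. The code assumes non-negative amounts in cents.

```python
import unittest


# Before the refactor, both functions below repeated the expression
#   f"${cents // 100}.{cents % 100:02d}"
# inline. It has now been extracted into a single helper.
def format_money(cents: int) -> str:
    """Format a non-negative amount in cents as dollars, e.g. 199 -> "$1.99"."""
    return f"${cents // 100}.{cents % 100:02d}"


def invoice_line(item: str, cents: int) -> str:
    return f"{item}: {format_money(cents)}"


def refund_line(item: str, cents: int) -> str:
    return f"REFUND {item}: {format_money(cents)}"


class LineFormattingTest(unittest.TestCase):
    # These tests predate the refactor; staying green proves behavior is unchanged.
    def test_invoice_line(self):
        self.assertEqual(invoice_line("pen", 199), "pen: $1.99")

    def test_refund_line(self):
        self.assertEqual(refund_line("pen", 1050), "REFUND pen: $10.50")

    def test_small_and_zero_amounts(self):
        self.assertEqual(invoice_line("clip", 5), "clip: $0.05")
        self.assertEqual(invoice_line("free", 0), "free: $0.00")
```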

4. Mocking Dependencies

External dependencies like databases, network calls, or heavy computation make tests brittle, slow, order-dependent, and hard to isolate. Test doubles such as mocks, stubs, fakes, and spies stand in for these dependencies, supplying indirect inputs to the code under test and capturing its indirect outputs for verification. Configurable canned answers make tests repeatable and portable, and isolating external variability makes test failures easier to diagnose. But balance mocking against integration tests that verify end-to-end behavior: mock too much, and unit tests lose their meaning. Use test hooks and dependency injection to abstract dependencies so they can be substituted in tests.
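A minimal sketch of dependency injection plus a test double, using unittest.mock from Python's standard library. The FrostAlert class and its weather client's current_temp method are hypothetical. The first test uses the Mock in both roles described above: as a stub that returns a canned temperature, and as a mock that verifies how the dependency was called.

```python
import unittest
from unittest.mock import Mock


class FrostAlert:
    """Hypothetical class; the weather client is injected through the constructor."""

    def __init__(self, weather_client, threshold_c: float = 0.0):
        self.weather_client = weather_client
        self.threshold_c = threshold_c

    def should_warn(self, city: str) -> bool:
        return self.weather_client.current_temp(city) <= self.threshold_c


class FrostAlertTest(unittest.TestCase):
    def test_warns_when_temperature_at_or_below_threshold(self):
        client = Mock()
        client.current_temp.return_value = -3.5  # canned answer (stub role)

        self.assertTrue(FrostAlert(client).should_warn("Oslo"))
        # Mock role: verify the indirect output, i.e. how the dependency was called.
        client.current_temp.assert_called_once_with("Oslo")

    def test_no_warning_when_warm(self):
        client = Mock()
        client.current_temp.return_value = 18.0
        self.assertFalse(FrostAlert(client).should_warn("Lisbon"))
```

Because a plain Mock accepts any attribute, a misspelled method name would silently succeed; Mock(spec=...) or unittest.mock.create_autospec restricts the double to the methods of the real class.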

5. Legacy Code and Technical Debt

Code that lacks tests is a challenge for TDD adoption and often represents technical debt accumulated from poor practices. Begin by adding tests at module boundaries using existing interfaces. Write characterization tests that capture existing behavior so future changes can be made safely. Prioritize functionality that is critical to customers or about to change. As tests grow, incrementally refactor modules into more testable units. Aim to repay test debt before adding new features; otherwise, severe regressions may result. Budget time explicitly to build test coverage of legacy systems. Celebrate new tests that expose and help fix old design flaws. Over time, use the growing test suite to modernize the system.
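Characterization tests record what legacy code currently does before anyone changes it. In this sketch, legacy_discount stands in for an undocumented legacy function; both it and its discount rules are hypothetical. Note that the tests pin down a surprising behavior (case-sensitive customer types) rather than quietly "fixing" it.

```python
import unittest


def legacy_discount(total: float, customer_type: str) -> float:
    """Hypothetical stand-in for an untested legacy function with undocumented rules."""
    if customer_type == "gold":
        return round(total * 0.9, 2)
    if customer_type == "silver" and total > 100:
        return round(total * 0.95, 2)
    return total


class LegacyDiscountCharacterizationTest(unittest.TestCase):
    """Pins down what the code does today, not what it 'should' do."""

    def test_gold_gets_ten_percent(self):
        self.assertEqual(legacy_discount(200.0, "gold"), 180.0)

    def test_silver_discount_only_above_100(self):
        self.assertEqual(legacy_discount(100.0, "silver"), 100.0)
        self.assertEqual(legacy_discount(200.0, "silver"), 190.0)

    def test_customer_type_is_case_sensitive(self):
        # Surprising but current behavior: "Gold" gets no discount.
        # Recorded as-is; changing it should be a deliberate, separate decision.
        self.assertEqual(legacy_discount(200.0, "Gold"), 200.0)
```

With these tests in place, the function can be restructured into more testable pieces, and any accidental change in its observable behavior shows up as a failing test.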

Common Challenges and Solutions

Getting stuck on implementation details

Beginners often struggle to know where to start testing and tend to specify implementation details prematurely. To avoid analysis paralysis:

- Focus on one logical requirement or user story at a time, based on priorities.

- Break larger goals down into smaller testable behaviors that drive incremental development.

- Write tests against an ideal interface first, deferring low-level class details and letting the tests guide how the design evolves.

- Build up both tests and implementation incrementally, in small pieces.

Brittle and slow tests

Hard-coded test data, dependencies across test cases, inconsistent component initialization, verbose test reporting, unoptimized queries, and unnecessary logic can all undermine test effectiveness. Isolate test cases from each other and from the test runner using setup/teardown methods. Follow the FIRST principles, emphasizing speed through concise, focused tests. Inject configurable test data for flexibility. Mock out ancillary logic once, in shared setup, for tests that rely on the same behavior. Regularly profile and tune lengthy test runs that hurt productivity, and balance fast unit tests against integration tests that check end-to-end behavior.
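The following sketch shows setup/teardown isolation and injected test data with Python's unittest. The load_port function and its JSON config format are hypothetical. Each test writes its own config into a fresh temporary directory created in setUp and removed in tearDown, so no test depends on a shared, hard-coded fixture file or on files left behind by another test.

```python
import json
import os
import shutil
import tempfile
import unittest


def load_port(config_path: str) -> int:
    """Hypothetical function under test: reads "port" from a JSON config file."""
    with open(config_path, encoding="utf-8") as f:
        return int(json.load(f)["port"])


class LoadPortTest(unittest.TestCase):
    def setUp(self):
        # A fresh scratch directory per test keeps tests isolated and order-independent.
        self.tmpdir = tempfile.mkdtemp()

    def tearDown(self):
        shutil.rmtree(self.tmpdir)

    def write_config(self, data: dict) -> str:
        # Test data is injected per test instead of read from a shared fixture file.
        path = os.path.join(self.tmpdir, "config.json")
        with open(path, "w", encoding="utf-8") as f:
            json.dump(data, f)
        return path

    def test_reads_port(self):
        self.assertEqual(load_port(self.write_config({"port": 8080})), 8080)

    def test_port_given_as_string_is_converted(self):
        self.assertEqual(load_port(self.write_config({"port": "9000"})), 9000)

    def test_missing_port_raises_key_error(self):
        with self.assertRaises(KeyError):
            load_port(self.write_config({}))
```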

Defining test scope

New teams often struggle to decide how much to test as requirements evolve. Start by testing key happy paths and gain confidence before expanding the scope. Prioritize testing security, compliance, reliability, and central business logic based on risk. As understanding improves, add variation through negative test cases. Use code coverage reports to find implementation logic that is not yet exercised, and cover it through different inputs and usage patterns. Expand end-to-end workflow testing through UI scenarios as well as lower-level component behavior, and review tests before marking development complete.

When to stop testing

For each increment, strive for "good enough" coverage that confirms core behavior works as expected and guards against future regressions. But recognize when further testing offers diminishing returns relative to the effort expended. Weigh relative risk, testing costs, and project delays when deciding how rigorous to be. Use risk checklists tailored to the project type and the team's experience to determine the critical test coverage required. Extend testing progressively as uncertainties emerge. Rely on production telemetry to validate quality after deployment. Prioritize closing gaps over achieving perfect assurance upfront, and set realistic testing goals rather than aiming for perfection.

Conclusion

In summary, test-driven development offers transformative benefits over testing only at the end, but it requires new practices focused on incremental design and evolving tests. By treating automated tests as requirements, design as a fluid response to feedback, coding as the work of passing well-defined tests, and refactoring as constant, virtuous improvement, teams can achieve dramatic gains in code quality, reliability, and agility. Fail early to fear less.

Through ever-expanding tests, future code changes become safe, defects identifiable, and designs flexible enough to enable continuous delivery of business value. Test today to code with confidence tomorrow, using a profoundly effective development discipline that is now accessible to every developer who aspires to do better.

About the Creator

Miles Brown

I'm Miles Brown, a Programming & Technology professional with expertise in using various technologies for software & web development @Positiwise Software Pvt Ltd, a leading technology solution for Software Development & IT Outsourcing.
