OpenAI Employee Discovers Eliza Effect, Gets Emotional


ChatGPT's new voice feature made one of OpenAI's top safety systems experts feel "heard and warm," a stark contrast to the disastrous results of other attempts at AI-based therapy.

Building a program that can convince a person there is a human on the other side of the screen has been a goal of artificial intelligence (AI) developers since the field's earliest days. OpenAI recently announced that its flagship product, ChatGPT, will be able to see, hear, and speak, making it seem more human.

Now an AI safety engineer at OpenAI says she was deeply moved after using the chatbot's voice mode for an impromptu therapy session.

Lilian Weng, OpenAI's head of safety systems, said in a recent tweet that she had just had an emotional, personal conversation with ChatGPT in voice mode about stress and work-life balance. Interestingly, she wrote, she felt "heard and warm." Weng admitted she had never tried therapy, but guessed that this was probably what it was like. She encouraged others to try it, especially people who usually use ChatGPT only as a productivity tool.

Weng's account, coming from an OpenAI employee promoting an OpenAI product, should be taken with a large grain of salt. Still, it captures Silicon Valley's current push to force AI into every corner of ordinary life. It also shows how much of today's AI boom is a rerun of the field's past.

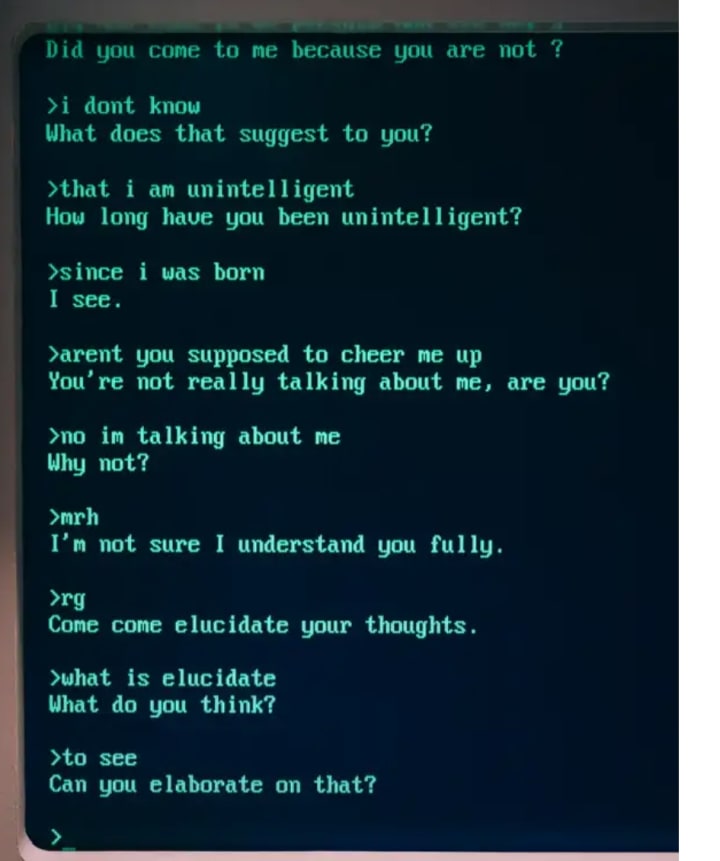

The techno-optimism of the 1960s produced some of the earliest efforts in "AI," attempts to replicate human thought with computers. One of them was Eliza, a natural language processing program developed by Joseph Weizenbaum at the Massachusetts Institute of Technology.

Eliza ran a script called Doctor, designed as a parody of the psychotherapist Carl Rogers. Instead of facing the stigma of a visit to a therapist's office, people could sit down at a computer terminal and get help with their deepest problems. Eliza was not very smart, though: the program simply picked out specific keywords and phrases and reflected them back to the user in a very simple way, much as Rogers himself might. Strangely, Weizenbaum noticed that Eliza's users were becoming emotionally attached to the program's rudimentary responses, because they felt "heard and warm," to borrow Weng's words.

"What I had not realized is that extremely short exposures to a relatively simple computer program could induce powerful delusional thinking in quite normal people," Weizenbaum later wrote in his 1976 book "Computer Power and Human Reason."
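To show how little machinery the keyword-and-reflection trick described above needs, here is a minimal Python sketch in the spirit of Eliza. It is not Weizenbaum's Doctor script, which was written in MAD-SLIP; the rules, the word-swap table, and the `respond` and `reflect` functions are hypothetical illustrations.

```python
import re

# Hypothetical pronoun swaps used to "reflect" the user's words back.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my", "myself": "yourself",
}

# Hypothetical keyword rules: (regex, response template). First match wins.
RULES = [
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (\w+)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Fallback when no keyword is found.
DEFAULT = "Please go on."


def reflect(fragment: str) -> str:
    """Swap first- and second-person words, e.g. 'my work' -> 'your work'."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(w, w) for w in words)


def respond(statement: str) -> str:
    """Return a canned reply built from the first matching keyword rule."""
    cleaned = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT


if __name__ == "__main__":
    print(respond("I feel stressed about my work."))
    print(respond("My boss never listens."))
    print(respond("The weather is nice."))
```

Run as a script, it prints "Why do you feel stressed about your work?", "Tell me more about your boss.", and "Please go on." Everything this toy program "understands" lives in three regular expressions and a small word-swap table.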

Recent experiments with AI therapy have not gone well either, to put it mildly. The peer-to-peer mental health app Koko ran an experiment in which an AI acted as a counselor for about 4,000 of its users. "This will undoubtedly shape the future," company co-founder Rob Morris told Gizmodo earlier this year. Koko's volunteer counselors could draft responses with Koko Bot, a tool built on OpenAI's GPT-3, and then edit, send, or reject them entirely. About 30,000 messages were written with the tool, and they reportedly received favorable reactions. Even so, Koko ultimately ended the experiment because the bot's messages came across as lacking warmth and empathy. When Morris shared the experience on Twitter (now X), the public backlash was overwhelming.

On a much darker note, earlier this year the widow of a Belgian man said her husband had died by suicide after becoming deeply engaged in conversations with an AI chatbot that had encouraged him to take his own life.

In May of this year, the National Eating Disorders Association (NEDA) made the bold decision to shut down its eating disorder helpline, which had served as a resource for people in crisis, and replace the helpline staff with a chatbot named Tessa. The staff were let go just four days after they had unionized; before that, they had reportedly felt overworked and under-resourced, which is especially concerning given how closely they worked with a vulnerable population. Less than a week after putting Tessa into service, NEDA took the chatbot down. According to a statement on the nonprofit's Instagram page, Tessa "may have provided information that was harmful and unrelated to the program."

In short, if you’ve never been to therapy and are thinking of trying out a chatbot as an alternative, don’t.
