M3GAN and OMNI-scient AI Predictions

As an AI-powered doll takes over the box office and social media, the idea of “technological singularity” feels all the more important.

Over the weekend, a film starring a blonde, impeccably dressed, out-for-blood robot swept the box office. “M3GAN” grossed over $30 million in its domestic opening weekend, behind only James Cameron’s highly anticipated “Avatar: The Way of Water.”

The film’s impact ranges from TikToks of moviegoers and professional dancers at PR events alike replicating M3GAN’s outlandish dance to hints of a merger between the two major horror production houses that co-produced it, Blumhouse Productions and Atomic Monster. The plot of M3GAN, in which a hastily programmed AI best friend takes its duty to its young charge too seriously, has even bigger implications: it makes us think about how artificial intelligence (AI) can go wrong.

“Technological singularity” is a significant term in futurism, describing the point at which AI’s understanding extends beyond that of humans. This point is often described as causing irrevocable damage to humankind, since humans would no longer be in control of the technology they created and enabled. With recent media such as M3GAN and Klara and the Sun, Kazuo Ishiguro’s popular novel whose protagonist is a solar-powered AF (Artificial Friend), the idea of singularity is bound to be on our minds and in online discourse more and more.

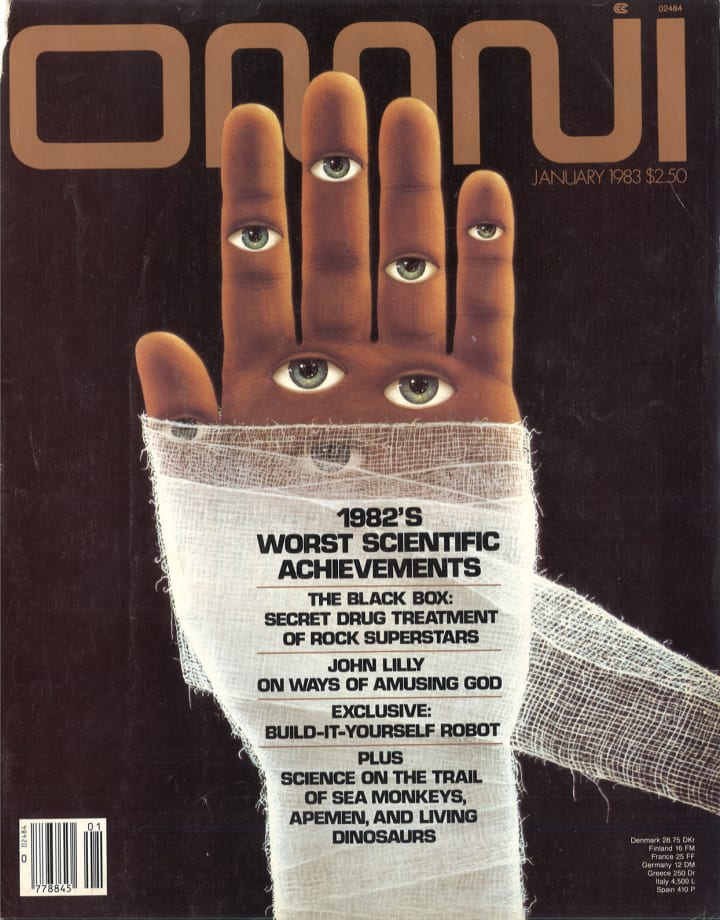

While the term was first used by John von Neumann (1903-1957), a scientist and member of the United States Atomic Energy Commission, it is often credited to science fiction author Vernor Vinge, who popularized it in his “First Word” column for the January 1983 issue of Omni. Vinge put a more positive spin on the idea, positing that progress is inevitable and that there is a world where humans and AI can coexist. Vinge’s essay is republished below; take a look if you, like me, are still thinking about M3GAN long after leaving the theater.

First Word by Vernor Vinge

“We are caterpillars, soon to be butterflies, and, when we look to the stars, we take that vast silence as evidence of other races transformed.”

Social and technological prediction is very popular. This is reasonable. It seems that change is the only constant in our lives; we want to be prepared.

Yet there is a stone wall set across any clear view of our future, and it’s not very far down the road. Something drastic happens to a species when it reaches our stage of evolutionary development — at least that’s one explanation for why the universe seems so empty of other intelligence. Physical catastrophe (nuclear war, biological pestilence, Malthusian doom) could account for this emptiness, but nothing makes the future of any species so unknowable as technical progress itself.

A favorite game of futurists is to plot technological performance — computer speed, say — against time. Such trend curves climb ever more steeply. Extrapolated 30 or 40 years, they are so high and steep that even the most naive futurist discounts their accuracy. Some who talk about that era predict a leveling off of progress. After all, saturation effects are observed in other growth processes.

There is an important reason why this process won’t level off. We are at the point of accelerating the evolution of intelligence itself. The exact means of accomplishing this phenomenon cannot yet be predicted — and is not important. Whether our work is cast in silicon or DNA will have little effect on the ultimate results. The evolution of human intelligence took millions of years. We will devise an equivalent advance in a fraction of that time. We will soon create intelligences greater than our own.

When this happens, human history will have reached a kind of singularity, an intellectual transition as impenetrable as the knotted space-time at the center of a black hole, and the world will pass far beyond our understanding. This singularity, I believe, already haunts a number of science-fiction writers. It makes realistic extrapolations to an interstellar future impossible. To write a story set more than a century hence, one needs a nuclear war in between — to [stop] progress enough so that the world remains intelligible.

A Cro-Magnon man brought into our present could eventually understand the changes of the last 35,000 years. The difference between contemporary man and the creatures who live beyond the singularity is incomparably more profound: Even if we could visit their era, most of what we would see would be forever incomprehensible.

This is a vision most people reserve for the far future, perhaps after another million years of normal evolution. Surprise — it is a future that will happen as soon as superhuman intelligences are created. And given our progress in computer and biological sciences, that should be between 20 and 100 years from now.

The passage into this great singularity should not be abrupt. So far there has been little progress in automating the distinct prerogatives of the human mind. During the next 20 years this will change. Programs that can perform symbolic as well as numeric processing will become common. The student’s hand computer will become competent enough to solve most problems in our present undergraduate courses. Universities will scramble to provide curricula that will train students to think with these new tools. Graduates will need an ever-deeper understanding of their majors to compete successfully for technical jobs. As the years pass, we will find that a rapidly diminishing number of humans will be necessary to keep large systems running.

It’s missing the point to call this process “technological unemployment.” Even before the last jobs are automated, our successors — the more-than-human intelligences we are creating — will already be onstage. Whatever paradise the world may be, man will be the leading actor no more.

For some, this scenario may be even less desirable, indeed more cataclysmic, than having a nice atomic war, after which we might proceed with human business as usual.

But if the singularity is properly considered, it need not be depressing. Marvin Minsky, of Massachusetts Institute of Technology, has suggested we regard these new beings as our children — children who will do still better than their parents did.

Sometimes this point of view is enough for me; often it is not. I want man to be a continuing participant. And perhaps there is a way. The machine intelligences need not be independent of our own. Even now, when we use personal computers, we are extending our memory and our ability to solve problems and dilemmas that confront us daily. In a sense we are augmenting our own intelligence. As we improve the man/computer interface, this amplification effect will increase. When the computer half of the partnership becomes intelligent, it might still be part of an entity that includes us. The singularity then becomes the result of a massive amplification of human intelligence rather than simply its replacement by machines.

Falling into the singularity is admittedly a frightening thing, but now we might regard ourselves as caterpillars who will soon be butterflies and, when we look to the stars, take that vast silence as evidence of other races already transformed.

---

Vernor Vinge is the author of several science-fiction novels, the most recent being True Names. An associate professor of math sciences at San Diego State University, he served as a panelist at the Omni Symposium on Artificial Intelligence and Science Fiction, held last summer.

About the Creator

OG Collection

Exploring the most significant and hidden stories of the 20th century through iconic magazines and the titan of publishing behind them.

Check out our AI OG sandbox - https://vocal.media/authors/og-ai
