When we need to cluster a data set, the first couple of algorithms we might look into are K means, DB scan, or hierarchical clustering algorithm. Those classic clustering algorithms always treat each data point as a dot. However, those data points usually have size or boundary(bounding box) in real life. Ignoring the edge of points might cause further bias. RVN algorithm is a method that considers points and the bounding box of each point.

Background

We were inspired by a business case of a furniture company. Our job is clustering pieces of furniture by lifestyle, such as working space or living space. Since each piece of furniture has a different shape and size and whether some pieces of furniture are overlapped are more critical than the distance between each other, we created the RVN algorithm that can consider the size of each point. We believe this algorithm can further be implemented in other domains, such as ecosystem and pixel clustering.

World Map Example — K means

When you need to cluster all countries on earth, first, you need each country’s coordinates (longitude and latitude).

Second, you can use K mean or other algorithms to tune the best number of clusters or find the best eps for DB scan. We will use K mean and silhouette score to find the best K.

According to silhouette score, we pick k=3.

It looks great! Right?

However, we can notice some bias for some countries, such as Russia.

You can see that Russia was clustered with other Asian countries. The reason is that the point representing Russia’s location is closer to other Asian countries. If we put this point more left, Russia will be clustered to the left group.

RVN Algorithm

Let’s introduce the basic logic of the RVN algorithm.

Basic logic

Data requirement: Upper bound and lower bound for each point

Initial

1. Initialize n clusters(The data size is n), each point is one cluster

2. Calculate the radius of each cluster (use upper bound and lower bound )

Iteration:

1. Check all the overlapping points. (overlap of range)

2. Group all overlapping points as the same cluster

3. Update centroid and radius of each cluster

Stopping criterion

Basic : Stop if there is no overlapping group

Stop by k : Set a K and stop the algorithm when total cluster is lower than K (k mean concept)

Other possible stopping criterion : percentage of all size, distance of nearest cluster

Let’s use five points to demonstrate this algorithm further.

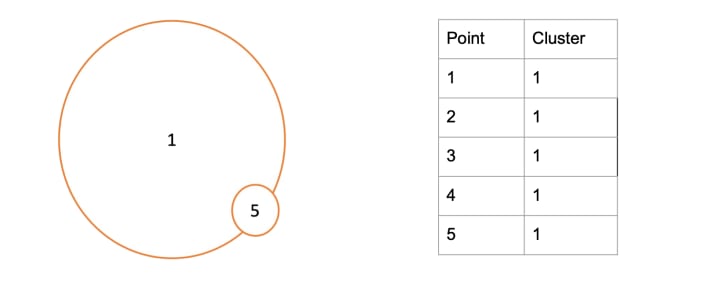

Step 1: Initial cluster

Step 2: Check point 1

Step 3: Update point 1 and 3 to cluster 1

Step 4: Check point 2, already updated

Step 5: Check point 3, update 4

Step 6: Check point 5, no overlapped point

Step 7: Update the centroid and boundary. End of first iteration

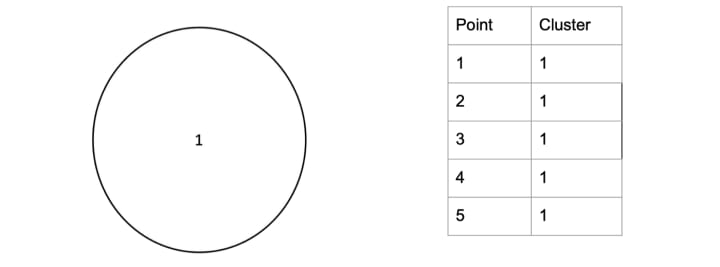

Step 8: Start the second iteration, check group 1 and update point 5 to point 1

Step 9: Check point 5, update nothing

Step 10: Update the centroid and boundary, end the second iteration

Groups Expanding Method

There is one inevitable situation, there is no overlapping point, but we still want to keep grouping points together.

If we stop the algorithm according to the basic rule, there might be too many groups.

We provide three possible solutions.

Naive: Gradually increase all radius by a constant number so the two closest groups will overlap each other (fast because all groups’ radius increase at the same time, but might cause bias)

Approximate: Group two nearest clusters together. (Slow but less bias, because other groups’ radius remain the same)

Other possible solutions: Increase radius by percentage, increase by stochastic number

RVN Algorithm — Parameters

In the RVN algorithm, some parameters will require tuning to find the optimal one.

Radius : If the data set is two-dimensional, there will be four candidate radius, which are x-upperbound_x, x-lowerbound_x, y-upperbound_y, and y-lowerbound_y. We sort candidates to pick the best one or choose by experience.

Expansion speed : How fast do the circles want to grow when there is no overlapped point.

Threshold of K : When the total cluster number is less than K, the algorithm stops. (only use for “stop by K logic”)

Find the best K

Same as K mean algorithm, if we want to find stop by K logic, we need to find the best K. Usually, there are two ways to find the best K for any clustering method. Silhouette score and sum of square error (each point and group centroid). Since we use the bounding box instead of points, directly applying silhouette score and the sum of square error will cause bias.

Therefore, when calculating silhouette score and sum of square error, we can create four extra points (child point)for each point (mother point) and assign them the same group as the mother point. The coordinate of children’s points are (x, upper-bound y), (x, lower-bound y), (upper-bound x, y), and (lower-bound x, y).

Because children points and mother points together can better represent the size of each mother point, we can get a more unbiased K by silhouette score and sum of square error.

Before diving into the case example, let us go back to the world map again.

World Map Example — RVN

Other than the longitude and latitude of each country, we also need upper bound and lower bound.

We skip the K tuning part in this example because we only want to show

the different results.

Let’s run the algorithm.

Let’s have a closer look on Russia.

We can see that Russia now is clustered with all the surrounding countries.

Furniture Example

Now, we have a floor plan, and we will use RVN to cluster all pieces of furniture.

Let’s find the optimal K

Result

We can see that although some points are very close to larger groups because their range does not overlap with the larger group, they were considered different settings.

Python Implementation

Please check the following site the see the implementation of the NVR algorithm

Challenge

Data collection : Data collection is the most troubling part of this algorithm because you need to collect the location of one point and the bounding box.

High dimensional data : Ideally, this algorithm can implement on high dimension space. However, we have not tried to apply it to data with more than three dimensions.

Circle assumption : RVN has an assumption that groups expand as a circle, meaning that if a group grow, it will extend the same size in all direction. However, this assumption could be wrong in some situations, as in the following picture.

Intuitively, this data should be clustered into 11 groups.

However, because the scale of the x-axis and the y-axis are so different (y range is from 0 to 1000, x range is from 0 to 50), the result of the RVN algorithm could be very counterintuitive.

One possible solution we have is standardizing the x range or y range. This action can guarantee one dimension will expand faster than the other.

Speed Performance : Different ways to merge groups will lead to the different speeds of the algorithm. We currently do not have an optimal way.

Overall Performance : This algorithm works better than DB scan and K means in the floor plan circumstance. However, we currently do not know if RVN will perform better in other situations such as biomes clustering.

Future

This is a brand new algorithm inspired by the furniture industry’s floor plan. I sincerely hope we can keep developing this special method; therefore, please do not hesitate to contact me if anyone has any questions regarding improvement or implementation.

This algorithm was created by Ray, Vamshi, and Noah

About the Creator

Ray Hsu

Came from Taiwan.

Live in Michigan.

About to move to Stamford.

Love traveling.

What to show the world my stories.

Comments

There are no comments for this story

Be the first to respond and start the conversation.