Evolution of Computers

A journey from the father of the computer to today's advanced era, tracing the evolution from early to advanced computers

Charles Babbage

Charles Babbage was a British mathematician and inventor who lived from 1791 to 1871. He is widely considered to be the father of computing, due to his work on the design of mechanical computers during the 19th century.

Babbage's most famous invention was the Analytical Engine, which was a mechanical computer that was capable of performing any calculation that could be done by a human using pen and paper. Although the Analytical Engine was never fully built during Babbage's lifetime, his designs and ideas were highly influential in the development of modern computing.

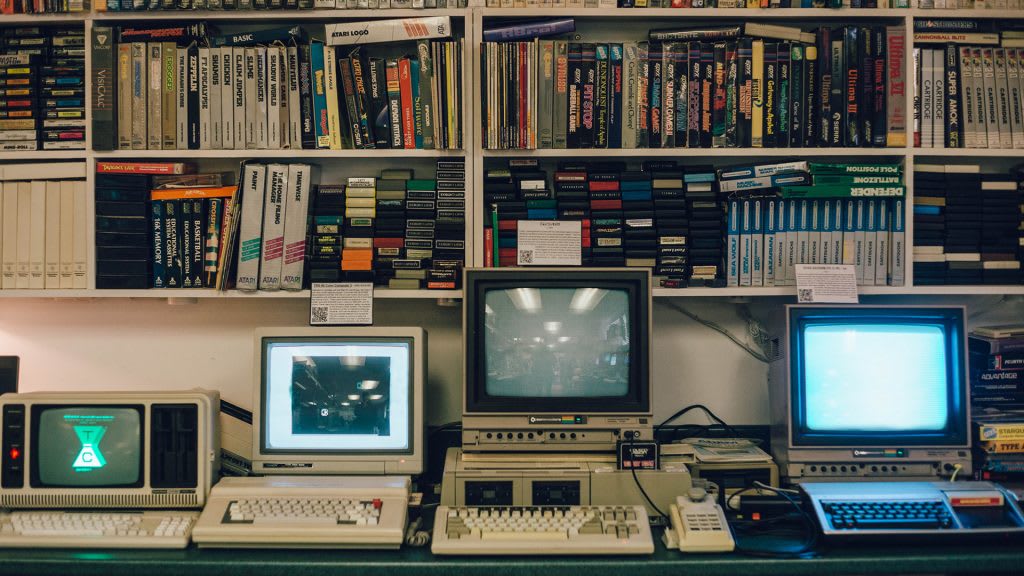

The evolution of personal computers began in the 1970s with the development of microprocessors and the introduction of the first commercially successful personal computer, the Altair 8800. Throughout the 1980s and 1990s, personal computers became increasingly powerful and affordable, and they quickly became a ubiquitous part of modern life.

The introduction of graphical user interfaces, popularized by the Apple Macintosh in 1984, and the widespread adoption of the internet in the 1990s helped make personal computers even more useful and accessible to people around the world.

Today, personal computers continue to evolve and improve, with advances in hardware and software allowing for ever more powerful and versatile machines. From Babbage's mechanical designs to modern-day laptops and desktops, the evolution of personal computing has been a fascinating and transformative journey.

Charles Babbage is considered the father of the computer. The British mathematician and inventor is credited with conceiving the first general-purpose mechanical computer. He is best known for his design of the Analytical Engine, a programmable machine that could perform arithmetic and logical operations.

Babbage's work on the Analytical Engine laid the groundwork for the development of modern computers, and he is often referred to as the "father of computing." Although Babbage never completed the construction of the Analytical Engine, his designs and ideas have been influential in the development of computer technology.

Babbage's contributions to the field of computing include adopting punched cards, borrowed from the Jacquard loom, to feed instructions and data into a machine; separating memory (the "store") from the processing unit (the "mill"); and using mechanical components to perform calculations automatically. His work laid the foundation for modern computing and has influenced the design of computers and programming languages to this day.

In summary, Charles Babbage's work on the Analytical Engine and his contributions to the field of computing make him a key figure in the history of computers and technology.

The Early 1960s Era:

The early 1960s were a period of significant innovation in the computer industry, with several key developments shaping the evolution of computers over the next few decades. Here are some of the notable developments in computer technology from the early 1960s:

1. Mainframe computers: In the early 1960s, mainframe computers were the dominant type of computer used in business and government. These large, centralized computers were used for tasks like data processing and scientific calculations and were often shared among multiple users.

2. Transistors: The transistor, invented in 1947, had largely replaced the vacuum tube in new computer designs by the early 1960s, making machines smaller, faster, and more reliable. The integrated circuit, first demonstrated in 1958, placed multiple components on a single chip and set the stage for further miniaturization.

3. Time-sharing: Time-sharing was a new way of using computers that let multiple users work on a single computer at the same time. The machine switched rapidly among the users' jobs, giving each person the impression of a dedicated computer. This made it possible for more people to use computers for a wider range of tasks, and was a precursor to modern multi-user computing environments.

4. Programming languages: High-level programming languages spread rapidly in this period. FORTRAN (1957) and COBOL (1959) came into wide use, and BASIC followed in 1964. These languages made it easier for people without specialized technical knowledge to program computers, and helped expand the use of computers in business and education.

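5. Microprogramming: Microprogramming implements a computer's machine instructions as sequences of simpler internal steps stored in a control memory, which made it practical to build more complex and sophisticated instruction sets. IBM's System/360 family, announced in 1964, relied on it across most of its models to offer one instruction set on machines of very different cost and performance, and the approach became a key factor in the design of later generations of computers.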
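Time-sharing systems of the era used a variety of scheduling policies. As a minimal sketch, assuming a simple round-robin policy in which each job runs for a fixed time slice (the "quantum") before the computer moves on to the next user, the switching described in item 3 might look like this. The function name, user names, and time units are hypothetical.

```python
from collections import deque


def round_robin(jobs, quantum):
    """Simulate a time-shared CPU using round-robin scheduling.

    jobs: dict mapping a (hypothetical) user name to the time units
          that user's job still needs.
    quantum: maximum time units a job may run before the CPU switches.
    Returns the sequence of (user, units_run) slices, in execution order.
    """
    queue = deque(jobs.items())
    timeline = []
    while queue:
        user, remaining = queue.popleft()
        run = min(quantum, remaining)
        timeline.append((user, run))
        remaining -= run
        if remaining > 0:
            # Unfinished jobs go to the back of the line and wait their turn.
            queue.append((user, remaining))
    return timeline


if __name__ == "__main__":
    for user, units in round_robin({"alice": 5, "bob": 2, "carol": 4}, quantum=2):
        print(f"{user} runs for {units} unit(s)")
```

Because no job runs for more than one quantum at a time, every user sees regular progress, which is what created the impression of a dedicated machine.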

Evolution of Computers:

Over the next few decades, these developments in computer technology continued to evolve and improve, leading to the development of new types of computers like personal computers and supercomputers. Today, computers are used in almost every aspect of modern life, from communication and entertainment to scientific research and business operations. The evolution of computers over the past several decades has been a remarkable journey that has transformed the way we live and work.

The evolution of personal computers has been a fascinating journey spanning several decades. It began in the 1970s with the first personal computers, which were bulky and expensive and lacked the capabilities we take for granted today.

The 1980s saw IBM enter the market with the IBM PC (1981), which set the standard for personal computers. The 1990s brought about the rise of graphical user interfaces and the World Wide Web, which transformed personal computers into powerful tools for communication and information sharing.

The early 2000s saw laptops go mainstream and mobile devices emerge, allowing greater mobility and flexibility in how we use personal computers. In recent years, the rise of cloud computing, artificial intelligence, and the Internet of Things has further expanded what personal computers can do.

Overall, the evolution of personal computers has been marked by a constant drive for greater performance, smaller form factors, and increased functionality. As technology continues to evolve, we can expect personal computers to continue to play an essential role in our lives, both at work and at home.

The evolution of computers can be traced back to the early 19th century and mechanical calculating machines such as Charles Babbage's Difference Engine and Analytical Engine. These early machines were designed to perform mathematical calculations automatically and were the precursors to modern-day computers.
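The Difference Engine was designed around the method of finite differences, which lets a machine tabulate a polynomial using nothing but repeated addition. The Python sketch below illustrates that method only; the function name and the example polynomial are illustrative choices, not a reconstruction of Babbage's actual mechanism.

```python
def tabulate(initial_differences, steps):
    """Tabulate a polynomial using only addition (method of finite differences).

    initial_differences: [f(0), Δf(0), Δ²f(0), ...] for a polynomial whose
        highest-order difference is constant.
    steps: how many successive values f(0), f(1), ... to produce.
    """
    diffs = list(initial_differences)
    values = []
    for _ in range(steps):
        values.append(diffs[0])
        # Add each column's right-hand neighbour into it, left to right, so
        # every addition uses the neighbour's value from the previous step.
        for i in range(len(diffs) - 1):
            diffs[i] += diffs[i + 1]
    return values


if __name__ == "__main__":
    # Example polynomial (illustrative): f(x) = x**2 + x + 41.
    # f(0) = 41, first difference f(1) - f(0) = 2, second difference = 2.
    print(tabulate([41, 2, 2], 5))  # [41, 43, 47, 53, 61]
```

Once the starting values are set, no multiplication is ever needed, which is what made a purely mechanical adding machine sufficient for producing mathematical tables.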

Electronic computers arrived in the mid-20th century. ENIAC, completed in 1945 and used to perform calculations for the U.S. Army, is often cited as the first general-purpose electronic computer; it relied on vacuum tubes rather than transistors. The invention of the transistor in 1947 then made it possible to miniaturize electronic components.

Over the next few decades, computer technology advanced rapidly. The first commercial mainframe computers appeared in the 1950s, and the invention of the microprocessor in the early 1970s made it possible to build computers that were powerful, small, and affordable, paving the way for the first personal computers later that decade.

In the 1980s and 1990s, the personal computer market exploded, with companies like IBM, Apple, and Microsoft leading the way. The spread of the internet and the World Wide Web in the 1990s further transformed the computer industry, making it possible for people to connect and communicate with each other on a global scale.

Today, computer technology continues to evolve rapidly, with advances in artificial intelligence, quantum computing, and other fields pushing the boundaries of what is possible. From mechanical calculators to supercomputers and beyond, the evolution of computers has been a fascinating journey that has transformed the way we live, work, and communicate.

In recent years, computers have continued to evolve rapidly, with new technologies and innovations transforming the way we use and interact with these machines.

ADVANCED COMPUTERS:

Advanced computers refer to a range of cutting-edge technologies that are at the forefront of computer science and engineering. These technologies are designed to push the limits of what computers can do, and are often used in applications that require extreme processing power, speed, and accuracy. Here are some of the key areas of advanced computer technology:

1. Quantum computing: Quantum computers are a new type of computing technology that uses principles of quantum mechanics, such as superposition and entanglement, to tackle certain kinds of problems far faster than traditional computers can. While still in the early stages of development, quantum computers have the potential to revolutionize fields like cryptography, chemistry, materials science, and optimization.

2. Virtual and augmented reality: Virtual and augmented reality technologies have made it possible to create immersive digital environments that can be used for everything from gaming to training simulations. As these technologies continue to advance, they may also be used to create new forms of art, entertainment, and communication.

3. Blockchain: Blockchain technology is a type of distributed ledger that allows for secure, transparent transactions without the need for a centralized authority. Each block of records stores a cryptographic hash of the block before it, so tampering with an earlier entry breaks every link that follows. While most commonly associated with cryptocurrencies like Bitcoin, blockchain technology has the potential to be used for a wide range of applications, from supply chain management to voting systems.

4. Cloud computing: Cloud computing has transformed the way businesses and individuals store and access data. With cloud computing, users can store data and access applications from anywhere with an internet connection, making it easier to collaborate and work remotely.

5. Internet of Things (IoT): The Internet of Things is a network of connected devices that can communicate with each other and the internet. These devices can range from smart home appliances to wearable technology and even industrial equipment. The data generated by IoT devices can be used to optimize processes and make more informed decisions.

6. Edge computing: Edge computing is a type of computing that takes place closer to the source of data, rather than in a centralized data center. This can help reduce latency and improve the speed and reliability of applications, particularly those that rely on real-time data.

7. 5G networks: 5G networks are the next generation of cellular networks and offer faster speeds and lower latency than previous generations. This technology has the potential to enable new applications like autonomous vehicles, remote surgery, and smart cities.

8. Cybersecurity: With the increasing reliance on digital systems and the rise of cyber threats, cybersecurity has become a critical concern for individuals and organizations alike. Advances in cybersecurity technology, such as AI-powered threat detection and blockchain-based identity management, are helping to protect against these threats.

9. Sustainability: As computing technology becomes more pervasive, there is growing concern about the environmental impact of data centers and other computing infrastructure. To address this concern, companies are developing more sustainable computing technologies, such as low-power processors and renewable energy-powered data centers.

10. Artificial intelligence (AI) and machine learning: AI and machine learning are revolutionizing the way computers process and analyze data. These technologies are being used in everything from natural language processing to image recognition and autonomous systems.

11. High-performance computing (HPC): HPC refers to the use of supercomputers and other advanced computing systems to solve complex problems in fields like climate modeling, drug discovery, and materials science. These systems are designed to perform massive calculations and simulations at extremely high speeds.

12. Neuromorphic computing: Neuromorphic computing is an emerging field that aims to create computers that mimic the structure and function of the human brain. These systems could be used for tasks like natural language processing, image recognition, and robotics.
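The hash-linking idea behind the blockchain entry above can be sketched in a few lines. This is a minimal toy, assuming SHA-256 over a JSON encoding of each block; the function names and transactions are hypothetical, and it deliberately leaves out the consensus rules, digital signatures, and proof-of-work that real blockchains such as Bitcoin rely on to stop someone from simply recomputing every hash.

```python
import hashlib
import json


def block_hash(block):
    # Hash a canonical JSON encoding so identical contents give identical digests.
    encoded = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def add_block(chain, data):
    # The first block points at an all-zero placeholder hash.
    prev = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "data": data, "prev_hash": prev})


def is_valid(chain):
    # Every block must record the hash of the block immediately before it.
    for i in range(1, len(chain)):
        if chain[i]["prev_hash"] != block_hash(chain[i - 1]):
            return False
    return True


if __name__ == "__main__":
    ledger = []
    add_block(ledger, "genesis")
    add_block(ledger, "Alice pays Bob 5")
    add_block(ledger, "Bob pays Carol 2")
    print(is_valid(ledger))  # True
    ledger[1]["data"] = "Alice pays Bob 500"  # tamper with history
    print(is_valid(ledger))  # False: block 2 no longer matches block 1
```

Editing an old entry changes its hash, so the next block's stored `prev_hash` no longer matches; that mismatch is what makes tampering visible to anyone holding a copy of the ledger.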

CONCLUSION:

The evolution of computers over the years has been a fascinating journey that has transformed the way we live and work. From the early mainframe computers of the 1960s to the advanced computing technologies of today, computers have become an indispensable tool in almost every aspect of modern life. The development of new hardware and software technologies, such as microprocessors, programming languages, and artificial intelligence, has enabled computers to become faster, more powerful, and more versatile than ever before. As technology continues to advance, we can expect to see even more transformative changes that will further shape the future of computing.

Advanced computer technology has evolved rapidly over the years and continues to advance at a remarkable pace. With the rise of artificial intelligence, high-performance computing, quantum computing, neuromorphic computing, and edge computing, computers have become more powerful and versatile than ever before. In addition to these technologies, the development of the Internet of Things, cloud computing, blockchain, 3D printing, and augmented and virtual reality has opened up new possibilities for innovation and creativity. As these technologies continue to evolve, we can expect even more transformative changes in the way we live and work. Their potential applications are vast, and it is an exciting time to be part of the field of computer science and engineering.

About the Creator

Muhammad Rafey

Hi, this is Muhammad Rafey. I am an architect by profession with a strong knowledge of computers and their software. I like to write about what I know to inform the public, and I welcome readers' thoughts on my content.
