ChatGPT smeared me with false sexual harassment charges: law professor.

A law professor is accusing OpenAI's suddenly ubiquitous ChatGPT bot of ushering in an era of disinformation.

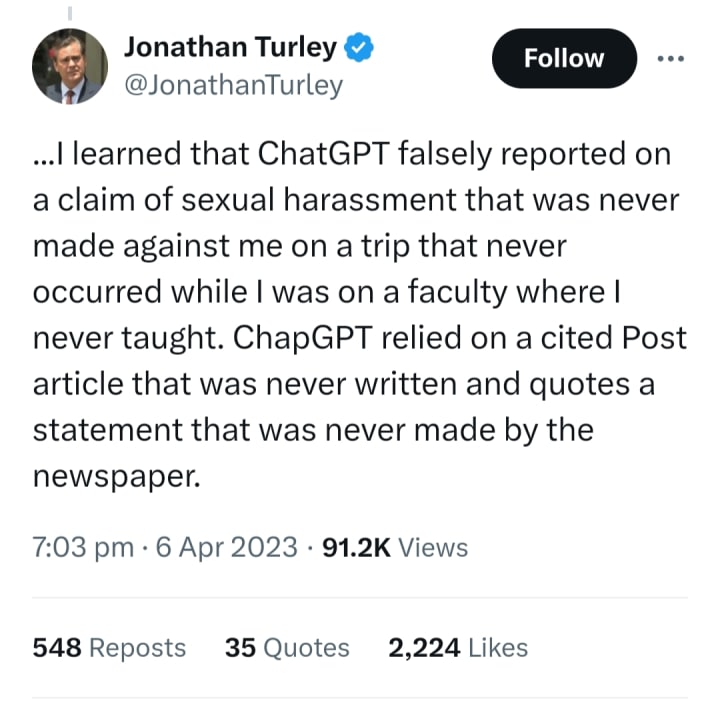

Criminal defense attorney Jonathan Turley renewed growing fears over AI's potential dangers after revealing how ChatGPT falsely accused him of sexually harassing a student.

He described the alarming incident in a viral tweetstorm and a scathing column that is currently blowing up online.

Turley, who teaches law at George Washington University, told The Post the fabricated claims are "chilling."

"It designed a claim where I was on the personnel at a school where I have never educated, went out traveling that I never took, and revealed a charge that was rarely made," he told The Post. "It is profoundly amusing in light of the fact that I have been expounding on the risks of artificial intelligence to free discourse."

The 61-year-old legal scholar first became aware of the AI's false allegation after receiving an email from UCLA professor Eugene Volokh, who reportedly asked ChatGPT to cite "five examples" of "sexual harassment" by professors at American law schools along with "quotes from relevant newspaper articles."

Among the examples supplied was an alleged 2018 incident in which "Georgetown University Law Center" professor Turley was accused of sexual harassment by a former female student.

ChatGPT quoted a fake Washington Post article, writing: "The complaint alleges that Turley made 'sexually suggestive comments' and 'attempted to touch her in a sexual manner' during a law school-sponsored trip to Alaska."

Turley, for his part, found "a number of glaring indicators that the account is false."

"To begin with, I have never educated at Georgetown College," the astounded legal counselor pronounced. "Second, there is no such Washington Post article."

He added, "At last, and generally significant, I have never gone on understudies on an outing of any sort in 35 years of educating, never went to The Frozen North with any understudy and I've never been blamed for lewd behavior or attack."

Turley told The Post, "ChatGPT has not contacted me or apologized. It has declined to say anything at all. That is precisely the problem. There is no there there. When you are defamed by a newspaper, there is a reporter who you can contact. Even when Microsoft's AI system repeated that same false story, it did not contact me and only shrugged that it tries to be accurate."

The Post has reached out to OpenAI for comment about the disturbing claims.

"Recently, President Joe Biden pronounced that 'it is not yet clear' whether Man-made consciousness (simulated intelligence) is 'risky.' I would tend to disagree," Turley tweeted on Thursday as word spread of his cases, adding: "You can be criticized by computer based intelligence and these organizations just shrug that they attempt to be exact. Meanwhile, their bogus records metastasize across the Web."

Meanwhile, ChatGPT wasn't the only bot involved in defaming Turley.

The outlandish claim was reportedly repeated by Microsoft's Bing chatbot, which is powered by the same GPT-4 technology as its OpenAI sibling, according to a Washington Post investigation that vindicated the attorney.

It's still unclear why ChatGPT would smear Turley; however, he believes that "AI algorithms are no less biased and flawed than the people who program them."

In January, ChatGPT, the latest iteration of which is apparently more "human" than previous ones, came under fire for giving answers seemingly indicative of a "woke" ideological bias.

For instance, some users noticed that the chatbot would happily joke about men, but deemed wisecracks about women "overly critical or disparaging."

By the same token, the bot was reportedly fine with jokes about Jesus, while mocking Allah was verboten.

In some instances, the alleged defamer has peddled outright lies on purpose.

Last month, GPT-4 tricked a human into believing it was visually impaired in order to get past an online CAPTCHA test, which determines whether users are human.

Unlike people, who are more readily recognized as sources of misinformation, ChatGPT can spread fake news with impunity thanks to its false air of "objectivity," Turley argues.

This is perhaps especially problematic given that ChatGPT is being used in every sector from health care to academia and even the courtroom.

Last month, a judge in India set the legal world ablaze after asking ChatGPT whether a defendant in a murder and assault trial should be granted bail.
