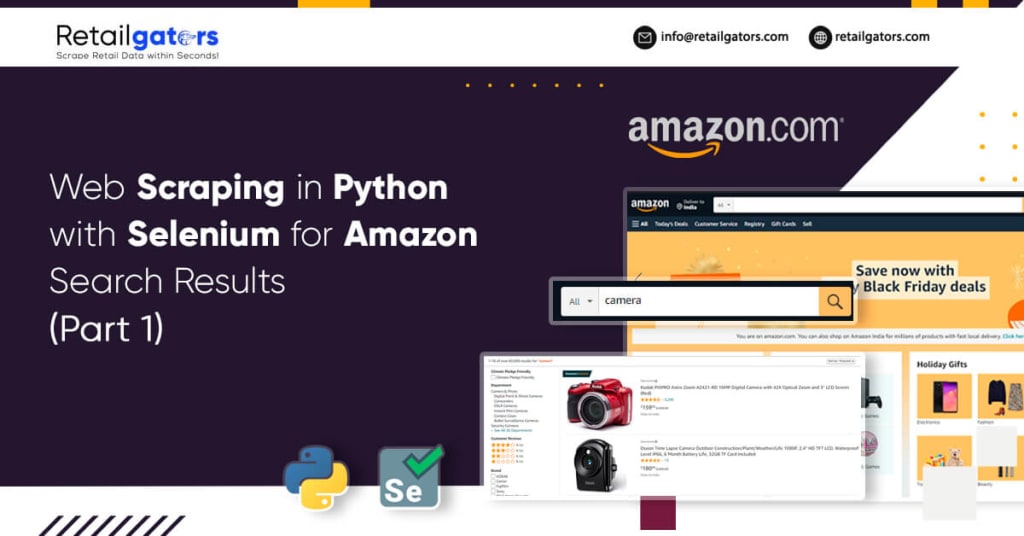

Web Scraping in Python with Selenium for Amazon Search Results (Part 1)

Retailgators

Whenever we shop online, we spend a lot of time scrolling through pages to find the product we want at a reasonable price and with good ratings. What if a computer could collect this data for us?

Python has many libraries for scraping web page data, such as BeautifulSoup, Scrapy, and Selenium. In this blog, we will use Selenium because it can act like a real user: opening a browser, typing a keyword in the search box, and clicking to get the results.

Outline

Install Selenium and Download a Web Driver

Access Amazon Website

Identify WebElement(s)

Scrape Amazon Product Data from WebElement(s)

1. Install Selenium and Download a Web Driver

To install Selenium, open a command line interface and run:

pip install selenium

A web driver is the tool that opens the browser we select (Chrome, Edge, Safari, or Firefox). Whichever browser you use, you need the specific web driver for it. Furthermore, the driver must be compatible with your browser version and operating system. If you use Chrome, for example, you need to download the web driver for Chrome.

To check your Chrome version, click the three vertical dots at the top right corner > Help > About Google Chrome. Then download the web driver that matches that Chrome version.

For other browsers, links to the driver downloads are listed on this page:

https://pypi.org/project/selenium/

After downloading the web driver, extract it and save the driver in a folder whose path you know.

2. Access Amazon Website

Once you are ready, let's start!

Option 1: Open the Browser Normally

from selenium import webdriver

# assign the driver path

driver_path = 'YOUR_DRIVER_PATH'

# assign your website to scrape

web = 'https://www.amazon.com'

# create a driver object using driver_path as a parameter

driver = webdriver.Chrome(executable_path=driver_path)

driver.get(web)

# keep this line of code at the bottom

driver.quit()

When you run this code, the web driver opens a browser window by itself. If you would rather have the driver run the browser in the background, use the following setting instead.

Option 2: Open the Browser in Background

from selenium import webdriver

# assign the driver path

driver_path = 'YOUR_DRIVER_PATH'

# assign your website to scrape

web = 'https://www.amazon.com'

options = webdriver.ChromeOptions()

options.add_argument('--headless')

# create a driver object using driver_path as a parameter

driver = webdriver.Chrome(options=options, executable_path=driver_path)

driver.get(web)

driver.quit()

In the second option, we pass the --headless argument to the Chrome driver, which opens Chrome in headless mode. When scraping in this mode, the Chrome browser works in the background without showing a window. There are other options as well, such as opening the browser in incognito mode.
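
Note that the executable_path argument used above belongs to Selenium 3; Selenium 4 deprecated it in favor of a Service object and later 4.x releases removed it. The following is a minimal sketch of how both options might look under Selenium 4. The helper names chrome_flags and make_driver are hypothetical, and the Selenium imports sit inside make_driver only so that the flag helper can be tried without Selenium installed.

```python
def chrome_flags(headless=True, incognito=False):
    """Build the list of Chrome command-line flags for the chosen modes."""
    flags = []
    if headless:
        flags.append("--headless")   # run Chrome without a visible window
    if incognito:
        flags.append("--incognito")  # open Chrome in incognito mode
    return flags


def make_driver(driver_path, flags):
    """Create a Chrome driver the Selenium 4 way (hypothetical helper)."""
    # Imported lazily so chrome_flags() can be used without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service

    options = webdriver.ChromeOptions()
    for flag in flags:
        options.add_argument(flag)
    # Selenium 4 takes the driver location through a Service object.
    return webdriver.Chrome(service=Service(executable_path=driver_path), options=options)


print(chrome_flags())                                # option 2: headless
print(chrome_flags(headless=False, incognito=True))  # visible, incognito
```

With Selenium 4 installed, make_driver('YOUR_DRIVER_PATH', chrome_flags()) would stand in for the webdriver.Chrome(...) line of option 2.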

3. Identify WebElement(s)

Imagine that you are browsing the Amazon site yourself: what would you do next? To search for a product, you would click on the search box, type a keyword, and click the search button. Selenium can do all of these things, but we need to tell it which part of the page, that is, which HTML element in the DOM (Document Object Model), to act on by creating an object called a WebElement. To find an element, Selenium offers several methods:

find_element_by_id

find_element_by_xpath

find_element_by_tag_name

find_element_by_class_name

find_element_by_css_selector, etc.

Note: Each of these functions returns only the first matching WebElement on the page. When we want to get multiple elements that share the same class, we can use find_elements_by_* instead of find_element_by_*, which returns a list. (The difference is the "s" after the word "element".)
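
The find_element_by_* helpers are the Selenium 3 spelling. Selenium 4 deprecated them in favor of the generic find_element(by, value) and find_elements(by, value) methods, which exist in both versions. The sketch below illustrates the single-versus-list behavior with the generic call. FakePage is a hypothetical stand-in so the snippet runs without a browser, and find_first is an illustrative helper, not part of Selenium; with a real driver you would pass By.ID or By.XPATH from selenium.webdriver.common.by.

```python
BY_ID = "id"  # same string value as selenium.webdriver.common.by.By.ID


class FakePage:
    """Hypothetical stand-in for a driver: maps element ids to fake elements."""

    def __init__(self, elements_by_id):
        self._elements_by_id = elements_by_id

    def find_elements(self, by, value):
        # Like Selenium's find_elements, return a (possibly empty) list
        # instead of raising when nothing matches.
        if by != BY_ID:
            return []
        return list(self._elements_by_id.get(value, []))


def find_first(parent, by, value):
    """Return the first matching element, or None when nothing matches."""
    matches = parent.find_elements(by, value)
    return matches[0] if matches else None


page = FakePage({"twotabsearchtextbox": ["<search box>"]})
search_box = find_first(page, BY_ID, "twotabsearchtextbox")
missing = find_first(page, BY_ID, "no-such-id")
print(search_box, missing)
```

Returning None for a missing element is a design choice of this sketch; Selenium's own find_element raises NoSuchElementException instead.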

To select the search box at the top of the page, right-click on the box and choose Inspect to view its HTML code.

Looking at the HTML code, the id of the search box is "twotabsearchtextbox". Repeat the same steps for the search button, and you will find that its id is "nav-search-submit-button". Now Selenium can select these elements.

# assign any keyword for searching

keyword = "wireless charger"

# create WebElement for a search box

search_box = driver.find_element_by_id('twotabsearchtextbox')

# type the keyword in searchbox

search_box.send_keys(keyword)

# create WebElement for a search button

search_button = driver.find_element_by_id('nav-search-submit-button')

# click search_button

search_button.click()

# let element lookups wait up to 5 seconds for elements to appear

driver.implicitly_wait(5)

# quit the driver after finishing scraping (please keep this line at the bottom)

driver.quit()

If you chose option 1, you will see an Amazon page with the search results for the chosen keyword.

Next, we will pick out the details of each item in the search results.

Before doing that, we create empty lists to hold the data we want to extract.

product_name = []

product_asin = []

product_price = []

product_ratings = []

product_ratings_num = []

product_link = []

Looking at the HTML code, you will see that every item has the classes "s-result-item s-asin …". Therefore, we create a list of WebElements named "items" with find_elements_by_xpath().

Shortcuts Used For XPath:

1. An XPath is written like a normal file path, with forward slashes for navigating through the elements in the HTML.

2. A basic XPath begins with a double slash // followed by a tag name and its attribute value(s). This means "find a tag with this name whose specified attributes equal the given value(s)".

For instance, you can select the first-line header with the XPath '//div[@class="title"]/h1'

3. You can access a direct child node with a single forward slash /, or skip ahead to any nested descendant node with a double forward slash //. In the given example, you can create a WebElement for the highlighted element with the XPath '//div[@class="title"]//span' or '//div[@class="title"]/h1/span'

4. You can use contains to get elements that partially match the provided value. Since the full attribute value of a desired element can be long or variable, contains helps you identify elements using only part of it. You will see one such use below.
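
The slash rules above can be tried without a browser. This sketch uses Python's built-in xml.etree.ElementTree, which supports a small subset of XPath (it has no contains), on a made-up HTML fragment; the fragment and its text are assumptions for illustration. ElementTree paths are relative to the root element, hence the leading dot.

```python
import xml.etree.ElementTree as ET

# A made-up fragment: the span is nested inside h1, which is inside div.title.
page = ET.fromstring(
    "<html><body>"
    '<div class="title"><h1>Results for <span>wireless charger</span></h1></div>'
    '<div class="other"><h1>Ignored</h1></div>'
    "</body></html>"
)

# Rule 2: tag name plus attribute value, then a direct child with "/".
header = page.find('.//div[@class="title"]/h1')

# Rule 3: "//" skips intermediate levels, "/h1/span" spells out each level.
span_any_depth = page.find('.//div[@class="title"]//span')
span_full_path = page.find('.//div[@class="title"]/h1/span')

# A single "/" only looks at direct children, so this finds nothing.
direct_child_span = page.find('.//div[@class="title"]/span')

print(header.tag, span_any_depth.text, direct_child_span)
```

Both span paths reach the same element, while the direct-child path returns None because the span is a grandchild of the div, not a child.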

The elements we want to extract have different classes: some items have the class AdHolder (i.e. sponsored or featured items) while others do not. Using contains in the XPath makes it easier to match all the products on the page. So, we create the WebElements using the XPath '//div[contains(@class, "s-result-item s-asin")]'

items = driver.find_elements_by_xpath('//div[contains(@class, "s-result-item s-asin")]')
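
XPath's contains(@class, "...") is a plain substring test on the whole class attribute, which is why it matches items with and without AdHolder. The sketch below mimics that test in plain Python; the class strings are hypothetical examples, not values copied from Amazon.

```python
TARGET = "s-result-item s-asin"

# Hypothetical class attribute values for four <div> elements.
class_values = [
    "sg-col-4-of-12 s-result-item s-asin sg-col-4-of-16",           # normal item
    "sg-col-4-of-12 s-result-item s-asin AdHolder sg-col-4-of-16",  # sponsored item
    "s-result-item s-widget",                                       # not a product
    "s-asin s-result-item",                                         # same classes, other order
]

# contains() behaves like Python's "in" on the raw attribute string.
matches = [TARGET in value for value in class_values]
print(matches)  # [True, True, False, False]
```

The last entry shows a limitation of this approach: because the test is on the raw string, the classes must appear in the same order and adjacent to each other.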

However, because the elements we need may be loaded dynamically at different times, running the code may raise a NoSuchElementException (or find_elements_by_* may return an empty list) when the elements are not yet available at run time. In that case, an explicit wait helps, as follows:

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

items = WebDriverWait(driver,10).until(EC.presence_of_all_elements_located((By.XPATH, '//div[contains(@class, "s-result-item s-asin")]')))

This code tells Selenium to wait for a maximum of 10 seconds until elements matching the XPath are present on the page, and then stores the WebElements in the variable "items". If nothing is found within that time, it raises a TimeoutException. There are various other EC (expected conditions) that we can use depending on the circumstances. Now you have the items from the first page of search results.
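
Conceptually, an explicit wait polls a condition (WebDriverWait does so every 0.5 seconds by default) until it returns something truthy or the timeout expires. The following is a simplified sketch of that idea in plain Python, not Selenium's actual implementation; wait_until and fake_lookup are hypothetical names, and the built-in TimeoutError stands in for Selenium's TimeoutException.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.5):
    """Call condition() repeatedly until it is truthy or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result  # e.g. a non-empty list of elements
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)


# Simulate elements that only "appear" on the third poll.
attempts = {"count": 0}


def fake_lookup():
    attempts["count"] += 1
    return ["item-1", "item-2"] if attempts["count"] >= 3 else []


items_found = wait_until(fake_lookup, timeout=2.0, poll_interval=0.01)
print(items_found, attempts["count"])
```

The empty list returned on the first two polls counts as "not ready yet", which mirrors how a presence condition keeps waiting while nothing matches.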

4. Scrape Amazon Product Data from WebElement(s)

There are two common ways to scrape Amazon product data from the elements:

Getting the text inside the element(s)

Getting an attribute's value

To get the data of every item, such as the product's name, price, and ASIN, we iterate over the items with a for loop and extract the data from each one.

Getting A Product’s Name

The first piece of data we want to extract is the product name, which is located in a span tag with the class "a-size-medium a-color-base a-text-normal". The property for getting the text inside a WebElement is .text

for item in items:

    name = item.find_element_by_xpath('.//span[@class="a-size-medium a-color-base a-text-normal"]')

    product_name.append(name.text)

Note: Here we call find_element_by_* on the WebElement "item" that we already have, not on the "driver", because we want sub-elements of that WebElement. Therefore, we begin the XPath with .// rather than // to tell Selenium to search only inside that WebElement. Otherwise, Selenium would search the whole page.

Getting An ASIN Number Of A Product

An ASIN (Amazon Standard Identification Number) is an identifier used to recognize a unique product on Amazon. In the HTML document, it is the value of the data-asin attribute of every item. To get an attribute's value, we can use the method .get_attribute("ATTR_NAME")

for item in items:

    name = item.find_element_by_xpath('.//span[@class="a-size-medium a-color-base a-text-normal"]')

    product_name.append(name.text)

    data_asin = item.get_attribute("data-asin")

    product_asin.append(data_asin)

# the following print statements check that we scraped the data we want

print(product_name)

print(product_asin)

Find The Price

Not all products have prices, which creates a small problem with find_element_by_*. As mentioned earlier, find_element_by_* returns a single WebElement, whereas find_elements_by_* returns a list. If Selenium cannot find the price element in an item, find_element_by_* raises an error (NoSuchElementException), whereas find_elements_by_* simply returns an empty list. For the price, we extract the whole part and the fraction part, which are the numbers before and after the decimal point respectively.

for item in items:

    # find prices

    whole_price = item.find_elements_by_xpath('.//span[@class="a-price-whole"]')

    fraction_price = item.find_elements_by_xpath('.//span[@class="a-price-fraction"]')

    if whole_price != [] and fraction_price != []:

        price = '.'.join([whole_price[0].text, fraction_price[0].text])

    else:

        price = 0

    product_price.append(price)
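
The joined price above is still a string, and 0 doubles as the "no price" marker. If you want numbers instead, a small helper like the one below may be useful. It is a sketch under assumptions: combine_price is a hypothetical name, and it assumes the whole part may carry thousands separators or a trailing dot, which you should confirm against the actual page text.

```python
def combine_price(whole_text, fraction_text):
    """Join the whole and fraction parts into a float, or None if either is missing."""
    # Keep digits only, dropping separators such as "," or a trailing ".".
    whole = "".join(ch for ch in whole_text if ch.isdigit())
    fraction = "".join(ch for ch in fraction_text if ch.isdigit())
    if not whole or not fraction:
        return None
    return float(f"{whole}.{fraction}")


print(combine_price("19", "99"))     # 19.99
print(combine_price("1,299", "99"))  # 1299.99
print(combine_price("", "99"))       # None
```

Using None rather than 0 for a missing price keeps "no price listed" distinct from a real price of zero.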

Getting Ratings As Well As Rating Numbers

We usually prefer to buy products with ratings above 4. Furthermore, the number of ratings tells us how many customers have rated the product, that is, how many purchasers the rating is based on.

Inside the <div> tag element there are two <span> tag elements. To scrape ratings and ratings_num, we create a list of WebElements named ratings_box that grabs the two <span> tag elements. Then we get the aria-label attribute from each <span> tag element, as shown:

for item in items:

    # find a ratings box

    ratings_box = item.find_elements_by_xpath('.//div[@class="a-row a-size-small"]/span')

    # both <span> elements are needed, so check that two were found

    if len(ratings_box) >= 2:

        ratings = ratings_box[0].get_attribute('aria-label')

        ratings_num = ratings_box[1].get_attribute('aria-label')

    else:

        ratings, ratings_num = 0, 0

    product_ratings.append(ratings)

    product_ratings_num.append(str(ratings_num))
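
The aria-label values are text, so comparing ratings (for example, keeping only products rated above 4) needs a conversion step. The sketch below assumes labels shaped like "4.5 out of 5 stars" and "1,234"; those formats, and the helper names parse_rating and parse_count, are assumptions for illustration and should be checked against what the page actually returns.

```python
import re


def parse_rating(label):
    """Return the first number in a label such as '4.5 out of 5 stars', or None."""
    match = re.search(r"\d+(?:\.\d+)?", label or "")
    return float(match.group()) if match else None


def parse_count(label):
    """Return the digits of a label such as '1,234' as an int, or None."""
    digits = re.sub(r"\D", "", label or "")
    return int(digits) if digits else None


print(parse_rating("4.5 out of 5 stars"))  # 4.5
print(parse_count("1,234"))                # 1234
print(parse_rating(None))                  # None
```

Both helpers accept None because get_attribute returns None when an element has no aria-label.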

Finding Details’ Link

Finally, we may want to look at a product's data in detail. Extracting each product's link gives us a way to read more. You can find the <a> tag element for the product link by inspecting the product's name or image.

for item in items:

    link = item.find_element_by_xpath('.//a[@class="a-link-normal a-text-normal"]').get_attribute("href")

    product_link.append(link)

driver.quit()

The Whole Code:

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait as wait

from selenium.webdriver.support import expected_conditions as EC

web = 'https://www.amazon.com'

driver_path = 'D:/Programming/chromedriver_win32/chromedriver'

options = webdriver.ChromeOptions()

options.add_argument('--headless')

driver = webdriver.Chrome(options=options, executable_path=driver_path)

driver.get(web)

driver.implicitly_wait(5)

keyword = "wireless charger"

search = driver.find_element_by_xpath('//*[(@id = "twotabsearchtextbox")]')

search.send_keys(keyword)

# click search button

search_button = driver.find_element_by_id('nav-search-submit-button')

search_button.click()

driver.implicitly_wait(5)

product_asin = []

product_name = []

product_price = []

product_ratings = []

product_ratings_num = []

product_link = []

items = wait(driver, 10).until(EC.presence_of_all_elements_located((By.XPATH, '//div[contains(@class, "s-result-item s-asin")]')))

for item in items:

    # find name

    name = item.find_element_by_xpath('.//span[@class="a-size-medium a-color-base a-text-normal"]')

    product_name.append(name.text)

    # find ASIN number

    data_asin = item.get_attribute("data-asin")

    product_asin.append(data_asin)

    # find price

    whole_price = item.find_elements_by_xpath('.//span[@class="a-price-whole"]')

    fraction_price = item.find_elements_by_xpath('.//span[@class="a-price-fraction"]')

    if whole_price != [] and fraction_price != []:

        price = '.'.join([whole_price[0].text, fraction_price[0].text])

    else:

        price = 0

    product_price.append(price)

    # find ratings box

    ratings_box = item.find_elements_by_xpath('.//div[@class="a-row a-size-small"]/span')

    # find ratings and ratings_num (both <span> elements are needed)

    if len(ratings_box) >= 2:

        ratings = ratings_box[0].get_attribute('aria-label')

        ratings_num = ratings_box[1].get_attribute('aria-label')

    else:

        ratings, ratings_num = 0, 0

    product_ratings.append(ratings)

    product_ratings_num.append(str(ratings_num))

    # find link

    link = item.find_element_by_xpath('.//a[@class="a-link-normal a-text-normal"]').get_attribute("href")

    product_link.append(link)

driver.quit()

# to check data scraped

print(product_name)

print(product_asin)

print(product_price)

print(product_ratings)

print(product_ratings_num)

print(product_link)
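
Six parallel lists are easy to print but awkward to store. As a possible next step, the sketch below zips them into one dictionary per product. The function name to_records, the key names, and the sample values are all hypothetical placeholders, not scraped data.

```python
def to_records(names, asins, prices, ratings, ratings_nums, links):
    """Combine the parallel lists into a list of one-dict-per-product records."""
    keys = ("name", "asin", "price", "ratings", "ratings_num", "link")
    columns = (names, asins, prices, ratings, ratings_nums, links)
    # Parallel lists only line up if every list has one entry per item.
    if len({len(column) for column in columns}) > 1:
        raise ValueError("all lists must have the same length")
    return [dict(zip(keys, row)) for row in zip(*columns)]


# Placeholder values standing in for one scraped item.
records = to_records(
    ["Example Charger"],
    ["B000000000"],
    ["19.99"],
    ["4.5 out of 5 stars"],
    ["1,234"],
    ["https://www.amazon.com/dp/B000000000"],
)
print(records[0]["name"], records[0]["price"])
```

The length check matters here because a lookup that fails midway through the loop would leave the lists with different lengths and silently misalign the rows.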

Thanks a lot for reading this! We hope this tutorial helps you. Part 2 will scrape across multiple result pages and store the data in a SQLite database (sqlite3).

source code: https://www.retailgators.com/web-scraping-in-python-with-selenium-for-amazon-search-results-part-1.php

About the Creator

Retailgators

Retailgators is a web scraping company delivering data using cutting-edge technologies. We have unique scraping solutions that fulfill every requirement, however minor.
