Two Simple Reasons that ChatGPT (AI) Won’t Work in End-to-End Test Automation. Part 1

It is just hype, like many others over the last two decades.

With the hype around ChatGPT, AI in Test Automation has been talked about a lot; some have even listed it as a testing trend for 2023. This view is wrong.

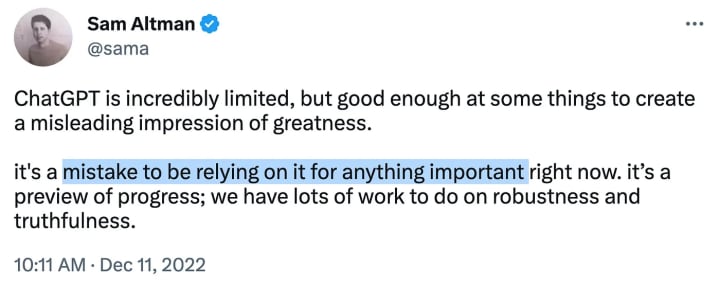

“ChatGPT is incredibly limited”

This comment is not mine. It is from a tweet by Sam Altman, CEO of OpenAI (the company behind ChatGPT).

As a test automation engineer, I consider my job quite important. Do you?

If you value your work as I value mine, then “talking about AI replacing test automation” is totally irresponsible and wrong.

Accept that “ChatGPT is incredibly limited” for general use. Next, I will explain why it is even more limited in the context of real End-to-End test automation.

Recap of What ChatGPT can do in End-to-End Test Automation

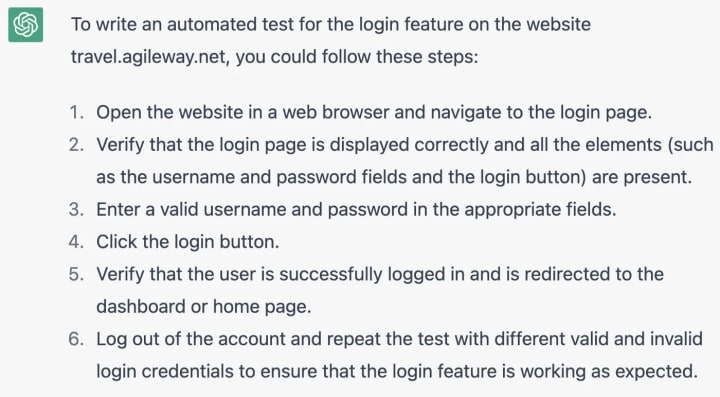

I did a few exercises using ChatGPT for testing (in this article), like this simple one below.

ChatGPT only generated generic, hypothetical test steps.

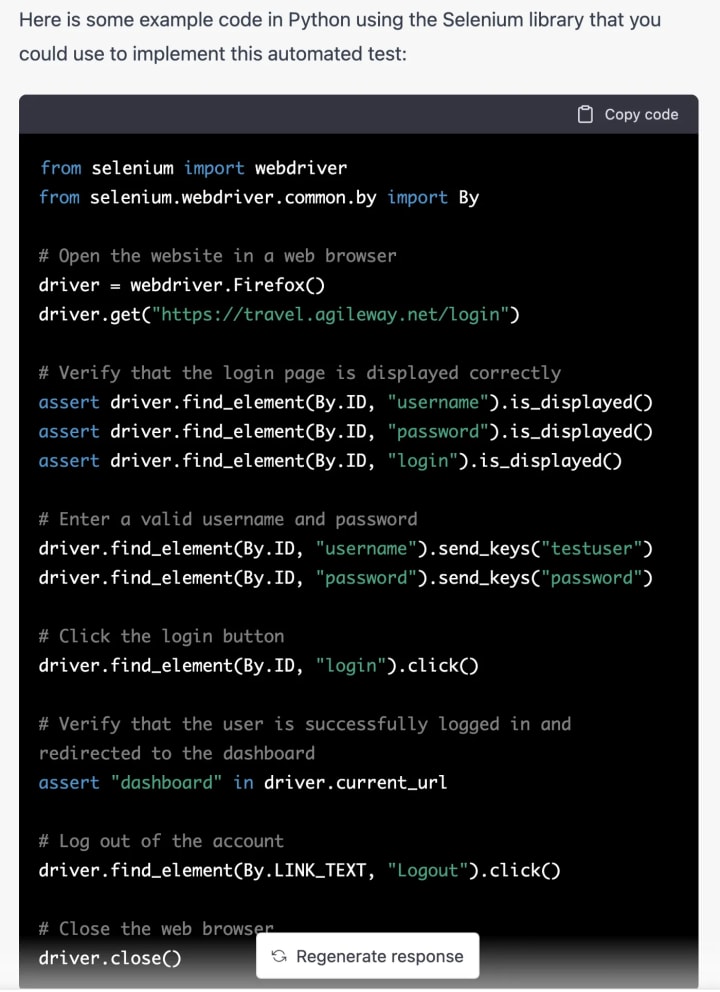

And a test script.

This is a “Hello World” (i.e. simplest) level test case. Yet, to me, ChatGPT treated it like writing a made-up story: its test steps and test script are irrelevant to my target site, https://travel.agileway.net (this site has no dashboard).

I do like ChatGPT’s writing and translation ability, including poems and made-up stories. (For the past week, I have been sending ChatGPT’s retellings of Chinese historical stories, and they are quite amusing because 60–90% of each story is incorrect.) But hey, we are talking about engineering, and about testing where every test step must be 100% correct.

“A simple rule of end-to-end test automation: if one step of a 100-step test script is not implemented or not working reliably, the whole test script is useless!” — Zhimin Zhan
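The arithmetic behind this rule can be sketched in a few lines. This is only an illustration, assuming each step fails independently with the same probability; the function name `script_pass_probability` and the sample reliabilities are made up for the example, not taken from any tool.

```python
def script_pass_probability(step_reliability: float, steps: int) -> float:
    """Chance that every step of a script passes.

    Assumption (for illustration only): steps fail independently and
    all share the same per-step reliability.
    """
    if not 0.0 <= step_reliability <= 1.0:
        raise ValueError("step_reliability must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must not be negative")
    # The script passes only if step 1 AND step 2 AND ... all pass.
    return step_reliability ** steps


if __name__ == "__main__":
    # Hypothetical per-step reliabilities for a 100-step script.
    for reliability in (0.90, 0.99, 0.999):
        chance = script_pass_probability(reliability, 100)
        print(f"{reliability:.3f} per step -> {chance:.2%} for 100 steps")
```

Under these assumptions, steps that each work 99% of the time give a 100-step script that passes only about 37% of the time. "Mostly correct" generated steps are therefore not good enough.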

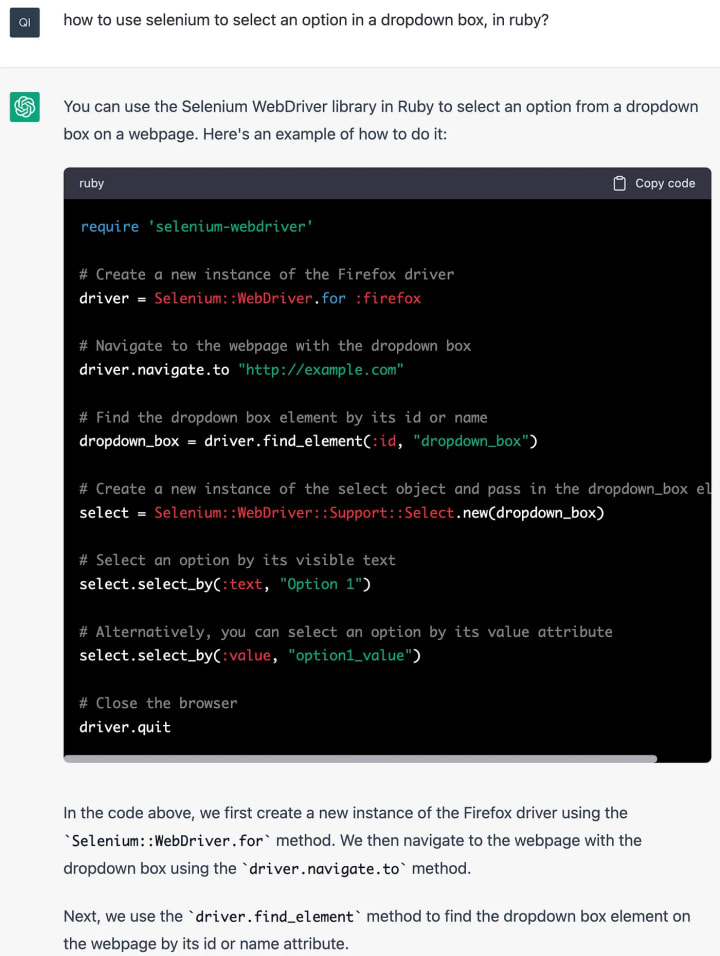

As you can see, ChatGPT is useless for creating a valid test script (and an invalid one will often consume more time). ChatGPT might help answer a specific question, like the example below.

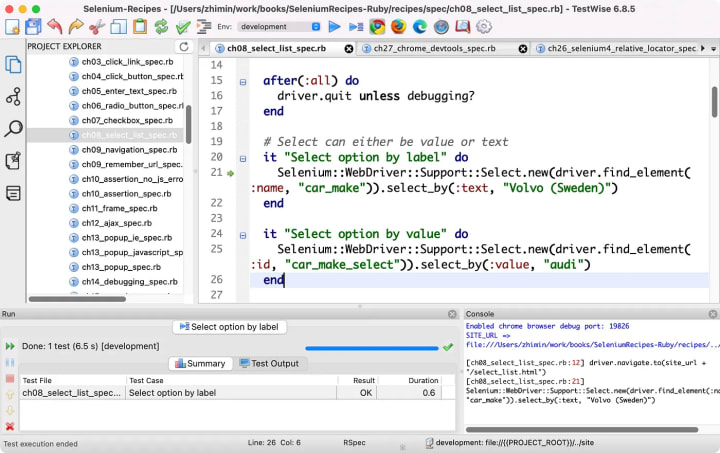

The answer is correct. But you can get the same answer on Google or Stack Overflow, and there is a much quicker way to get a solution for a generic problem like this. For example, find the recipe in “Selenium WebDriver Recipes in Ruby” and run it.

My points here:

- ChatGPT definitely won’t create a working complete test script for you.

- ChatGPT does not even create a single step for your scenario (in your target app).

- It only offers a well-known solution to a generic problem.

Why is AI (such as ChatGPT) very limited in assisting End-to-End Test Automation?

Two simple reasons (there are more, but these two should be enough).

- AI, if indeed it works, will achieve programmer-less coding first, as development is easier than E2E testing.

“Testing is harder than developing. If you want to have good testing you need to put your best people in testing.” - Gerald Weinberg, in a podcast (2018)

- Test Creation is only a minor effort in Test Automation.

1. Test Automation is harder than Coding, and AI is not replacing programmers yet.

Developing enterprise software is not hard; to some degree, it is quite mechanical, as it usually follows the MVC (Model-View-Controller) pattern. A typical user story:

- Transform the UI design (created by graphic artists) into HTML

- Code the controller to read parameters

- Perform CRUD (create, read, update and delete) operations on the model and database

- Return the response to the user
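To show how mechanical these four steps are, here is a minimal sketch in plain Python with no web framework. Everything in it is hypothetical and invented for illustration: the `Flight` model, the in-memory `FlightStore` standing in for a database, and the `create_flight_action` controller.

```python
from dataclasses import dataclass
from html import escape
from typing import Dict, Optional, Tuple


@dataclass
class Flight:
    """Model: a hypothetical record for illustration."""
    id: int
    origin: str
    destination: str


class FlightStore:
    """In-memory stand-in for the database tier (CRUD only)."""

    def __init__(self) -> None:
        self._rows: Dict[int, Flight] = {}
        self._next_id = 1

    def create(self, origin: str, destination: str) -> Flight:
        flight = Flight(self._next_id, origin, destination)
        self._rows[flight.id] = flight
        self._next_id += 1
        return flight

    def read(self, flight_id: int) -> Optional[Flight]:
        return self._rows.get(flight_id)

    def update(self, flight_id: int, destination: str) -> Optional[Flight]:
        flight = self._rows.get(flight_id)
        if flight is not None:
            flight.destination = destination
        return flight

    def delete(self, flight_id: int) -> bool:
        return self._rows.pop(flight_id, None) is not None


def render_flight(flight: Flight) -> str:
    """View: turn the model into HTML, escaping user-supplied text."""
    return (f"<p>Flight {flight.id}: {escape(flight.origin)} "
            f"to {escape(flight.destination)}</p>")


def create_flight_action(store: FlightStore,
                         params: Dict[str, str]) -> Tuple[int, str]:
    """Controller: read parameters, touch the model, return a response."""
    origin = params.get("origin", "").strip()
    destination = params.get("destination", "").strip()
    if not origin or not destination:
        return 400, "<p>origin and destination are required</p>"
    flight = store.create(origin, destination)
    return 201, render_flight(flight)


if __name__ == "__main__":
    store = FlightStore()
    print(create_flight_action(store, {"origin": "Sydney",
                                       "destination": "New York"}))
```

Each user story repeats the same shape with different fields, which is why this kind of coding feels routine.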

That’s why many developers refer to their work as “cutting code”, relying on Stack Overflow.

I started web development in 1997. Compared to the large system software I developed solo, such as TestWise (a functional testing IDE) and the international award-winning BuildWise (a Continuous Testing server), web development is really easy. The challenging part is end-to-end testing; coding is relatively minor and mechanical.

1) Test Automation is Harder Than Coding

The fact: most software projects can reach a production release, regardless of quality or whether they are on time and on budget. But how many software teams that you have worked with achieved real test automation success that enables ‘daily production releases’? My guess: the number in your mind now is 0.

“In my experience, great developers do not always make great testers, but great testers (who also have strong design skills) can make great developers. It’s a mindset and a passion. … They are gold”. - Google VP Patrick Copeland, in an interview (2010)

“95% of the time, 95% of test engineers will write bad GUI automation just because it’s a very difficult thing to do correctly”. - Microsoft Test Guru Alan Page, in an interview (2015)

2) AI Coding Has Already Been Tried, and It Failed

AI in coding (or codeless) is not a new concept at all. Personally, I have experienced two waves. The first one was in the 90s. I was at university then, using a special descriptive text as input to a code generator to produce software. It sucked!

In ~2008, I worked on two projects with workflow software (using BPML), hoping to create software with fewer or no developers. Of course, both failed. I remember the “perfect” way of working that was pictured in one project:

- Business Analysts create XForm (I voted yes as one of the three WWW8 reviewers for this, which I regretted) as the UI tier

- Business Analysts specify business rules mostly via drag-and-drop UI (BPML underneath)

If the software is generated, no testing is required! How good was that! It turned out to be a nightmare, of course. (I developed a pure Java implementation to replace the expensive, buggy and non-working workflow engine.)

The fact: the demand for programmers has been high for the past four decades. The average salary of programmers in top tech companies is increasing quickly.

Please note that the majority of programmers are hired to work on enterprise apps, in which creativity is not required.

My point: if AI (like ChatGPT) could do real test automation, it must have achieved AI coding first. But if so, there would be little or no need for software testing, as there would be no human errors. Regarding total job replacement, we test automation engineers are safer than programmers.

---

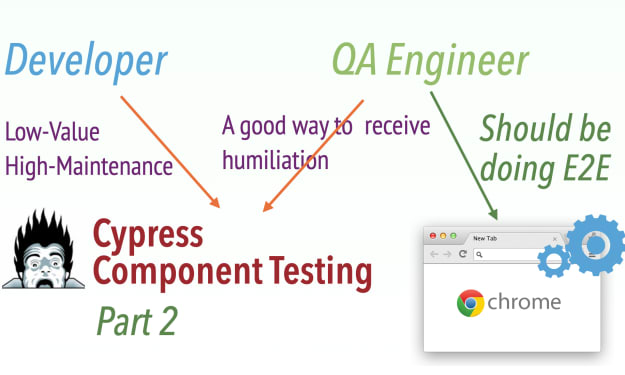

In Part 2, I will cover the second reason.

The original article was published on my Medium Blog, 2023-02-23

About the Creator

Zhimin Zhan

Test automation & CT coach, author, speaker and award-winning software developer.

A top writer on Test Automation, with 150+ articles featured in leading software testing newsletters.
