The Dark Side of Social Media Screening Technology

Your social media activity may be preventing you from finding a job in ways you’ve never imagined.

I lead the recruiting department of a large New York City social services agency. Recently, we purchased social media background reports on each of the finalist candidates for an executive-level position. The reports came from a leading social media screening provider that uses computer algorithms to gather and analyze language in any publicly available social media content.

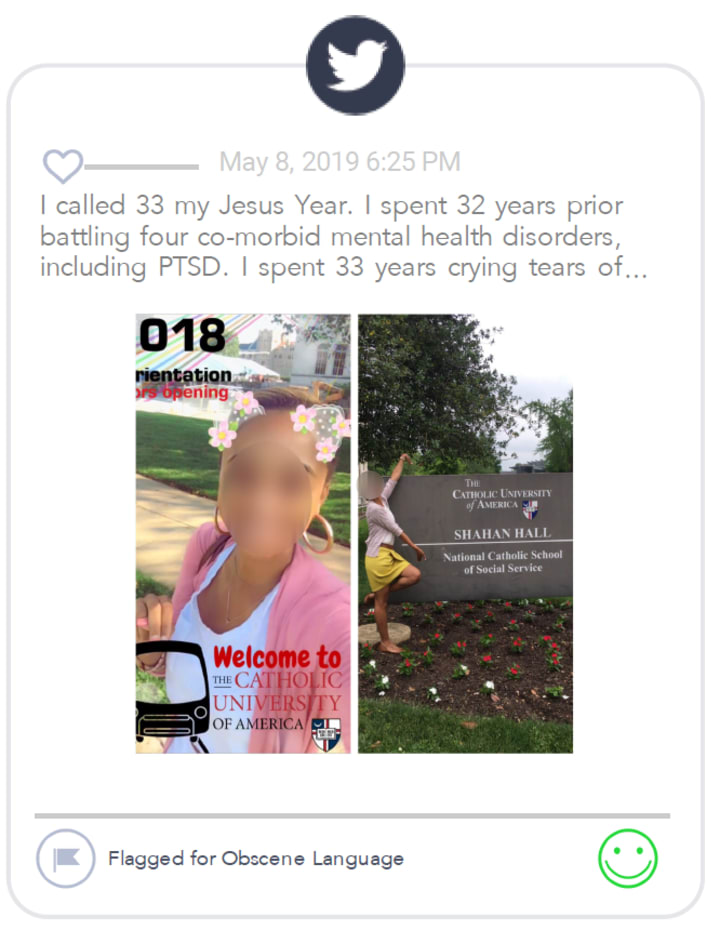

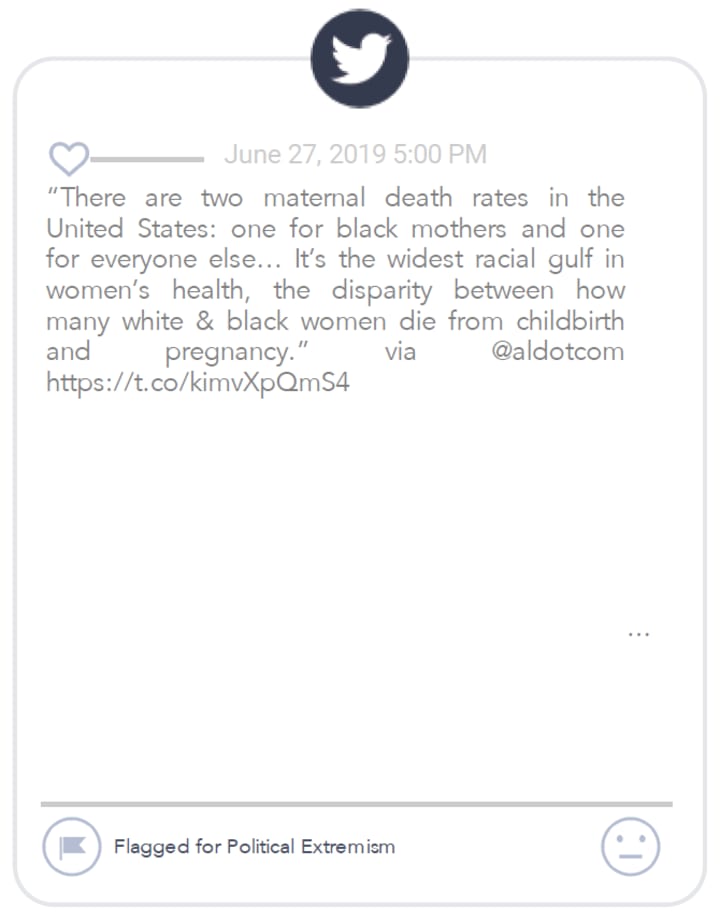

The images above are excerpts from one of those reports. The candidate under review has a thriving career in government and community relations and is a highly regarded professional in this space. It’s important to note that the tweets featured in them aren’t even the candidate’s own posts, but posts they liked. Yes, the algorithms are sizing you up that way, too.

The candidate happens to be a person of color. And tellingly, the only thing these posts have in common is that they reference people of color in affirming and supportive ways. None are even remotely extreme or obscene by any understanding of those terms, and yet the algorithms flagged them as such.

In this particular case, there was a human being (me) assessing the usefulness of the information we received in this custom report. However, this technology is also being used to assess the “riskiness” of very large numbers of candidates at once. And that’s where things get scary.

Consider this promise from social media screening company ThirdPro:

> Combing through a large number of candidates, or hiring the wrong ones, is costly to your company. The ThirdPro audit allows recruiters to quickly eliminate risky candidates from the process and insures against the risk of hiring a candidate who will pose a real risk for the organization.

Let’s say you and 99 other people all applied for the same job, but because you are a person of color and tend to like posts by and about people of color, the algorithms (created by programmers who are 71% White, 20% Asian, and only 5% Black) deem your posts politically extreme or obscene. As a recruiter, I see 100 applicants sorted into a color-coded indicator scheme that tells me where they fall on the spectrum of riskiness. Green = safe, red = risky. Since I have limited time to review candidates (and let’s face it, anything that can help me narrow down the pile in a reasonable way is welcome), I focus on the “green” resumes. And I will never even see your resume because the algorithms have confined you to the red zone.

Screening technology companies hire experts in Industrial-Organizational Psychology to ensure their searches are sufficiently non-racist to prevent lawsuits. I would like to believe they are also addressing the moral repercussions of their work rather than simply looking after bare-minimum compliance, but my personal experience with the company we used makes me doubtful.

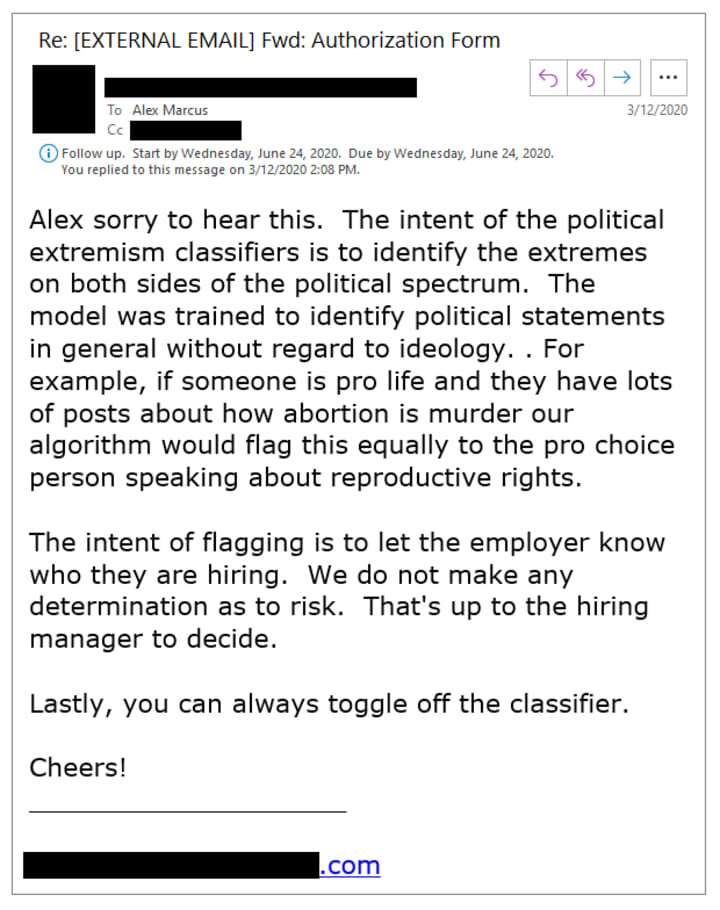

I thought perhaps the company would be alarmed to hear that their results were problematic from a racial perspective, so I emailed them about my concerns:

Were they concerned?

(Cheers?)

I fail to see how any of the following constitutes a political stance: a Black father being proud of his son; a Black woman being proud to have overcome mental health challenges to graduate from college; the disparity in maternal death rates between Black and non-Black mothers; or Black girls and women being inspired by a State of the Union address. The comparison to abortion activism is absurd and cruel.

I said as much in my follow-up response to this company. Not surprisingly, that was the last I heard from them.

There is always a human being with unconscious bias making decisions: programmers, data scientists, analysts. Everyone who touches this data influences the outcome. And the outcome, as we’ve seen, can be shockingly unjust. How many people of color will never even be considered for jobs because of a social media presence that any reasonable person would assume is benign?

I asked Roy Altman, an HR technology management and analytics expert, whether any auditing firms or government regulatory bodies are conducting objective assessments to protect the public from unintended harmful impact. “Absolutely not,” he replied. “This technology is so new that it’s the Wild West right now.”

So please, if you are a company: think twice before investing in technology that promises to save you time and money by subjecting your candidates to social media screening, personality assessments, facial expression analysis (yes, that’s what they’re doing with your video interview), or any other computer-assisted weeding out of “undesirable” candidates. I know poring through resumes is a pain in the ass, but that’s what your recruiters are trained to do.

If you’re a recruiter or hiring manager, work to identify your own unconscious bias and confront it. Bias runs deep and can never be fully eliminated, but its effects can be mitigated if you care to create a workplace that gives equitable opportunity to people of all backgrounds.

And if you’re a candidate, make all of your social media accounts private while you are looking for a job. As far as I know, it is not possible to conduct searches on private accounts. Trying to take down content that you think may count against you will drive you insane, and it won’t be enough anyway: your accounts also include a long history of likes that you can do nothing about.

* * * *

For more information on algorithmic bias, check out Joy Buolamwini’s TED talk “How I’m fighting bias in algorithms” and upcoming book, Justice Decoded; and Safiya Umoja Noble’s book Algorithms of Oppression.

If you’re aware of AI harms or biases, you can report them by contacting the Algorithmic Justice League.

* * * *

This article first appeared on Medium.
