Building a Data Lakehouse in Azure with Databricks

How to build a modern Data Platform with Databricks and Azure Cloud

If you want to build a modern data platform for your organisation, the Data Lakehouse is one of the more promising options available. If you or your company use the Microsoft Azure Cloud, there are several ways to implement one. One of them is Databricks.

What is a Data Lakehouse again?

Let's start with a short recap of what a Data Lakehouse is. It is not only the integration of a Data Lake with a Data Warehouse, but rather the integration of a Data Lake, a Data Warehouse, and purpose-built storage, which makes unified governance and easy data movement possible [1]. In my own experience, Data Lakes can often be implemented much faster than Data Warehouses. Once all data is accessible, Data Warehouses can still be added on top, so that the overall structure works as a hybrid solution.

Benefits of an (Azure Databricks) Data Lakehouse

A Databricks Lakehouse in the Azure Cloud combines the ACID transactions and data governance of Data Warehouses with the flexibility and cost efficiency of Data Lakes. This enables (Self-Service) Business Intelligence, Machine Learning, and Deep Learning for you and your company. The Databricks Lakehouse keeps the data in a highly scalable cloud environment and is built on open-source data standards, so people can use the data wherever and however they want [2]. Competitors such as Google, AWS, or the platform-independent provider Snowflake naturally offer similar solutions.

Building up a Databricks Solution in Azure

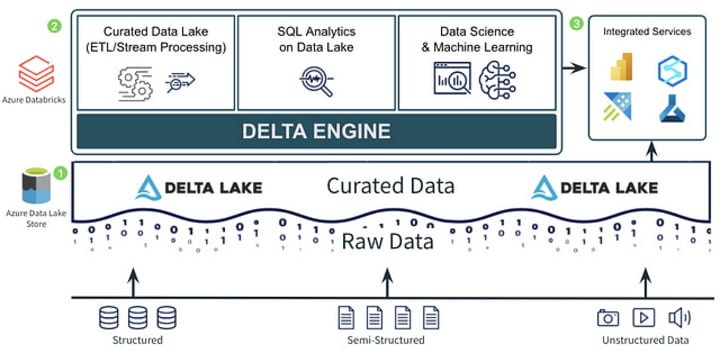

A possible architecture might look like the one shown in the figure, where Azure Data Lake storage serves as the foundation. Of course, you could also use only relational database storage if you do not have any semi-structured or unstructured data in your organisation. However, there is nothing wrong with staying flexible for future requirements.
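
To make the storage foundation a little more concrete, here is a minimal sketch of how a location in Azure Data Lake Storage Gen2 is usually addressed from Spark, using the `abfss://` URI scheme. The helper `adls_uri` and the container, account, and folder names are hypothetical and only serve as an illustration.

```python
def adls_uri(container: str, account: str, path: str = "") -> str:
    """Build an abfss:// URI for a location in an ADLS Gen2 container."""
    if not container or not account:
        raise ValueError("container and account must be non-empty")
    base = f"abfss://{container}@{account}.dfs.core.windows.net"
    # Drop leading/trailing slashes so the URI has exactly one separator.
    clean_path = path.strip("/")
    return f"{base}/{clean_path}" if clean_path else f"{base}/"


# Hypothetical container, storage account, and folder names.
print(adls_uri("lakehouse", "examplestorage", "raw/sales"))
# prints abfss://lakehouse@examplestorage.dfs.core.windows.net/raw/sales
```

Keeping the URI construction in one place makes it easier to move to another container or storage account later without touching every job.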

By storing data with Delta Lake, you enable Data Scientists and Engineers to work with the same production data that your core ETL workloads rely on, as soon as that data is processed [3].
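
As a rough sketch of that idea, an ETL job and a data science notebook could both go through the same Delta table path. The two helper functions below are hypothetical. They assume a PySpark DataFrame and a `SparkSession` (available as `spark` in Databricks notebooks) are passed in, and that the table location is a path of your choice.

```python
def append_to_delta(df, path: str) -> None:
    """Append a Spark DataFrame to the Delta table stored at `path`."""
    df.write.format("delta").mode("append").save(path)


def read_delta(spark, path: str):
    """Load the Delta table stored at `path` as a Spark DataFrame."""
    return spark.read.format("delta").load(path)


# Usage in a Databricks notebook (hypothetical DataFrame and path):
#   append_to_delta(new_orders_df, table_path)   # ETL job writes
#   orders = read_delta(spark, table_path)       # analysts read the same data
```

Because both sides go through the same table, there is no separate export step between the ETL pipeline and the consumers of the data.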

By using the built-in Unity Catalog, you can handle data governance and discovery on Azure Databricks with little effort. Available in Notebooks, Jobs, and Databricks SQL, Unity Catalog provides features and user interfaces that support workloads and users designed for both Data Lakes and Data Warehouses [3]. This governance is also necessary so that the right data reaches the right people at the right time, can be found by them, and can be shared under defined policies. It is also a prerequisite for later approaches such as a Data Mesh. The data can then be used for Business Intelligence, for example with Power BI, or for reporting and dashboarding tasks. You can also connect Python Notebooks or Azure Synapse, which, interestingly, is itself another option for building a Data Lakehouse. In this regard, Microsoft has also launched Microsoft Fabric, which is intended to ease the implementation of data pipelines and a data platform.
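
To illustrate the governance side of Unity Catalog, here is a minimal sketch that generates the SQL statements typically needed to give a group read access to a single table in the three-level namespace (catalog, schema, table). The function name and all object and group names are hypothetical. In a notebook, each statement could then be run with `spark.sql(...)`.

```python
def read_access_statements(catalog: str, schema: str, table: str, principal: str) -> list[str]:
    """Return GRANT statements that let `principal` read one Unity Catalog table."""
    for part in (catalog, schema, table, principal):
        # Names are wrapped in backticks below, so they must not contain one.
        if not part or "`" in part:
            raise ValueError(f"invalid identifier: {part!r}")
    return [
        f"GRANT USE CATALOG ON CATALOG `{catalog}` TO `{principal}`",
        f"GRANT USE SCHEMA ON SCHEMA `{catalog}`.`{schema}` TO `{principal}`",
        f"GRANT SELECT ON TABLE `{catalog}`.`{schema}`.`{table}` TO `{principal}`",
    ]


# Hypothetical catalog, schema, table, and group names.
for statement in read_access_statements("main", "sales", "orders", "bi-analysts"):
    print(statement)
```

Granting access to groups rather than to individual users keeps such policies manageable as the number of data consumers grows.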

Summary

As a contemporary architecture, the Data Lakehouse will probably continue to play a dominant role within organisations. One possible approach is to use Azure Data Lake in combination with Databricks as the Data Warehouse component. As mentioned above, Azure also provides other solutions such as Azure Synapse. At the end of the day, you have to decide which solution best fits your needs and requirements. It is also worth comparing the costs of the individual services.

Sources and Further Readings

[1] AWS, What is a Lake House approach? (2021)

[2] Microsoft, What is the Databricks Lakehouse? (2022)

[3] Analytics on Azure Blog, Simplify Your Lakehouse Architecture with Azure Databricks, Delta Lake, and Azure Data Lake Storage (2022)

About the Creator

The Datanator

Just a regular dude, who likes to write and share all about Data stuff (and other interesting things).
