Semantic role labeling

In natural language processing, semantic role labeling (also called shallow semantic parsing or slot-filling) is the process of assigning labels to words or phrases in a sentence that indicate their semantic role in the sentence, such as agent, goal, or result. It serves to uncover the meaning of the sentence by making explicit who did what to whom.

In machine learning models for natural language processing, semantic role labeling is centered on the predicate, the part of the sentence that expresses its action. The crucial task of SRL is to determine how the other constituents of the sentence (its arguments) relate to this primary predicate. Semantic role labeling is also referred to as thematic role labeling, and it systematically interprets the syntactic expression of a sentence, ideally with the help of a parse tree.
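
To make the predicate-argument idea concrete, here is a minimal Python sketch of what an SRL analysis of a single sentence might look like. The example sentence, the `Argument` and `Frame` classes, and the role names (Agent, Theme, Recipient) are illustrative assumptions, not the output format of any particular SRL tool.

```python
from dataclasses import dataclass, field

@dataclass
class Argument:
    role: str   # semantic role, e.g. "Agent" (illustrative label set)
    start: int  # index of the first token of the argument span
    end: int    # index one past the last token (exclusive)

@dataclass
class Frame:
    tokens: list[str]
    predicate: int  # token index of the predicate
    arguments: list[Argument] = field(default_factory=list)

    def describe(self) -> dict[str, str]:
        """Map each role to the text of its span, plus the predicate itself."""
        out = {"Predicate": self.tokens[self.predicate]}
        for arg in self.arguments:
            out[arg.role] = " ".join(self.tokens[arg.start:arg.end])
        return out

tokens = "Mary sold the book to John".split()
frame = Frame(
    tokens=tokens,
    predicate=1,  # "sold" is the action the other phrases relate to
    arguments=[
        Argument("Agent", 0, 1),      # Mary
        Argument("Theme", 2, 4),      # the book
        Argument("Recipient", 5, 6),  # John
    ],
)

print(frame.describe())
# {'Predicate': 'sold', 'Agent': 'Mary', 'Theme': 'the book', 'Recipient': 'John'}
```

Storing arguments as token spans rather than raw strings keeps the labels anchored to positions in the sentence, which is how most SRL annotation is recorded.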

Semantic role labeling suits NLP tasks that require extracting the different meanings expressed in a text, and its results depend largely on the structure or scheme of the parse trees used. SRL is also used in image captioning for deep learning and computer vision tasks, where it helps extract the relation between the image and its background. In NLP applications, SRL is used for text summarization, information extraction, and machine translation. It also works well for question answering.
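
Question answering is a natural fit because SRL output already records who did what to whom. The sketch below assumes frames stored as simple role-to-text dictionaries and uses a hand-written, hypothetical mapping from question words to roles; real QA systems match questions to frames far more carefully.

```python
from typing import Optional

# Hypothetical mapping from a question word to the role that answers it.
QUESTION_ROLE = {"who": "Agent", "what": "Theme", "whom": "Recipient",
                 "when": "Time", "where": "Location"}

# Frames as role -> text dictionaries; labeled by hand for this example.
frames = [
    {"Predicate": "sold", "Agent": "Mary", "Theme": "the book",
     "Recipient": "John", "Time": "yesterday"},
    {"Predicate": "bought", "Agent": "John", "Theme": "a pen"},
]

def answer(question_word: str, predicate: str, frames: list) -> Optional[str]:
    """Return the span filling the role that the question word asks about."""
    role = QUESTION_ROLE.get(question_word.lower())
    for frame in frames:
        if frame.get("Predicate") == predicate and role in frame:
            return frame[role]
    return None  # no frame with that predicate has the requested role

print(answer("Who", "sold", frames))      # Mary
print(answer("When", "sold", frames))     # yesterday
print(answer("Where", "bought", frames))  # None
```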

How is SRL applied in NLP?

Semantic role labeling is used in NLP applications where extracting semantic meaning is essential. Typically, SRL involves identification (finding the predicate and its arguments), classification (assigning each argument a role), and keeping the roles of the different arguments distinct. In some instances, SRL over full parse trees is not effective, and SRL is then applied via pruning (discarding unlikely candidate arguments) and chunking (shallow parsing). Re-ranking is also applied: several candidate labels are attached to every argument, and the final labeling is then chosen globally, in the context of the whole sentence.
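
The pruning and global re-ranking steps can be sketched as a toy pipeline: drop candidate spans whose best role score is low, then enumerate every joint labeling of the remaining spans, discard inconsistent ones, and keep the highest-scoring one. The spans, scores, threshold, and PropBank-style labels (ARG0, ARG1, ARG2) are invented for illustration, and exhaustive search stands in for the learned re-rankers real systems use.

```python
from itertools import product

# Candidate spans (start, end) with hypothetical local classifier scores.
candidates = {
    (0, 1): {"ARG0": 0.9, "ARG1": 0.3},
    (2, 4): {"ARG0": 0.85, "ARG1": 0.8},  # local argmax would reuse ARG0
    (4, 6): {"ARG2": 0.7, "ARG1": 0.5},
    (3, 4): {"ARG1": 0.2},                # weak candidate, removed by pruning
}
CORE_ROLES = {"ARG0", "ARG1", "ARG2"}

def prune(cands, threshold=0.4):
    """Drop spans whose best role score falls below the threshold."""
    return {span: scores for span, scores in cands.items()
            if max(scores.values()) >= threshold}

def overlaps(a, b):
    return a[0] < b[1] and b[0] < a[1]

def is_consistent(assignment):
    """Reject labelings that reuse a core role or label overlapping spans."""
    labeled = [(span, role) for span, role in assignment if role is not None]
    roles = [role for _, role in labeled if role in CORE_ROLES]
    if len(roles) != len(set(roles)):
        return False
    spans = [span for span, _ in labeled]
    return not any(overlaps(a, b) for i, a in enumerate(spans) for b in spans[i + 1:])

def rerank(cands):
    """Score every consistent joint labeling and return the best one."""
    spans = list(cands)
    options = [[None, *cands[s]] for s in spans]  # None means "not an argument"
    best, best_score = None, float("-inf")
    for choice in product(*options):
        assignment = list(zip(spans, choice))
        if not is_consistent(assignment):
            continue
        score = sum(cands[s][r] for s, r in assignment if r is not None)
        if score > best_score:
            best, best_score = assignment, score
    return {s: r for s, r in best if r is not None}

print(rerank(prune(candidates)))
# {(0, 1): 'ARG0', (2, 4): 'ARG1', (4, 6): 'ARG2'}
```

Exhaustive enumeration grows exponentially with the number of candidates, which is why pruning first matters; it is only practical here because the example is tiny.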

Approaches in Semantic Role Labeling

As the field moved from grammar-based to statistical methods, semantic role labeling has been treated as a supervised learning task that relies on annotated training data. In 2016, Roth and Lapata used the dependency paths connecting a predicate to its related arguments. Their approach is also neural: the paths are embedded by a multi-layered network whose output feeds a final classification layer.
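
As an illustration of the kind of input Roth and Lapata built on, the sketch below extracts the dependency path between a predicate and an argument from a parse stored as head indices and relation labels. The parse, the relation labels, and the arrow notation for path strings are assumptions for this example; their model embeds such paths with a neural network rather than using the strings directly.

```python
# Toy dependency parse of "Mary sold the book to John".
# heads[i] is the index of token i's head (-1 marks the root); labels are illustrative.
tokens = ["Mary", "sold", "the", "book", "to", "John"]
heads = [1, -1, 3, 1, 1, 4]
labels = ["nsubj", "root", "det", "dobj", "prep", "pobj"]

def ancestors(i):
    """Return the chain of tokens from i up to the root, including i."""
    chain = [i]
    while heads[chain[-1]] != -1:
        chain.append(heads[chain[-1]])
    return chain

def dependency_path(pred, arg):
    """Path from the argument up to the lowest common ancestor, then down to the predicate."""
    up, down = ancestors(arg), ancestors(pred)
    common = next(n for n in up if n in down)  # lowest common ancestor
    up_part = [labels[n] + "↑" for n in up[:up.index(common)]]
    down_part = [labels[n] + "↓" for n in reversed(down[:down.index(common)])]
    return " ".join(up_part + down_part)

print(dependency_path(pred=1, arg=0))  # nsubj↑
print(dependency_path(pred=1, arg=5))  # pobj↑ prep↑
```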

Another approach uses a BiLSTM whose input includes character embeddings produced by a convolutional neural network (CNN). This approach has been most effective for … Along with this, Shi and Lin used BERT for semantic role labeling without syntactic features and produced highly accurate results. The relation-by-relation (R-by-R) approach, in turn, is based on the relation between dependency trees and constituent trees. This approach has a significant impact on localizing semantics for specific predicates, since the argument structure is interpreted in terms of lexical units connected through dependency relations. A similar approach uses Combinatory Categorial Grammar (CCG) to extract the dependency relations between a predicate and its arguments.
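
A character-level CNN turns each word into a fixed-size vector by sliding filters over the word's characters and max-pooling over positions. The NumPy sketch below uses random, untrained weights; the alphabet, dimensions, and padding scheme are assumptions, and a real tagger would learn the embeddings and filters during training.

```python
import numpy as np

rng = np.random.default_rng(0)

ALPHABET = "abcdefghijklmnopqrstuvwxyz"
CHAR_DIM, NUM_FILTERS, WIDTH = 8, 16, 3  # illustrative sizes, not tuned values

# Random stand-ins for learned parameters; row 0 of char_emb is for padding/unknown characters.
char_emb = rng.normal(size=(len(ALPHABET) + 1, CHAR_DIM))
filters = rng.normal(size=(NUM_FILTERS, WIDTH, CHAR_DIM))
bias = np.zeros(NUM_FILTERS)

def char_ids(word):
    """Map each character to a row of char_emb and pad both ends (wide convolution)."""
    ids = [ALPHABET.find(c) + 1 for c in word.lower()]  # find() gives -1 for unknown, i.e. row 0
    pad = [0] * (WIDTH - 1)
    return pad + ids + pad

def word_vector(word):
    """Convolve the filters over character windows, then max-pool to a fixed-size vector."""
    x = char_emb[char_ids(word)]                                    # (n_chars, CHAR_DIM)
    n_windows = x.shape[0] - WIDTH + 1
    windows = np.stack([x[i:i + WIDTH] for i in range(n_windows)])  # (n_windows, WIDTH, CHAR_DIM)
    conv = np.einsum("nwd,fwd->nf", windows, filters) + bias        # (n_windows, NUM_FILTERS)
    return np.tanh(conv).max(axis=0)                                # (NUM_FILTERS,)

sentence = "Mary sold the book".split()
char_features = np.stack([word_vector(w) for w in sentence])
print(char_features.shape)  # (4, 16)
```

In a full tagger, each row of `char_features` would be concatenated with that word's embedding before it enters the BiLSTM, which helps with rare and misspelled words.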

Recent Developments in Semantic Role Labeling

The term state-of-the-art is often attached to semantic role labeling in natural language processing because it delivers high accuracy across NLP tasks and with many different approaches.

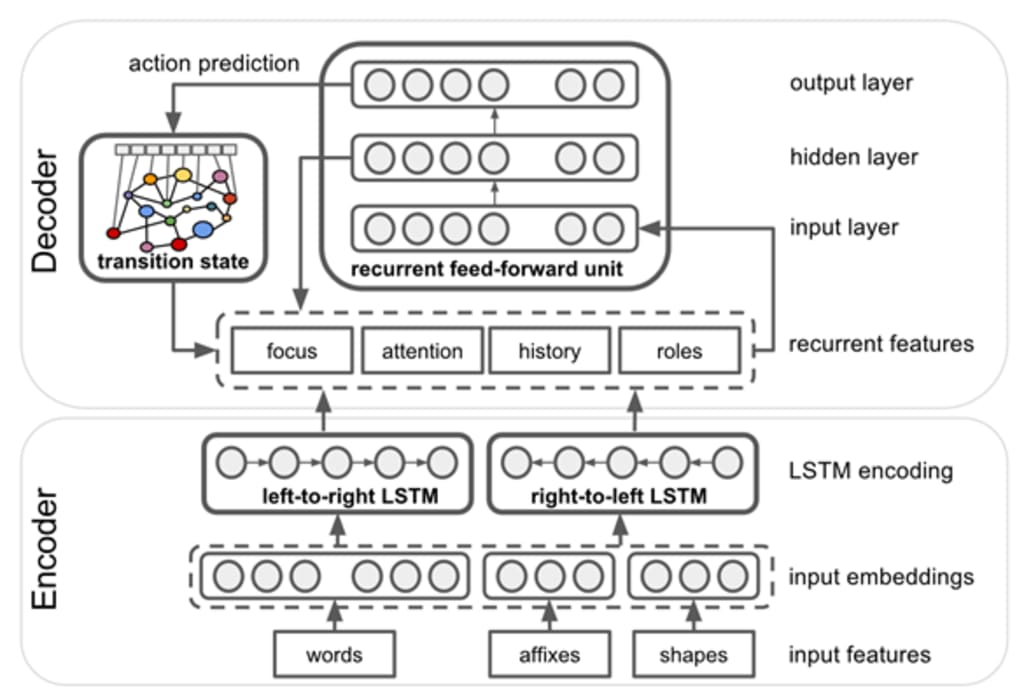

In 2017, Google released SLING, a parser that performs SRL through direct parsing: it captures the semantic labeling directly as a frame graph and is built on an encoder-decoder architecture. It is open-source and one of the most efficient parsing architectures for SRL. Meanwhile, building on PropBank, a corpus annotated with propositions and their related arguments, Universal Decompositional Semantics was introduced in 2016; it adds semantic annotations on top of the syntax of Universal Dependencies.
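
PropBank annotates each predicate with a roleset and numbered core arguments (ARG0, ARG1, ...) plus modifier arguments such as ARGM-TMP for time. A frame graph links the predicate node to its argument nodes through labeled edges. The sketch below converts a hand-written PropBank-style annotation into (predicate, role, argument) edges; the sentence is made up, the role glosses in the comments are our reading of the `sell.01` roleset, and this is not SLING's actual data format or API.

```python
# A PropBank-style annotation, written by hand for illustration.
annotation = {
    "predicate": "sell.01",       # roleset id in PropBank's lemma.NN style
    "args": {
        "ARG0": "Mary",           # seller
        "ARG1": "the book",       # thing sold
        "ARG2": "John",           # buyer
        "ARGM-TMP": "yesterday",  # temporal modifier
    },
}

def to_frame_graph(ann):
    """Turn one predicate-argument structure into labeled graph edges."""
    pred_node = ann["predicate"]
    return [(pred_node, role, span) for role, span in ann["args"].items()]

for edge in to_frame_graph(annotation):
    print(edge)
```

Representing the result as edges makes it easy to merge frames from several predicates into one graph, since arguments shared between predicates simply become shared nodes.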

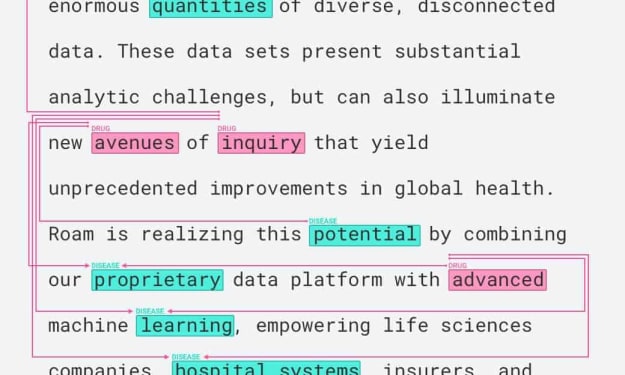

To take one instance of how semantic role labeling has been adopted: in the biomedical field, SRL is used extensively and has simplified the processing of biomedical literature. A key development in this field is information extraction (IE), which has helped identify biomedical relations and interactions. Compared with the methods previously employed for relation extraction, newer SRL techniques can extract not only the meaning of the predicate but also aspects such as timing, location, and manner. Using maximum entropy models, biomedical work has advanced in extracting relations such as gene-disease and protein-protein interactions. SRL also helped in setting up a proposition bank for the domain, which eased information extraction and strengthened techniques for finding biomedical relations.
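
A maximum entropy model is a log-linear (multinomial logistic regression) classifier: the probability of a role is proportional to the exponential of the summed weights of the features that fire for it. The sketch below scores one candidate argument with hand-set, hypothetical weights; the feature strings and the choice of modifier roles (ARGM-LOC, ARGM-TMP, ARGM-MNR for location, timing, and manner) are assumptions, and a real system would learn the weights from an annotated corpus.

```python
import math

# Hypothetical learned weights: (feature, role) -> weight.
WEIGHTS = {
    ("head=in", "ARGM-LOC"): 1.2,
    ("head=in", "ARGM-TMP"): 0.8,
    ("word=nucleus", "ARGM-LOC"): 1.5,
    ("word=1998", "ARGM-TMP"): 1.7,
    ("path=prep↑", "ARGM-MNR"): 0.3,
}
ROLES = ["ARGM-LOC", "ARGM-TMP", "ARGM-MNR"]

def maxent_probs(features):
    """P(role | features) = exp(score(role)) / sum of exp(score) over all roles."""
    scores = {r: sum(WEIGHTS.get((f, r), 0.0) for f in features) for r in ROLES}
    z = sum(math.exp(s) for s in scores.values())
    return {r: math.exp(s) / z for r, s in scores.items()}

# Features for a candidate argument such as "in the nucleus".
probs = maxent_probs(["head=in", "word=nucleus", "path=prep↑"])
print(max(probs, key=probs.get))  # ARGM-LOC
```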

Concluding note

In recent times, deep-learning approaches to NLP tasks have worked with attentive representations built using the attention mechanism. This mechanism weighs the relevant parts of the input as it generates each output, delivering a higher level of efficiency. Self-attention is well accepted for SRL and other NLP tasks because it models the connections between every word of a sentence and every other word. Self-attention modules also help capture hierarchical information in these attentive representations.
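
Self-attention can be written as softmax(QKᵀ/√d)V, where the queries Q, keys K, and values V are linear projections of the word representations, so every word attends to every other word in the sentence. The NumPy sketch below computes a single head with random, untrained projection matrices; the sizes are arbitrary assumptions, and SRL models built on this idea stack several such layers with learned weights.

```python
import numpy as np

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over x of shape (n, d_model)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])         # (n, n): each word against every word
    scores -= scores.max(axis=-1, keepdims=True)    # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # row-wise softmax
    return weights @ v, weights

rng = np.random.default_rng(0)
n_words, d_model, d_k = 6, 8, 4                     # e.g. "Mary sold the book to John"
x = rng.normal(size=(n_words, d_model))             # stand-in word representations
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, attn = self_attention(x, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (6, 4) (6, 6)
```

Each row of `attn` shows how strongly one word draws on every other word, which is what lets a predicate's representation pick up information from distant arguments.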

Semantic role labeling is rightly called state-of-the-art: the technique has universal application and can fit diverse fields. In its micro sense, it dissects predicates across various information structures; in its macro sense, it enables architectures for building innovative machine learning models.

This post was originally published here.

About the Creator

Matthew McMullen

11+ years of experience in machine learning and AI, collecting and providing the training datasets required for ML and AI development, with quality testing for accuracy. Equipped with an additional qualification in machine learning.
